I'm trying to implement a local normalization algorithm in OpenCV to reduce illumination differences across an image. I found a MATLAB function and ported it to OpenCV, but my result differs from the one the MATLAB function produces.

This is my code:

    Mat localNorm(Mat image, float sigma1, float sigma2)
    {
        Mat floatGray, blurred1, blurred2, temp1, temp2, res;

        image.convertTo(floatGray, CV_32FC1);
        floatGray = floatGray/255.0;

        int blur1 = 2*ceil(-NormInv(0.05, 0, sigma1))+1;
        cv::GaussianBlur(floatGray, blurred1, cv::Size(blur1,blur1), sigma1);
        temp1 = floatGray-blurred1;

        cv::pow(temp1, 2.0, temp2);
        int blur2 = 2*ceil(-NormInv(0.05, 0, sigma2))+1;
        cv::GaussianBlur(temp2, blurred2, cv::Size(blur2,blur2), sigma2);
        cv::pow(blurred2, 0.5, temp2);

        floatGray = temp1/temp2;
        floatGray = 255.0*floatGray;
        floatGray.convertTo(res, CV_8UC1);

        return res;
    }
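
For reference, this is the computation the function is meant to perform, as I read it from the code. The notation is mine and not taken from the MATLAB source: $f$ is the input image scaled to $[0, 1]$, $G_{\sigma}$ is a Gaussian kernel with standard deviation $\sigma$, and $*$ denotes convolution.

$$
g = \frac{f - G_{\sigma_1} * f}{\sqrt{G_{\sigma_2} * \left(f - G_{\sigma_1} * f\right)^{2}}}
$$

The numerator corresponds to `temp1`, and the denominator to `temp2` after the second `cv::pow` call.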

The function NormInv is the C++ implementation given by Euan Dean in this post.
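
To make the kernel sizes reproducible without that function, here is a minimal sketch of the same size computation. It assumes that `NormInv(p, 0, sigma)` returns `sigma` times the standard normal quantile of `p`, as MATLAB's `norminv` does. The helper name `kernelSizeForSigma` and the constant `kZ05` are hypothetical and not part of my original code.

```cpp
#include <cassert>
#include <cmath>

// Upper 5% quantile of the standard normal distribution,
// i.e. the value of -norminv(0.05, 0, 1).
constexpr double kZ05 = 1.6448536269514722;

// Hypothetical helper: odd kernel width equivalent to
// 2*ceil(-NormInv(0.05, 0, sigma))+1, under the assumption that
// NormInv(p, 0, sigma) == sigma * (standard normal quantile of p).
int kernelSizeForSigma(double sigma)
{
    assert(sigma > 0.0);
    return 2 * static_cast<int>(std::ceil(kZ05 * sigma)) + 1;
}
```

Under that assumption, `kernelSizeForSigma(2.0)` gives 9 and `kernelSizeForSigma(20.0)` gives 67, which should match the values of `blur1` and `blur2` for the sigmas used below.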

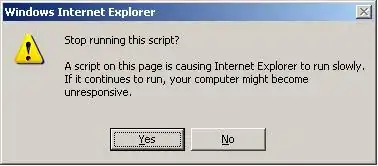

The following shows the result that I am getting and the theoretical result, for the same values of sigma1 and sigma2 (2.0 and 20.0, respectively)

I have tried different values for sigma1 and sigma2, but none of them gives the expected result. I have also tried setting blur1 = 0 and blur2 = 0, so that cv::GaussianBlur derives the kernel size from sigma, but the output is still wrong.
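
One thing that may be worth checking is the last step: `temp1/temp2` is a signed quantity centred roughly on zero, while `convertTo` with `CV_8UC1` saturates its input to the range 0 to 255. The OpenCV-free sketch below imitates that conversion on single values and contrasts it with a min-max rescaling. Both helper names are hypothetical, the rounding only approximates OpenCV's, and I have not confirmed that this is how the MATLAB result is displayed.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical stand-in for the float -> 8-bit step:
// clamp to [0, 255], then round to the nearest integer.
std::uint8_t toU8Saturated(float v)
{
    assert(!std::isnan(v));
    const float clamped = std::max(0.0f, std::min(255.0f, v));
    return static_cast<std::uint8_t>(std::lround(clamped));
}

// Hypothetical alternative: linearly map [lo, hi] onto [0, 255]
// before quantising, so negative inputs keep their ordering.
std::uint8_t toU8MinMax(float v, float lo, float hi)
{
    assert(hi > lo);
    return toU8Saturated(255.0f * (v - lo) / (hi - lo));
}
```

With the saturating version, every negative normalized value scaled by 255 collapses to 0 and everything above 1.0 collapses to 255, whereas the min-max version spreads the whole range `[lo, hi]` over the 8-bit output.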

Any help would be appreciated. Thanks in advance.