Andy Hayden mentioned the correct function (`to_sql`). In this answer, I'll give a complete example, which I tested with Python 3.5 but which should also work with Python 2.7 (and other Python 3.x versions).

First, let's create the dataframe:

    # Create dataframe
    import pandas as pd
    import numpy as np

    np.random.seed(0)
    number_of_samples = 10
    frame = pd.DataFrame({
        'feature1': np.random.random(number_of_samples),
        'feature2': np.random.random(number_of_samples),
        'class': np.random.binomial(2, 0.1, size=number_of_samples),
    }, columns=['feature1', 'feature2', 'class'])

    print(frame)

Which gives:

       feature1  feature2  class
    0  0.548814  0.791725      1
    1  0.715189  0.528895      0
    2  0.602763  0.568045      0
    3  0.544883  0.925597      0
    4  0.423655  0.071036      0
    5  0.645894  0.087129      0
    6  0.437587  0.020218      0
    7  0.891773  0.832620      1
    8  0.963663  0.778157      0
    9  0.383442  0.870012      0

To import this dataframe into a MySQL table:

    # Import dataframe into MySQL
    import sqlalchemy

    database_username = 'ENTER USERNAME'
    database_password = 'ENTER USERNAME PASSWORD'
    database_ip = 'ENTER DATABASE IP'
    database_name = 'ENTER DATABASE NAME'
    database_connection = sqlalchemy.create_engine(
        'mysql+mysqlconnector://{0}:{1}@{2}/{3}'.format(
            database_username, database_password,
            database_ip, database_name))
    frame.to_sql(con=database_connection, name='table_name_for_df', if_exists='replace')
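If the username or password contains characters such as `@` or `/`, a connection URL built with plain string formatting may be parsed incorrectly. Here is a minimal sketch of one way to guard against that, assuming Python 3 (for `urllib.parse`); the helper name `build_mysql_url` and the sample credentials are made up for illustration:

```python
from urllib.parse import quote_plus


def build_mysql_url(username, password, host, database):
    """Return a mysql+mysqlconnector URL with percent-encoded credentials."""
    return 'mysql+mysqlconnector://{0}:{1}@{2}/{3}'.format(
        quote_plus(username), quote_plus(password), host, database)


# Hypothetical credentials; the password contains '@' and '/'.
url = build_mysql_url('alice', 'p@ss/word', '127.0.0.1', 'testdb')
print(url)  # mysql+mysqlconnector://alice:p%40ss%2Fword@127.0.0.1/testdb
```

The resulting string can be passed to `sqlalchemy.create_engine` in place of the formatted URL.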

One catch is that MySQLdb doesn't work with Python 3.x, so we use mysqlconnector instead, which can be installed as follows:

    pip install mysql-connector==2.1.4  # version avoids Protobuf error

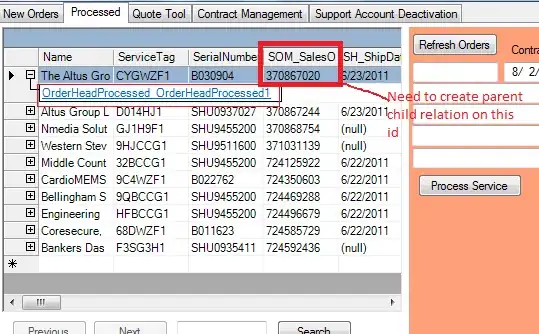

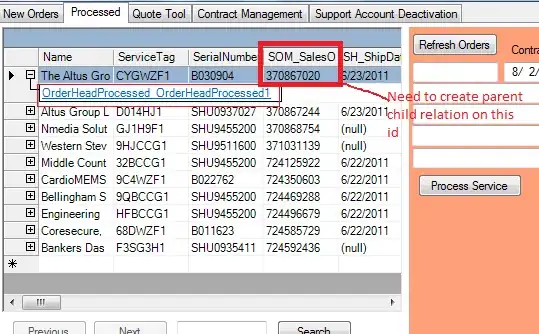

Output:

Note that to_sql creates the table as well as the columns if they do not already exist in the database.
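To see this behaviour without a MySQL server, here is a small sketch that writes a dataframe to a table that does not exist yet and reads it back. It assumes an in-memory SQLite database as a stand-in for MySQL (pandas accepts a `sqlite3` connection directly), and it uses a smaller dataframe than the one above:

```python
import sqlite3

import numpy as np
import pandas as pd

np.random.seed(0)
frame = pd.DataFrame({
    'feature1': np.random.random(5),
    'class': np.random.binomial(2, 0.1, size=5),
}, columns=['feature1', 'class'])

# In-memory SQLite stands in for MySQL here (an assumption for illustration).
connection = sqlite3.connect(':memory:')

# The table does not exist yet; to_sql creates it along with its columns.
frame.to_sql(name='table_name_for_df', con=connection, if_exists='replace')

# Read the table back to check what was written.
stored = pd.read_sql('SELECT * FROM table_name_for_df', connection)
print(stored.columns.tolist())
print(len(stored))

connection.close()
```

By default the dataframe index is written as well, as a column named `index`; pass `index=False` to `to_sql` to leave it out.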