I ran my own benchmarks, loading 61,277,203 lines into memory and inserting the values into a Dictionary / ConcurrentDictionary, and the results seem to support @dtb's answer above that the following approach is the fastest:

```csharp
Parallel.ForEach(File.ReadLines(catalogPath), line =>
{
    // Process each line here (parse it, add it to a ConcurrentDictionary, etc.).
});
```
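For completeness, here is a minimal sketch of the simple pattern with the loop body filled in. It assumes the same tab-separated layout as the producer / consumer example in this answer (tax ID in field 0, genomic ID in field 3); `CatalogLoader` and `LoadParallel` are hypothetical names used only for illustration.

```csharp
using System.Collections.Concurrent;
using System.IO;
using System.Threading.Tasks;

public static class CatalogLoader
{
    // Hypothetical helper. Streams the file with File.ReadLines (lazy, one line
    // at a time) and lets Parallel.ForEach spread the parsing across cores.
    // Assumption: tab-separated lines, tax ID in field 0, genomic ID in field 3.
    public static ConcurrentDictionary<int, int> LoadParallel(string catalogPath)
    {
        var catalog = new ConcurrentDictionary<int, int>();

        Parallel.ForEach(File.ReadLines(catalogPath), line =>
        {
            string[] lineFields = line.Split('\t');
            int genomicId = int.Parse(lineFields[3]);
            int taxId = int.Parse(lineFields[0]);

            // TryAdd is thread-safe; if a genomic ID appears more than once,
            // whichever thread adds it first wins.
            catalog.TryAdd(genomicId, taxId);
        });

        return catalog;
    }
}
```

A plain `Dictionary<int, int>` is not safe to write from several threads at once, which is why the parallel version uses `ConcurrentDictionary`.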

My tests also showed the following:

- `File.ReadAllLines()` and `File.ReadAllLines().AsParallel()` appear to run at almost exactly the same speed on a file of this size. Judging by my CPU activity, both seem to use only two of my 8 cores.

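- Reading all the data up front with `File.ReadAllLines()` appears to be much slower than using `File.ReadLines()` in a `Parallel.ForEach()` loop, presumably because `ReadAllLines()` must load the entire file into memory before any processing can start, whereas `ReadLines()` returns lines lazily so reading and processing overlap.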

- I also tried a producer / consumer (or MapReduce-style) pattern in which one task reads the data and a second task processes it with `Parallel.ForEach()`. This did not seem to outperform the simple pattern above either.
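A rough way to reproduce this kind of comparison is to time each variant with `Stopwatch`. This is a sketch, not the harness behind the numbers in this answer; `LoadingBenchmarks`, `Time` and the `Load*` helpers are hypothetical, and the parsing assumes the same tab-separated layout as the example below. Worth noting when comparing these: `AsParallel()` by itself only wraps the array in a `ParallelQuery<string>`, and the body of an ordinary `foreach` over it still runs on the calling thread. To run the per-line work in parallel with PLINQ it has to go through an operator such as `ForAll`, which is what the second helper does.

```csharp
using System;
using System.Collections.Concurrent;
using System.Diagnostics;
using System.IO;
using System.Linq;
using System.Threading.Tasks;

public static class LoadingBenchmarks
{
    // Hypothetical parsing helper: tax ID in field 0, genomic ID in field 3.
    static void AddLine(ConcurrentDictionary<int, int> catalog, string line)
    {
        string[] fields = line.Split('\t');
        catalog.TryAdd(int.Parse(fields[3]), int.Parse(fields[0]));
    }

    // Reads the whole file into a string[] first, then processes it sequentially.
    public static ConcurrentDictionary<int, int> LoadReadAllLines(string path)
    {
        var catalog = new ConcurrentDictionary<int, int>();
        foreach (var line in File.ReadAllLines(path))
            AddLine(catalog, line);
        return catalog;
    }

    // Reads the whole file first, then uses PLINQ's ForAll to process it in parallel.
    public static ConcurrentDictionary<int, int> LoadReadAllLinesForAll(string path)
    {
        var catalog = new ConcurrentDictionary<int, int>();
        File.ReadAllLines(path).AsParallel().ForAll(line => AddLine(catalog, line));
        return catalog;
    }

    // Streams lines lazily and processes them with Parallel.ForEach.
    public static ConcurrentDictionary<int, int> LoadParallelForEach(string path)
    {
        var catalog = new ConcurrentDictionary<int, int>();
        Parallel.ForEach(File.ReadLines(path), line => AddLine(catalog, line));
        return catalog;
    }

    // Times one loader, prints the elapsed time and returns the entry count.
    public static int Time(string label, Func<ConcurrentDictionary<int, int>> load)
    {
        var sw = Stopwatch.StartNew();
        int count = load().Count;
        sw.Stop();
        Console.WriteLine($"{label}: {count} entries in {sw.ElapsedMilliseconds} ms");
        return count;
    }
}
```

A single run is noisy (disk cache, JIT warm-up), so it is worth running each variant several times, or using a dedicated tool such as BenchmarkDotNet, before drawing conclusions.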

I have included an example of this pattern for reference, since no other answer on this page includes it:

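```csharp
using System.Collections.Concurrent;
using System.IO;
using System.Threading.Tasks;

// catalogPath: path to the tab-separated catalog file.
var inputLines = new BlockingCollection<string>();
ConcurrentDictionary<int, int> catalog = new ConcurrentDictionary<int, int>();

// Producer: a single task reads the file line by line.
var readLines = Task.Factory.StartNew(() =>
{
    try
    {
        foreach (var line in File.ReadLines(catalogPath))
            inputLines.Add(line);
    }
    finally
    {
        // Signal completion even if reading fails, so the consumer cannot block forever.
        inputLines.CompleteAdding();
    }
});

// Consumer: Parallel.ForEach parses lines as they become available.
var processLines = Task.Factory.StartNew(() =>
{
    Parallel.ForEach(inputLines.GetConsumingEnumerable(), line =>
    {
        string[] lineFields = line.Split('\t');
        int genomicId = int.Parse(lineFields[3]);
        int taxId = int.Parse(lineFields[0]);
        catalog.TryAdd(genomicId, taxId);
    });
});

Task.WaitAll(readLines, processLines);
```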

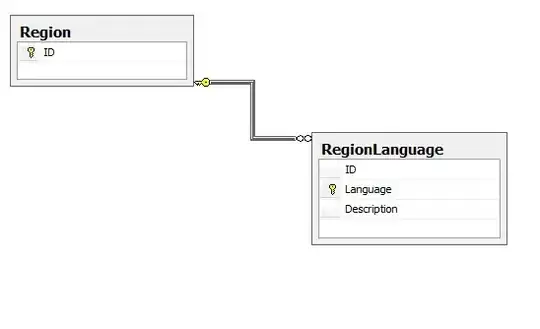

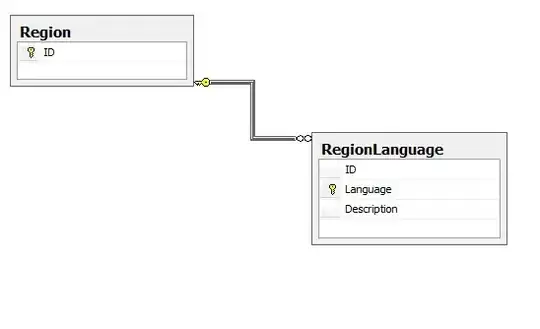

Here are my benchmarks:

I suspect that under certain processing conditions, the producer / consumer pattern might outperform the simple Parallel.ForEach(File.ReadLines()) pattern. However, it did not in this situation.
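If you want to experiment with conditions where the producer / consumer version might do better, two knobs worth trying are a bounded `BlockingCollection` (so the reader cannot race ahead and queue millions of lines in memory) and a non-buffering partitioner (by default `Parallel.ForEach` pulls items from an `IEnumerable` such as `GetConsumingEnumerable()` in chunks). This is an untested sketch, not something benchmarked here: `BoundedCatalogLoader` is a hypothetical name, the default capacity of 10,000 is an arbitrary assumption, and `EnumerablePartitionerOptions.NoBuffering` requires .NET Framework 4.5 or later.

```csharp
using System;
using System.Collections.Concurrent;
using System.IO;
using System.Threading;
using System.Threading.Tasks;

public static class BoundedCatalogLoader
{
    // Hypothetical variant of the producer / consumer loader.
    // Assumption: tab-separated lines, tax ID in field 0, genomic ID in field 3.
    public static ConcurrentDictionary<int, int> Load(string catalogPath, int boundedCapacity = 10000)
    {
        var catalog = new ConcurrentDictionary<int, int>();

        // Bounded: Add blocks once boundedCapacity lines are queued.
        using (var cts = new CancellationTokenSource())
        using (var inputLines = new BlockingCollection<string>(boundedCapacity))
        {
            // Producer: one task streams the file.
            Task readLines = Task.Run(() =>
            {
                try
                {
                    foreach (var line in File.ReadLines(catalogPath))
                        inputLines.Add(line, cts.Token);
                }
                finally
                {
                    // Always signal completion so the consumers can finish.
                    inputLines.CompleteAdding();
                }
            });

            // NoBuffering hands out one line at a time instead of in chunks.
            var source = Partitioner.Create(
                inputLines.GetConsumingEnumerable(),
                EnumerablePartitionerOptions.NoBuffering);

            try
            {
                // Consumers: Parallel.ForEach on the calling thread plus pool threads.
                Parallel.ForEach(source, line =>
                {
                    string[] lineFields = line.Split('\t');
                    int genomicId = int.Parse(lineFields[3]);
                    int taxId = int.Parse(lineFields[0]);
                    catalog.TryAdd(genomicId, taxId);
                });
            }
            catch
            {
                // A consumer failed: unblock a producer waiting on a full
                // collection, let it finish, then rethrow the original error.
                cts.Cancel();
                try { readLines.Wait(); } catch (AggregateException) { }
                throw;
            }

            // Surfaces any I/O exception thrown by the producer.
            readLines.Wait();
        }

        return catalog;
    }
}
```

The cancellation token matters only because the collection is bounded: without it, a parsing error in a consumer could leave the producer blocked forever in `Add` on a full collection.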