Maybe I'm doing something odd, but I may have found a surprising performance loss when using numpy.power, and it seems consistent regardless of the exponent used. For instance, when x is a random 100x100 array,

    x = numpy.power(x,3)

is about 60x slower than

    x = x*x*x
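For a quick check of just this one case, here is a minimal sketch using the standard-library timeit module rather than manual time() calls. The function name compare_cube and its parameters are made up for illustration, and the numbers it prints will vary by machine and NumPy version, so treat the output as indicative only.

```python
import timeit
import numpy as np


def compare_cube(n=100, repeats=5, number=200, seed=0):
    """Time np.power(x, 3) against x*x*x on an n-by-n random array.

    Hypothetical helper for illustration; returns per-call times in seconds
    and whether the two results agree numerically.
    """
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((n, n))

    # Take the best of several repeats to reduce noise from other processes.
    t_pow = min(timeit.repeat(lambda: np.power(x, 3),
                              repeat=repeats, number=number)) / number
    t_mul = min(timeit.repeat(lambda: x * x * x,
                              repeat=repeats, number=number)) / number

    # Both expressions should agree up to floating-point rounding.
    same = np.allclose(np.power(x, 3), x * x * x)
    return t_pow, t_mul, same


if __name__ == "__main__":
    t_pow, t_mul, same = compare_cube()
    print(f"np.power: {t_pow:.2e} s  x*x*x: {t_mul:.2e} s  "
          f"ratio: {t_pow / t_mul:.1f}x  results equal: {same}")
```

Taking the minimum over repeats, instead of the mean, is a common choice for micro-benchmarks because slow outliers usually come from the system rather than the code being measured.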

A plot of the speed-up for various array sizes shows a sweet spot for arrays of around 10k elements, and a consistent 5-10x speed-up at other sizes.

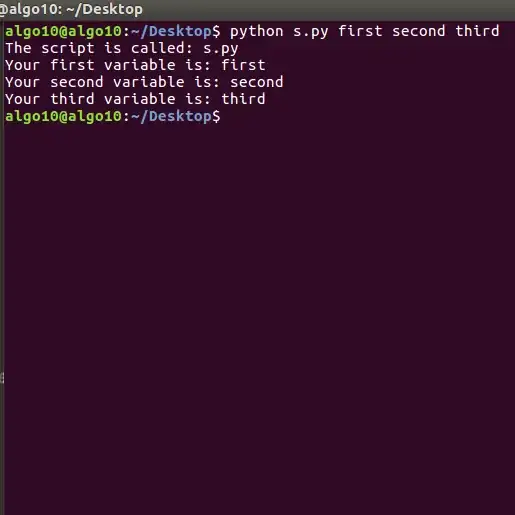

Code to test this on your own machine is below (a little messy):

    import numpy as np
    from matplotlib import pyplot as plt
    from time import time

    ratios = []
    sizes = []

    for n in np.logspace(1,3,20).astype(int):
        a = np.random.randn(n,n)

        # Time the explicit product a*a*a, averaged over 100 runs
        inline_times = []
        for i in range(100):
            t = time()
            b = a*a*a
            inline_times.append(time()-t)
        inline_time = np.mean(inline_times)

        # Time np.power(a,3) the same way
        pow_times = []
        for i in range(100):
            t = time()
            b = np.power(a,3)
            pow_times.append(time()-t)
        pow_time = np.mean(pow_times)

        # Record the number of elements and how much slower np.power was
        sizes.append(a.size)
        ratios.append(pow_time/inline_time)

    plt.plot(sizes,ratios)
    plt.title('Performance of inline vs numpy.power')
    plt.ylabel('Nx speed-up using inline')
    plt.xlabel('Array size')
    plt.xscale('log')
    plt.show()

Does anyone have an explanation?