Making your approach work:

First we fix your original approach:

a = exprnd(1,10000, 1);

x = 0:0.02:10;

count = zeros(size(x)); %%// <= Preallocate the count-vector

for i = 1:length(x);

%%// <= removed the line "count = 0"

for j = 1:length(a);

if (a(j) <= x(i))

count(i) = count(i) + 1; %%// <= Changed count to count(i)

end

end

end

This will make your approach work. If you want to compute this for relatively large vectors a and x, this will be quite slow, as the overall complexity is O(n^2). (Assuming n==length(x)==length(a).)

You could use a different approach for faster runtimes:

Approach of complexity O(n*log(n)) using sort:

Here is an algorithm of complexity O(n*log(n)) instead of O(n^2).

It is based on Matlab's sort being stable and the positions being returned. Assume x is sorted, if you then sort [a(:); x(:)], the new position of x(1) will be 1 plus the number of elements of a smaller than or equal to x(1). The new position of x(2) will be 2 plus the number of elements of a smaller than or equal to x(2). So the number of elements in a that are smaller than x(i) equals the new position of x(i) minus i.

function aSmallerThanxMat = aSmallerThanx(a, x)

%%// Remember dimension of x

dimX = size(x);

%%// Sort x and remember original ordering Ix

[xsorted, Ix] = sort(x(:));

%%// How many as are smaller than sortedX

[~,Iaxsorted] = sort([a(:); xsorted(:)]);

Iaxsortedinv(Iaxsorted) = 1:numel(Iaxsorted);

aSmallerThanSortedx = Iaxsortedinv(numel(a)+1:end)-(1:numel(xsorted));

%%// Get original ordering of x back

aSmallerThanx(Ix) = aSmallerThanSortedx;

%%// Reshape x to original array size

aSmallerThanxMat = reshape(aSmallerThanx, dimX);

This approach might be a bit more difficult to grasp, but for large vectors, you will get a considerable speedup.

Similar approach using sort and for:

The concept of this approach is very similar, but more traditional using loops:

First we sort x and a. Then we step through the x(i_x). If x(i_x) it is larger than the current a(i_a), we increment i_a. If it is smaller than the current i_a, then i_a-1 is the number of elements in a that are smaller or equal to x(i_x).

function aSmallerThanx = aSmallerThanx(a, x)

asorted = sort(a(:));

[xsorted, Ix] = sort(x(:));

aSmallerThanx = zeros(size(x));

i_a = 1;

for i_x = 1:numel(xsorted)

for i_a = i_a:numel(asorted)+1

if i_a>numel(asorted) || xsorted(i_x)<asorted(i_a)

aSmallerThanx(Ix(i_x)) = i_a-1;

break

end

end

end

Approach using histc:

This one is even better: It creates bins in between the values of x, counts the values of a, that fall into each bin, then sums them up beginning from the left.

function result = aSmallerThanx(a, x)

[xsorted, Ix] = sort(x(:));

bincounts = histc(a, [-Inf; xsorted]);

result(Ix) = cumsum(bincounts(1:end-1));

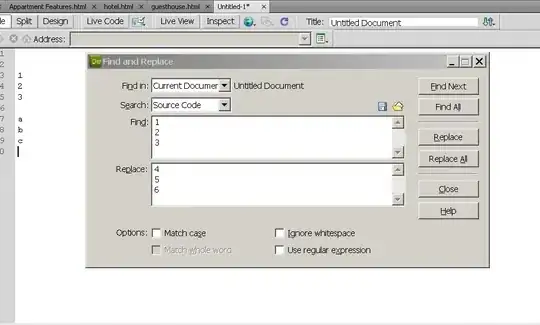

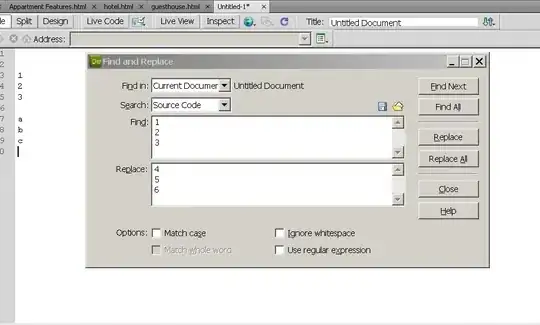

Comparison:

Here is a runtime comparison of your approach, Ander Biguri's for+sum loop approach, mehmet's bsxfun approach, the two approaches using sort and the histc approach:

For vectors of length 16384, the histc approach is 2300 times faster than the original approach.