I'm merging videos using AVMutableComposition with the code below:

    - (void)MergeAndSave_internal{

        AVMutableComposition *composition = [AVMutableComposition composition];
        AVMutableCompositionTrack *compositionVideoTrack = [composition addMutableTrackWithMediaType:AVMediaTypeVideo preferredTrackID:kCMPersistentTrackID_Invalid];
        AVMutableCompositionTrack *compositionAudioTrack = [composition addMutableTrackWithMediaType:AVMediaTypeAudio preferredTrackID:kCMPersistentTrackID_Invalid];

        AVMutableVideoComposition *videoComposition = [AVMutableVideoComposition videoComposition];
        videoComposition.frameDuration = CMTimeMake(1,30);
        videoComposition.renderScale = 1.0;

        AVMutableVideoCompositionInstruction *instruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];
        AVMutableVideoCompositionLayerInstruction *layerInstruction = [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:compositionVideoTrack];

        NSLog(@"%@",videoPathArray);

        float time = 0;
        CMTime startTime = kCMTimeZero;

        for (int i = 0; i<videoPathArray.count; i++) {

            AVURLAsset *sourceAsset = [AVURLAsset URLAssetWithURL:[NSURL fileURLWithPath:[videoPathArray objectAtIndex:i]] options:[NSDictionary dictionaryWithObject:[NSNumber numberWithBool:YES] forKey:AVURLAssetPreferPreciseDurationAndTimingKey]];

            NSError *error = nil;
            BOOL ok = NO;

            AVAssetTrack *sourceVideoTrack = [[sourceAsset tracksWithMediaType:AVMediaTypeVideo] objectAtIndex:0];
            AVAssetTrack *sourceAudioTrack = [[sourceAsset tracksWithMediaType:AVMediaTypeAudio] objectAtIndex:0];

            CGSize temp = CGSizeApplyAffineTransform(sourceVideoTrack.naturalSize, sourceVideoTrack.preferredTransform);
            CGSize size = CGSizeMake(fabsf(temp.width), fabsf(temp.height));
            CGAffineTransform transform = sourceVideoTrack.preferredTransform;

            videoComposition.renderSize = sourceVideoTrack.naturalSize;

            if (size.width > size.height) {
                [layerInstruction setTransform:transform atTime:CMTimeMakeWithSeconds(time, 30)];
            } else {
                float s = size.width/size.height;
                CGAffineTransform newe = CGAffineTransformConcat(transform, CGAffineTransformMakeScale(s,s));
                float x = (size.height - size.width*s)/2;
                CGAffineTransform newer = CGAffineTransformConcat(newe, CGAffineTransformMakeTranslation(x, 0));
                [layerInstruction setTransform:newer atTime:CMTimeMakeWithSeconds(time, 30)];
            }

            if(i==0){
                [compositionVideoTrack setPreferredTransform:sourceVideoTrack.preferredTransform];
            }

            ok = [compositionVideoTrack insertTimeRange:CMTimeRangeMake(kCMTimeZero, [sourceAsset duration]) ofTrack:sourceVideoTrack atTime:startTime error:&error];
            ok = [compositionAudioTrack insertTimeRange:CMTimeRangeMake(kCMTimeZero, [sourceAsset duration]) ofTrack:sourceAudioTrack atTime:startTime error:nil];

            if (!ok) {
                {
                    [radialView4 setHidden:YES];
                    NSLog(@"Export failed: %@", [[self.exportSession error] localizedDescription]);
                    UIAlertView *alert = [[UIAlertView alloc] initWithTitle:@"Error" message:@"Something Went Wrong :(" delegate:nil cancelButtonTitle:@"Ok" otherButtonTitles: nil, nil];
                    [alert show];
                    [radialView4 setHidden:YES];
                    break;
                }
            }

            startTime = CMTimeAdd(startTime, [sourceAsset duration]);
        }

        instruction.layerInstructions = [NSArray arrayWithObject:layerInstruction];
        instruction.timeRange = compositionVideoTrack.timeRange;
        videoComposition.instructions = [NSArray arrayWithObject:instruction];

        NSArray *paths = NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES);
        NSString *documentsDirectory = [paths objectAtIndex:0];
        NSString *myPathDocs = [documentsDirectory stringByAppendingPathComponent:
                                [NSString stringWithFormat:@"RampMergedVideo.mov"]];
        unlink([myPathDocs UTF8String]);
        NSURL *url = [NSURL fileURLWithPath:myPathDocs];

        AVAssetExportSession *exporter = [[AVAssetExportSession alloc] initWithAsset:composition
                                                                          presetName:AVAssetExportPreset1280x720];
        exporter.outputURL=url;
        exporter.outputFileType = AVFileTypeQuickTimeMovie;
        exporter.shouldOptimizeForNetworkUse = YES;

        [exporter exportAsynchronouslyWithCompletionHandler:^{
            dispatch_async(dispatch_get_main_queue(), ^{
                switch ([exporter status]) {
                    case AVAssetExportSessionStatusFailed:
                        NSLog(@"Export failed: %@", [exporter error]);
                        break;
                    case AVAssetExportSessionStatusCancelled:
                        NSLog(@"Export canceled");
                        break;
                    case AVAssetExportSessionStatusCompleted:{
                        NSLog(@"Export successfully");
                    }
                    default:
                        break;
                }
                if (exporter.status != AVAssetExportSessionStatusCompleted){
                    NSLog(@"Retry export");
                }
            });
        }];
    }

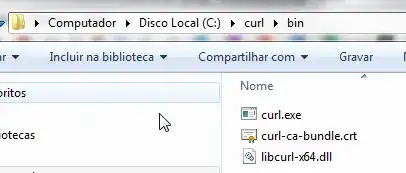

But video looks cracked while saving to system and playing in quick time player. I think that the problem in CFAffline transform. Can anyone please advice ?

Here's the cracked screen in the middle of the video :
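
To check whether the arithmetic in the portrait branch (the `else` case that scales by `s` and translates by `x`) is at fault, here is a small standalone sketch of the same calculation in plain C, which also compiles as Objective-C. The `Affine` and `Rect` types and the helper names are my own stand-ins, not Core Graphics API. The 1920x1080 natural size and the 90° rotation `{0, 1, -1, 0, 1080, 0}` used in the note below are assumed example values, not numbers logged from my clips.

```c
#include <assert.h>

/* Stand-in for CGAffineTransform (hypothetical type, not the Core Graphics one).
   A point maps as x' = a*x + c*y + tx, y' = b*x + d*y + ty. */
typedef struct { double a, b, c, d, tx, ty; } Affine;
typedef struct { double x, y, w, h; } Rect;

static double abs_d(double v) { return v < 0 ? -v : v; }

/* Same order as CGAffineTransformConcat(t1, t2): apply t1 first, then t2. */
static Affine affine_concat(Affine t1, Affine t2) {
    Affine r;
    r.a  = t1.a * t2.a + t1.b * t2.c;
    r.b  = t1.a * t2.b + t1.b * t2.d;
    r.c  = t1.c * t2.a + t1.d * t2.c;
    r.d  = t1.c * t2.b + t1.d * t2.d;
    r.tx = t1.tx * t2.a + t1.ty * t2.c + t2.tx;
    r.ty = t1.tx * t2.b + t1.ty * t2.d + t2.ty;
    return r;
}

/* Bounding box of the rectangle (0, 0, w, h) after applying t. */
static Rect affine_bounds(Affine t, double w, double h) {
    double xs[4] = { t.tx, t.a * w + t.tx, t.c * h + t.tx, t.a * w + t.c * h + t.tx };
    double ys[4] = { t.ty, t.b * w + t.ty, t.d * h + t.ty, t.b * w + t.d * h + t.ty };
    double min_x = xs[0], max_x = xs[0], min_y = ys[0], max_y = ys[0];
    for (int i = 1; i < 4; i++) {
        if (xs[i] < min_x) min_x = xs[i];
        if (xs[i] > max_x) max_x = xs[i];
        if (ys[i] < min_y) min_y = ys[i];
        if (ys[i] > max_y) max_y = ys[i];
    }
    Rect r = { min_x, min_y, max_x - min_x, max_y - min_y };
    return r;
}

/* Mirrors the portrait branch of my loop: scale the preferred transform by
   s = displayed width / displayed height, then shift right by x. */
static Affine portrait_fit(Affine preferred, double natural_w, double natural_h) {
    /* Displayed size, like fabsf() of CGSizeApplyAffineTransform(naturalSize, ...). */
    double disp_w = abs_d(preferred.a * natural_w + preferred.c * natural_h);
    double disp_h = abs_d(preferred.b * natural_w + preferred.d * natural_h);
    assert(disp_h > 0);
    double s = disp_w / disp_h;
    Affine scale = { s, 0, 0, s, 0, 0 };
    Affine scaled = affine_concat(preferred, scale);
    double x = (disp_h - disp_w * s) / 2.0;
    Affine shift = { 1, 0, 0, 1, x, 0 };
    return affine_concat(scaled, shift);
}
```

With the assumed values, `affine_bounds(portrait_fit(rot90, 1920, 1080), 1920, 1080)` gives a rectangle at x = 656.25, y = 0 with width 607.5 and height 1080, which is centered in a 1920x1080 frame. So this branch looks self-consistent for that one case at least, and it does not by itself explain the cracked frames; the cause may be elsewhere in how the transforms are applied.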