After some research and a lot of trial and error, I have reached the point where I can construct a spectrogram, though I think parts of it are right and parts are wrong.

1. First, I read the .wav file into a byte array and extract only the data part.

2. I convert the byte array into a double array by averaging the left and right channels. Each sample of one channel takes 2 bytes, so every 4 bytes become 1 double.

3. For a window size that is a power of 2, I apply an FFT to the window and get the amplitude in the frequency domain. Each result is one vertical strip of the spectrogram image.

4. I repeat this with the same window size and an overlap across the whole data to obtain the spectrogram.
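As a point of reference for steps 3 and 4, here is a minimal sketch of sliding an overlapping window over the samples and turning each window into one column of magnitudes. It is not the FFT class used in this post: it swaps in a naive O(N^2) DFT so that it is self-contained, and the names `SpectrogramSketch`, `magnitudes`, `spectrogram`, and `peakBin` are hypothetical. It keeps only bins 0 to size/2, since for real-valued input the remaining bins mirror these.

```java
class SpectrogramSketch {

    // Magnitudes of bins 0..size/2 for one window, using a naive DFT
    // (a stand-in for an FFT; same result, just slower).
    static double[] magnitudes(double[] samples, int start, int size) {
        double[] mags = new double[size / 2 + 1];
        for (int k = 0; k < mags.length; k++) {
            double re = 0.0;
            double im = 0.0;
            for (int n = 0; n < size; n++) {
                double angle = 2.0 * Math.PI * k * n / size;
                re += samples[start + n] * Math.cos(angle);
                im -= samples[start + n] * Math.sin(angle);
            }
            mags[k] = Math.sqrt(re * re + im * im);
        }
        return mags;
    }

    // One column per window position; consecutive windows start
    // `step` samples apart, so they overlap by (size - step) samples.
    static double[][] spectrogram(double[] samples, int size, int step) {
        int columns = (samples.length - size) / step + 1;
        double[][] result = new double[columns][];
        for (int i = 0; i < columns; i++) {
            result[i] = magnitudes(samples, i * step, size);
        }
        return result;
    }

    // Index of the largest magnitude in one column.
    static int peakBin(double[] mags) {
        int best = 0;
        for (int k = 1; k < mags.length; k++) {
            if (mags[k] > mags[best]) {
                best = k;
            }
        }
        return best;
    }
}
```

With a window of 64 samples and a step of 16, a sine that completes exactly 8 cycles per window should peak at bin 8 in every column. Bin `k` corresponds to a frequency of `k * SR / size`.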

The following is the code for reading the .wav file into a double array:

    import java.io.IOException;
    import java.nio.ByteBuffer;
    import java.nio.file.Files;
    import java.nio.file.Path;
    import java.nio.file.Paths;
    import java.util.Arrays;

    public class readWAV2Array {

        private byte[] entireFileData;

        //SR = sampling rate
        public double getSR(){
            ByteBuffer wrapped = ByteBuffer.wrap(Arrays.copyOfRange(entireFileData, 24, 28)); // big-endian by default
            double SR = wrapped.order(java.nio.ByteOrder.LITTLE_ENDIAN).getInt();
            return SR;
        }

        public readWAV2Array(String filepath, boolean print_info) throws IOException{
            Path path = Paths.get(filepath);
            this.entireFileData = Files.readAllBytes(path);

            if (print_info){
                //extract format
                String format = new String(Arrays.copyOfRange(entireFileData, 8, 12), "UTF-8");

                //extract number of channels
                int noOfChannels = entireFileData[22];
                String noOfChannels_str;
                if (noOfChannels == 2)
                    noOfChannels_str = "2 (stereo)";
                else if (noOfChannels == 1)
                    noOfChannels_str = "1 (mono)";
                else
                    noOfChannels_str = noOfChannels + "(more than 2 channels)";

                //extract sampling rate (SR)
                int SR = (int) this.getSR();

                //extract bits per sample (BPS, i.e. bit depth)
                int BPS = entireFileData[34];

                System.out.println("---------------------------------------------------");
                System.out.println("File path: " + filepath);
                System.out.println("File format: " + format);
                System.out.println("Number of channels: " + noOfChannels_str);
                System.out.println("Sampling rate: " + SR);
                System.out.println("Bit depth: " + BPS);
                System.out.println("---------------------------------------------------");
            }
        }

        public double[] getByteArray (){
            byte[] data_raw = Arrays.copyOfRange(entireFileData, 44, entireFileData.length);
            int totalLength = data_raw.length;

            //declare double array for mono
            int new_length = totalLength/4;
            double[] data_mono = new double[new_length];

            double left, right;
            for (int i = 0; i < new_length; i++){
                left = ((data_raw[i] & 0xff) << 8) | (data_raw[i+1] & 0xff);
                right = ((data_raw[i+2] & 0xff) << 8) | (data_raw[i+3] & 0xff);
                data_mono[i] = (left+right)/2.0;
            }
            return data_mono;
        }
    }
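For comparison, here is a minimal sketch of the conversion described in step 2, assuming the data part holds interleaved 16-bit signed little-endian stereo PCM, which is the usual layout for uncompressed .wav data. Under that assumption frame `i` starts at byte offset `4 * i`, the low byte of each sample comes first, and samples are signed. The class and method names are hypothetical, and this is not necessarily how the code above should be changed.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

class PcmDecodeSketch {

    // Converts interleaved 16-bit signed little-endian stereo PCM
    // into mono samples by averaging the left and right channels.
    static double[] stereo16ToMono(byte[] pcm) {
        ByteBuffer buf = ByteBuffer.wrap(pcm).order(ByteOrder.LITTLE_ENDIAN);
        int frames = pcm.length / 4; // 2 channels x 2 bytes per sample
        double[] mono = new double[frames];
        for (int i = 0; i < frames; i++) {
            short left = buf.getShort(4 * i);      // absolute read at the frame start
            short right = buf.getShort(4 * i + 2); // right channel follows the left
            mono[i] = (left + right) / 2.0;
        }
        return mono;
    }
}
```

Reading through `getShort` keeps the sign of each sample, so silence sits around 0 rather than around the middle of an unsigned range.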

The following code is the main program:

    import java.awt.Color;
    import java.awt.image.BufferedImage;
    import java.io.File;
    import java.io.IOException;
    import java.util.Arrays;
    import javax.imageio.ImageIO;

    public class App {

        public static Color getColor(double power) {
            double H = power * 0.4; // Hue (note 0.4 = green)
            double S = 1.0; // Saturation
            double B = 1.0; // Brightness
            return Color.getHSBColor((float)H, (float)S, (float)B);
        }

        public static void main(String[] args) {
            // TODO Auto-generated method stub
            String filepath = "audio_work/Sine_Sweep_Full_Spectrum_20_Hz_20_kHz_audiocheck.wav";
            try {
                //get raw double array containing .WAV data
                readWAV2Array audioTest = new readWAV2Array(filepath, true);
                double[] rawData = audioTest.getByteArray();
                int length = rawData.length;

                //initialize parameters for FFT
                int WS = 2048; //WS = window size
                int OF = 8; //OF = overlap factor
                int windowStep = WS/OF;

                //calculate FFT parameters
                double SR = audioTest.getSR();
                double time_resolution = WS/SR;
                double frequency_resolution = SR/WS;
                double highest_detectable_frequency = SR/2.0;
                double lowest_detectable_frequency = 5.0*SR/WS;

                System.out.println("time_resolution: " + time_resolution*1000 + " ms");
                System.out.println("frequency_resolution: " + frequency_resolution + " Hz");
                System.out.println("highest_detectable_frequency: " + highest_detectable_frequency + " Hz");
                System.out.println("lowest_detectable_frequency: " + lowest_detectable_frequency + " Hz");

                //initialize plotData array
                int nX = (length-WS)/windowStep;
                int nY = WS;
                double[][] plotData = new double[nX][nY];

                //apply FFT and find MAX and MIN amplitudes
                double maxAmp = Double.MIN_VALUE;
                double minAmp = Double.MAX_VALUE;
                double amp_square;
                double[] inputImag = new double[length];

                for (int i = 0; i < nX; i++){
                    Arrays.fill(inputImag, 0.0);
                    double[] WS_array = FFT.fft(Arrays.copyOfRange(rawData, i*windowStep, i*windowStep+WS), inputImag, true);
                    for (int j = 0; j < nY; j++){
                        amp_square = (WS_array[2*j]*WS_array[2*j]) + (WS_array[2*j+1]*WS_array[2*j+1]);
                        if (amp_square == 0.0){
                            plotData[i][j] = amp_square;
                        }
                        else{
                            plotData[i][j] = 10 * Math.log10(amp_square);
                        }

                        //find MAX and MIN amplitude
                        if (plotData[i][j] > maxAmp)
                            maxAmp = plotData[i][j];
                        else if (plotData[i][j] < minAmp)
                            minAmp = plotData[i][j];
                    }
                }

                System.out.println("---------------------------------------------------");
                System.out.println("Maximum amplitude: " + maxAmp);
                System.out.println("Minimum amplitude: " + minAmp);
                System.out.println("---------------------------------------------------");

                //Normalization
                double diff = maxAmp - minAmp;
                for (int i = 0; i < nX; i++){
                    for (int j = 0; j < nY; j++){
                        plotData[i][j] = (plotData[i][j]-minAmp)/diff;
                    }
                }

                //plot image
                BufferedImage theImage = new BufferedImage(nX, nY, BufferedImage.TYPE_INT_RGB);
                double ratio;
                for(int x = 0; x<nX; x++){
                    for(int y = 0; y<nY; y++){
                        ratio = plotData[x][y];
                        //theImage.setRGB(x, y, new Color(red, green, 0).getRGB());
                        Color newColor = getColor(1.0-ratio);
                        theImage.setRGB(x, y, newColor.getRGB());
                    }
                }
                File outputfile = new File("saved.png");
                ImageIO.write(theImage, "png", outputfile);
            } catch (IOException e) {
                // TODO Auto-generated catch block
                e.printStackTrace();
            }
        }
    }
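One detail of the max/min search that may be worth double-checking: in Java, `Double.MIN_VALUE` is the smallest positive double (about 4.9e-324), not the most negative one, so a maximum that starts there cannot end up below zero even if every dB value is negative. The sketch below shows one way to find the range and normalize to [0, 1], starting from the infinities and testing minimum and maximum independently. The names `RangeSketch`, `minMax`, and `normalize` are hypothetical.

```java
class RangeSketch {

    // Returns {min, max} over all entries of the 2D array.
    static double[] minMax(double[][] values) {
        double min = Double.POSITIVE_INFINITY;
        double max = Double.NEGATIVE_INFINITY;
        for (double[] row : values) {
            for (double v : row) {
                // Two separate checks, so the first value updates both bounds.
                if (v < min) min = v;
                if (v > max) max = v;
            }
        }
        return new double[] {min, max};
    }

    // Rescales every entry in place to the range [0, 1].
    static void normalize(double[][] values) {
        double[] range = minMax(values);
        double diff = range[1] - range[0];
        for (double[] row : values) {
            for (int j = 0; j < row.length; j++) {
                // Guard against a flat input, where max equals min.
                row[j] = (diff == 0.0) ? 0.0 : (row[j] - range[0]) / diff;
            }
        }
    }
}
```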

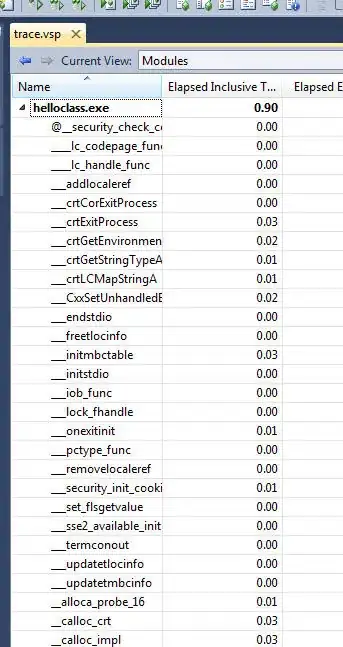

However, the image I obtain from .wav playing sweeping sound from 20-20kHz is like this:

The color show the intensity of sound red(High)-->green(Low)

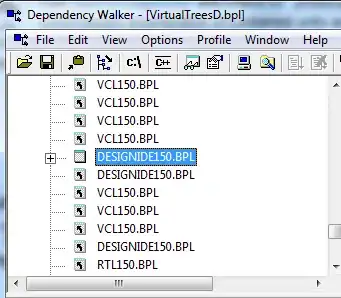

By right, it should look something like the picture below:

I would really appreciate any corrections, improvements, or suggestions for my project. Thank you in advance for commenting on my question.