A mountain of text files (of types A, B and C) is sitting on my chest, slowly, coldly refusing me desperately needed air. Over the years each type spec has had enhancements such that yesterday's typeA file has many more properties than last year's typeA. To build a parser that can handle the decade's long evolution of these file types it makes sense to inspect all 14 million of them iteratively, calmly, but before dying beneath their crushing weight.

I built a running counter such that every time I see properties (familiar or not) I add 1 to its tally. The sqlite tally board looks like this:

In the special event I see an unfamiliar property I add them to the tally. On a typeA file that looks like:

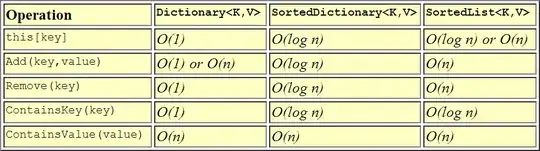

I've got this system down! But it's slow @ 3M files/36 hours in one process. Originally I was using this trick to pass sqlite a list of properties needing increment.

placeholder= '?' # For SQLite. See DBAPI paramstyle.

placeholders= ', '.join(placeholder for dummy_var in properties)

sql = """UPDATE tally_board

SET %s = %s + 1

WHERE property IN (%s)""" %(type_name, type_name, placeholders)

cursor.execute(sql, properties)

I learned that's a bad idea because

sqlitestring search is much slower than indexed search- several hundreds of properties (some 160 characters long) make for really long sql queries

- using

%sinstead of?is bad security practice... (not a concern ATM)

A "fix" was to maintain a script side property-rowid hash of the tally used in this loop:

- Read file for

new_properties - Read

tally_boardforrowid,property - Generate script side

client_hashfrom 2's read - Write rows to

tally_boardfor everynew_propertynot inproperty(nothing incremented yet). Updateclient_hashwith new properties - Lookup

rowidfor every row innew_propertiesusing theclient_hash - Write increment to every

rowid(now a proxy forproperty) totally_board

Step 6. looks like

sql = """UPDATE tally_board

SET %s = %s + 1

WHERE rowid IN %s""" %(type_name, type_name, tuple(target_rows))

cur.execute

The problem with this is

- It's still slow!

- It manifests a race condition in parallel processing that introduces duplicates in the

propertycolumn whenever threadA starts step 2 right before threadB completes step 6.

A solution to the race condition is to give steps 2-6 an exclusive lock on the db though it doesn't look like reads can get those Lock A Read.

Another attempt uses a genuine UPSERT to increment preexisting property rows AND insert (and increment) new property rows in one fell swoop.

There may be luck in something like this but I'm unsure how to rewrite it to increment the tally.