I am currently an undergrad in CS, and I am continually amazed with how powerful python is. I recently did a small experiment to test the cost of forming lists with comprehension versus a standalone function. For example:

def make_list_of_size(n):

retList = []

for i in range(n):

retList.append(0)

return retList

creates a list of size n containing zeros.

It is well known that this function is O(n). I wanted to explore the growth of the following:

def comprehension(n):

return [0 for i in range(n)]

Which makes the same list.

let us explore!

This is the code I used for timing, and note the order of function calls (which way did I make the list first). I made the list with a standalone function first, and then with comprehension. I have yet to learn how to turn off garbage collection for this experiment, so, there is some inherent measurement error, brought about when garbage collection kicks in.

'''

file: listComp.py

purpose: to test the cost of making a list with comprehension

versus a standalone function

'''

import time as T

def get_overhead(n):

tic = T.time()

for i in range(n):

pass

toc = T.time()

return toc - tic

def make_list_of_size(n):

aList = [] #<-- O(1)

for i in range(n): #<-- O(n)

aList.append(n) #<-- O(1)

return aList #<-- O(1)

def comprehension(n):

return [n for i in range(n)] #<-- O(?)

def do_test(size_i,size_f,niter,file):

delta = 100

size = size_i

while size <= size_f:

overhead = get_overhead(niter)

reg_tic = T.time()

for i in range(niter):

reg_list = make_list_of_size(size)

reg_toc = T.time()

comp_tic = T.time()

for i in range(niter):

comp_list = comprehension(size)

comp_toc = T.time()

#--------------------

reg_cost_per_iter = (reg_toc - reg_tic - overhead)/niter

comp_cost_pet_iter = (comp_toc - comp_tic - overhead)/niter

file.write(str(size)+","+str(reg_cost_per_iter)+

","+str(comp_cost_pet_iter)+"\n")

print("SIZE: "+str(size)+ " REG_COST = "+str(reg_cost_per_iter)+

" COMP_COST = "+str(comp_cost_pet_iter))

if size == 10*delta:

delta *= 10

size += delta

def main():

fname = input()

file = open(fname,'w')

do_test(100,1000000,2500,file)

file.close()

main()

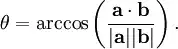

I did three tests. Two of them were up to list size 100000, the third was up to 1*10^6

See Plots:

Overlay with NO ZOOM

I found these results to be intriguing. Although both methods have a big-O notation of O(n), the cost, with respect to time, is less for comprehension for making the same list.

I have more information to share, including the same test done with the list made with comprehension first, and then with the standalone function.

I have yet to run a test without garbage collection.