I am trying to convert a 4:3 frame to a 16:9 frame in a video capture pipeline. The converted frame needs further processing, so I need to keep it as a CVImageBufferRef.

I looked at this Stack Overflow thread and borrowed some ideas from it:

iOS - Scale and crop CMSampleBufferRef/CVImageBufferRef

Here is what I did:

    int cropX0 = 0, cropY0 = 60, cropHeight = 360, cropWidth = 640, outWidth = 640, outHeight = 360;

    //get CVPixel buffer from CMSampleBuffer
    CVPixelBufferRef imageBuffer = CMSampleBufferGetImageBuffer(cmSampleBuffer);
    CVPixelBufferLockBaseAddress(imageBuffer,0);
    void *baseAddress = CVPixelBufferGetBaseAddress(imageBuffer);
    size_t bytesPerRow = CVPixelBufferGetBytesPerRow(imageBuffer);
    size_t startpos = cropY0 * bytesPerRow;
    void* cropStartAddr = ((char*)baseAddress) + startpos;

    CVPixelBufferRef cropPixelBuffer = NULL;
    int status = CVPixelBufferCreateWithBytes(kCFAllocatorDefault,
                                              outWidth,
                                              outHeight,
                                              CVPixelBufferGetPixelFormatType(imageBuffer),
                                              cropStartAddr,
                                              bytesPerRow,
                                              NULL,
                                              0,
                                              NULL,
                                              &cropPixelBuffer);
    if(status == 0){
        OSStatus result = 0;
    }
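
For reference, the crop values above can be derived as a centered 16:9 window inside the source frame. This is a minimal sketch in plain C, assuming a 640x480 source frame (the frame size is not stated above and is only inferred from the constants). `CropRect` and `centered_crop` are hypothetical names used for illustration.

```c
#include <assert.h>

/* Hypothetical helper type: a crop rectangle in pixels. */
typedef struct {
    int x, y, width, height;
} CropRect;

/* Returns the largest centered rectangle with aspect ratio aspectW:aspectH
   that fits inside a srcW x srcH frame. Coordinates and sizes are rounded
   down to even values, which 4:2:0 chroma subsampling would require. */
static CropRect centered_crop(int srcW, int srcH, int aspectW, int aspectH)
{
    assert(srcW > 0 && srcH > 0 && aspectW > 0 && aspectH > 0);

    CropRect r;
    /* First try to keep the full width. */
    r.width = srcW;
    r.height = srcW * aspectH / aspectW;
    if (r.height > srcH) {
        /* Source is wider than the target ratio: keep the full height. */
        r.height = srcH;
        r.width = srcH * aspectW / aspectH;
    }
    r.width &= ~1;
    r.height &= ~1;
    r.x = ((srcW - r.width) / 2) & ~1;
    r.y = ((srcH - r.height) / 2) & ~1;
    return r;
}
```

Under that assumption, `centered_crop(640, 480, 16, 9)` gives x = 0, y = 60, width = 640, height = 360, which matches the constants used above.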

But after this code runs, if I inspect cropPixelBuffer in Xcode, it looks like a corrupt image.

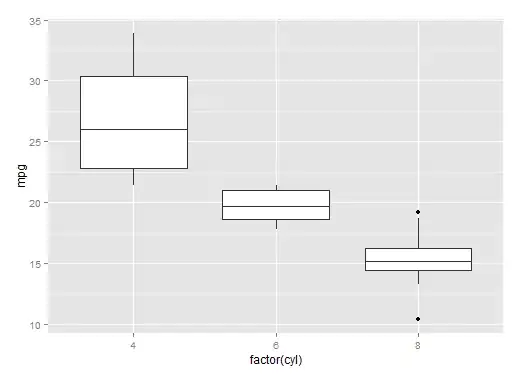

The original picture looks like this

I think this could be because the pixel format is NV12 (YUV). Thanks for your help.
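
To make the NV12 suspicion concrete, here is a minimal sketch in plain C rather than Core Video code. It assumes an 8-bit bi-planar 4:2:0 layout: a full-resolution Y plane plus a separate half-height plane of interleaved Cb/Cr bytes, each with its own base address and stride. `crop_nv12` and its parameters are hypothetical names. In Core Video the per-plane values would presumably come from `CVPixelBufferGetBaseAddressOfPlane` and `CVPixelBufferGetBytesPerRowOfPlane`. The point is that a crop has to offset both planes separately, which a single base address plus one row offset cannot express.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Copies a crop rectangle out of an NV12 frame into tightly packed
   destination planes. dstY must hold cropW * cropH bytes and dstUV
   must hold cropW * cropH / 2 bytes. */
static void crop_nv12(const uint8_t *srcY, size_t srcYStride,
                      const uint8_t *srcUV, size_t srcUVStride,
                      int cropX, int cropY, int cropW, int cropH,
                      uint8_t *dstY, uint8_t *dstUV)
{
    /* Chroma is subsampled 2x2, so the rectangle must be even-aligned. */
    assert(cropX % 2 == 0 && cropY % 2 == 0);
    assert(cropW % 2 == 0 && cropH % 2 == 0);

    /* Luma plane: one byte per pixel, cropH rows. */
    for (int row = 0; row < cropH; row++) {
        memcpy(dstY + (size_t)row * cropW,
               srcY + (size_t)(cropY + row) * srcYStride + cropX,
               (size_t)cropW);
    }

    /* Chroma plane: one Cb,Cr byte pair per 2x2 pixel block. That is
       cropH / 2 rows of cropW bytes, starting at chroma row cropY / 2
       and byte offset cropX (cropX / 2 pairs of 2 bytes each). */
    for (int row = 0; row < cropH / 2; row++) {
        memcpy(dstUV + (size_t)row * cropW,
               srcUV + (size_t)(cropY / 2 + row) * srcUVStride + cropX,
               (size_t)cropW);
    }
}
```

This sketch copies the pixels instead of wrapping the original memory, so the cropped planes stay valid after the source buffer is unlocked. Whether a zero-copy variant is possible with per-plane pointers is not something this sketch attempts to show.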