I am trying to fit an extreme gradient boosting model in R using the xgboost package.

Before writing this question, I searched both Stack Overflow and elsewhere, but the information I found did not solve my problem.

These are the similar posts I found, but none of them has been resolved clearly:

human readable rules from xgboost in R

Why is xgboost not plotting my trees?

My problem is with the function xgb.plot.tree. I built my extreme gradient boosting model with the xgboost() function and created a matrix showing the importance of the features in the model with xgb.importance(), but when I try to plot a boosted tree model, the function crashes.

Below is my R code:

    model_xgb <- xgboost(data=dtrain$data,
                         label=dtrain$label,
                         booster="gbtree",
                         max_depth=6,
                         eta=0.3,
                         nthread=2,
                         nrounds = 150,
                         verbose=0,
                         eval_metrics=list("error"),
                         objective="binary:logistic")

    # Calculate feature importance
    imp <- xgb.importance(feature_names=dtrain$data@Dimnames[[2]],
                          model=model_xgb)
    print(imp)

    # Display tree number 1
    xgb.plot.tree(feature_names=dtrain$data@Dimnames[[2]],
                  model=model_xgb,
                  n_first_tree=1)

Following the answer from @ArriveW, I added a call to xgb.dump() and modified my code as below:

    dump_xgb <- xgb.dump(model = model_xgb)

    xgb.plot.tree(feature_names=dtrain$data@Dimnames[[2]],
                  model=model_xgb,
                  n_first_tree=5)

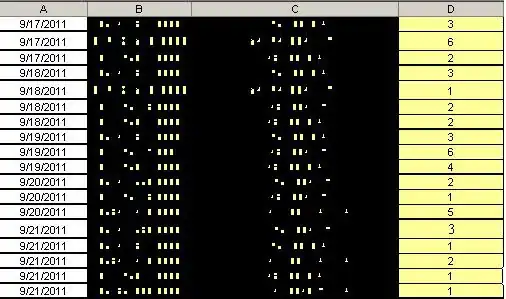

But despite this, I do not get a chart and this is the result
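To separate a problem in the model itself from a problem in the plotting step, one possible check is to look at the trees in tabular form, which does not involve any graphics. The sketch below is only an illustration under assumptions: it uses the agaricus example data shipped with xgboost in place of my own dtrain, trains a deliberately tiny model with xgb.train(), and the names dtrain_demo, bst_demo and tree_dt are made up for this example. As far as I understand, xgb.plot.tree() also relies on the DiagrammeR package being installed, so a table that prints fine here would point toward the plotting side.

```r
library(xgboost)

# Example data bundled with xgboost; an assumption standing in for my own dtrain.
data(agaricus.train, package = "xgboost")
dtrain_demo <- xgb.DMatrix(data = agaricus.train$data,
                           label = agaricus.train$label)

# Deliberately small model (2 rounds, depth 2) so the output stays readable.
bst_demo <- xgb.train(params = list(objective = "binary:logistic",
                                    max_depth = 2,
                                    eta = 0.3,
                                    nthread = 2),
                      data = dtrain_demo,
                      nrounds = 2)

# Parse the boosted trees into a table: one row per node (split or leaf).
tree_dt <- xgb.model.dt.tree(model = bst_demo)

# Show only the first tree, whatever index the installed version starts from.
print(tree_dt[tree_dt$Tree == min(tree_dt$Tree), ])
```

If the table prints with one row per node, the model contains parsable trees, and the remaining suspects are the arguments passed to xgb.plot.tree() or the graphics dependency.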

On GitHub you can find the reproducible example:

Any suggestions, please?