It’s more complicated than that. While it’s true that a Timestamp is a point in time, it also tends to have a dual nature where it sometimes pretends to be a date and time of day instead.

As you probably already know, the Timestamp class is poorly designed and long outdated, so it’s best to avoid it completely if you can. If you are getting a Timestamp from a legacy API, you are doing the right thing: converting it immediately to a type from java.time, the modern Java date and time API.

Timestamp is a point in time

To convert a point in time (however represented) to a date, you need to decide on a time zone. At any given moment it is never the same date in all time zones, so the choice of time zone always matters. One correct conversion would be:

```java
ZoneId zone = ZoneId.of("Africa/Cairo");

LocalDate date = timestamp.toInstant().atZone(zone).toLocalDate();
```
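To see that the choice of zone matters, here is a small, self-contained sketch that applies the same conversion to one fixed point in time in two zones. The instant and the second zone (America/Los_Angeles) are my own picks for illustration only.

```java
import java.sql.Timestamp;
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneId;

class TimestampToDate {

    // Treats the Timestamp as a point in time and finds the date in the given zone
    static LocalDate toDate(Timestamp timestamp, ZoneId zone) {
        return timestamp.toInstant().atZone(zone).toLocalDate();
    }

    public static void main(String[] args) {
        // Late evening in UTC on 15 March 2021 (illustrative value)
        Timestamp timestamp = Timestamp.from(Instant.parse("2021-03-15T23:30:00Z"));

        System.out.println(toDate(timestamp, ZoneId.of("Africa/Cairo")));        // 2021-03-16
        System.out.println(toDate(timestamp, ZoneId.of("America/Los_Angeles"))); // 2021-03-15
    }
}
```

Same Timestamp, two different dates: which one is "correct" depends entirely on which zone you decide on.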

The Timestamp class was designed for use with your SQL database. If your datatype in SQL is timestamp with time zone, then it unambiguously denotes a point in time, and you need to treat it as a point in time as just described. Even though in many database engines timestamp with time zone really just means “timestamp in UTC”, it’s still a point in time.

And then again: sometimes to be thought of as date and time of day

From the documentation of Timestamp:

> A Timestamp also provides formatting and parsing operations to support the JDBC escape syntax for timestamp values.

The JDBC escape syntax is defined as

> `yyyy-mm-dd hh:mm:ss.fffffffff`, where `fffffffff` indicates nanoseconds.

This doesn’t define any point in time. It’s a mere date and time of day. What the documentation doesn’t even tell you is that the date and time of day is understood in the default time zone of the JVM.
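A minimal sketch of that hidden dependency: parsing the very same escape-syntax string with Timestamp.valueOf under two different JVM default time zones yields two different points in time. The zones, the string and the helper name are assumptions for illustration; the helper restores the original default afterwards because TimeZone.setDefault affects the whole JVM.

```java
import java.sql.Timestamp;
import java.util.TimeZone;

class EscapeSyntaxDemo {

    // Parses a JDBC escape string while the given zone is the JVM default
    // and returns the resulting point in time as millis since the epoch.
    static long epochMillisUnderDefaultZone(String escapeString, String zoneId) {
        TimeZone original = TimeZone.getDefault();
        try {
            TimeZone.setDefault(TimeZone.getTimeZone(zoneId));
            return Timestamp.valueOf(escapeString).getTime();
        } finally {
            TimeZone.setDefault(original); // restore the JVM-wide setting
        }
    }

    public static void main(String[] args) {
        String text = "2021-03-15 10:00:00";
        long utc = epochMillisUnderDefaultZone(text, "UTC");
        long tokyo = epochMillisUnderDefaultZone(text, "Asia/Tokyo");
        // Same text, different points in time: Tokyo is 9 hours ahead of UTC
        System.out.println((utc - tokyo) / 3_600_000); // 9
    }
}
```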

I suppose that this way of seeing a Timestamp comes from the SQL timestamp datatype. In most database engines this is a date and time without time zone. It’s not a timestamp, despite the name! It doesn’t define a point in time, which is the very purpose of a timestamp and part of its definition.

I have seen a number of cases where the Timestamp prints the same date and time as in the database but doesn’t represent the point in time implied in the database. For example, there may be a convention that “timestamps” in the database are in UTC, while the JVM uses the time zone of the place where it’s running. It’s bad practice, but not one that will go away within the next few years.

This must also have been the reason why Timestamp was fitted with the toLocalDateTime method that you used in the question. It gives you the date and time that were in the database, right? So in this case the conversion in your question ought to be correct, or…?
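Here is a sketch of the UTC-convention scenario described above. It assumes, for illustration, that the driver builds the Timestamp from the database value in the JVM default zone; I simulate that with Timestamp.valueOf(LocalDateTime), and the helper name fromDatabase is hypothetical. With Europe/Copenhagen as the default zone, toLocalDateTime faithfully gives back the database value, while the point in time is an hour off from the intended UTC reading.

```java
import java.sql.Timestamp;
import java.time.LocalDateTime;
import java.util.TimeZone;

class RoundTripDemo {

    // Hypothetical stand-in for a driver: builds the Timestamp
    // from the database value in the JVM default time zone.
    static Timestamp fromDatabase(LocalDateTime valueInDatabase) {
        return Timestamp.valueOf(valueInDatabase);
    }

    public static void main(String[] args) {
        TimeZone original = TimeZone.getDefault();
        try {
            TimeZone.setDefault(TimeZone.getTimeZone("Europe/Copenhagen"));

            // The database value; by convention it is meant to be in UTC
            LocalDateTime inDatabase = LocalDateTime.of(2021, 3, 15, 10, 0);
            Timestamp timestamp = fromDatabase(inDatabase);

            System.out.println(timestamp.toLocalDateTime());               // 2021-03-15T10:00
            System.out.println(timestamp.toLocalDateTime().toLocalDate()); // 2021-03-15
            // Off by Copenhagen's offset (UTC+1 in mid-March), not 10:00Z as intended
            System.out.println(timestamp.toInstant());                     // 2021-03-15T09:00:00Z
        } finally {
            TimeZone.setDefault(original);
        }
    }
}
```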

As others have already mentioned, this can fail miserably, without us having a chance to notice, when the default time zone of the JVM is changed. The JVM’s default time zone can be changed at any time from any part of your program, or from any other program running in the same JVM. When this happens, your Timestamp objects don’t change their point in time, but they do tacitly change their time of day, and sometimes also their date. I’ve read horror stories (in Stack Overflow questions and elsewhere) about the wrong results and the confusion that come out of this.
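A minimal sketch of that silent change: one Timestamp, read with toLocalDateTime while two different zones are the JVM default. The instant and zones are illustrative assumptions, and the helper readUnder is hypothetical; it simulates "some other code changed the default" and then restores the original setting.

```java
import java.sql.Timestamp;
import java.time.Instant;
import java.time.LocalDateTime;
import java.util.TimeZone;

class ZoneChangeDemo {

    // Reads the Timestamp's date and time of day while zoneId is the JVM default
    static LocalDateTime readUnder(Timestamp timestamp, String zoneId) {
        TimeZone original = TimeZone.getDefault();
        try {
            TimeZone.setDefault(TimeZone.getTimeZone(zoneId));
            return timestamp.toLocalDateTime();
        } finally {
            TimeZone.setDefault(original);
        }
    }

    public static void main(String[] args) {
        // A fixed point in time
        Timestamp timestamp = Timestamp.from(Instant.parse("2021-03-15T23:30:00Z"));

        System.out.println(readUnder(timestamp, "UTC"));        // 2021-03-15T23:30
        // Another part of the program changes the JVM default zone...
        System.out.println(readUnder(timestamp, "Asia/Tokyo")); // 2021-03-16T08:30
        // ...yet the point in time is untouched
        System.out.println(timestamp.toInstant());              // 2021-03-15T23:30:00Z
    }
}
```

Note that the date, not only the time of day, changes between the two readings.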

Solution: don’t use Timestamp

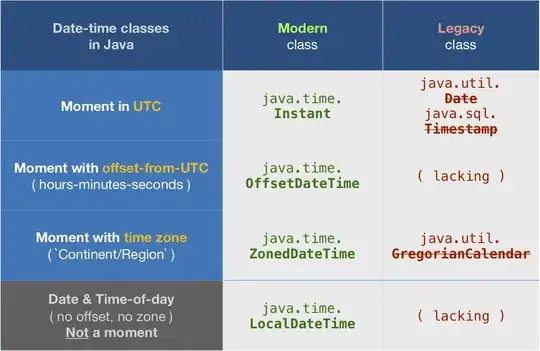

Since JDBC 4.2 you can retrieve java.time types from your SQL database. If your SQL datatype is timestamp with time zone (recommended for timestamps), fetch an OffsetDateTime. Some JDBC drivers also let you fetch an Instant; that’s fine too. In both cases no change of time zone will play any tricks on you. If the SQL type is timestamp without time zone (discouraged and all too common), fetch a LocalDateTime. Again you can be sure that your object doesn’t change its date and time, even if the JVM time zone setting changes. The only catch is that a LocalDateTime never defined a point in time in the first place. Conversion to LocalDate is trivial, as you have already demonstrated in the question.
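A sketch of both cases using ResultSet.getObject(String, Class), assuming your JDBC driver supports the JDBC 4.2 java.time mappings. The class and method names are hypothetical, and the column name you pass in is yours to supply. A SQL NULL comes back as null, which the sketch passes through.

```java
import java.sql.ResultSet;
import java.sql.SQLException;
import java.time.LocalDate;
import java.time.LocalDateTime;
import java.time.OffsetDateTime;
import java.time.ZoneId;

class JavaTimeFromJdbc {

    // SQL type timestamp with time zone: fetch an OffsetDateTime (a point in time)
    // and decide explicitly in which zone you want the date.
    static LocalDate dateFromTimestampWithTimeZone(ResultSet rs, String column, ZoneId zone)
            throws SQLException {
        OffsetDateTime odt = rs.getObject(column, OffsetDateTime.class);
        return odt == null ? null : odt.atZoneSameInstant(zone).toLocalDate();
    }

    // SQL type timestamp without time zone: fetch a LocalDateTime.
    // No time zone is involved, so the JVM default zone cannot interfere.
    static LocalDate dateFromTimestampWithoutTimeZone(ResultSet rs, String column)
            throws SQLException {
        LocalDateTime ldt = rs.getObject(column, LocalDateTime.class);
        return ldt == null ? null : ldt.toLocalDate();
    }
}
```

For example, `JavaTimeFromJdbc.dateFromTimestampWithTimeZone(rs, "created", ZoneId.of("Africa/Cairo"))`, where `created` is a made-up column name. Keeping the zone as an explicit parameter makes the time zone decision visible in the calling code instead of depending on the JVM setting.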

Links