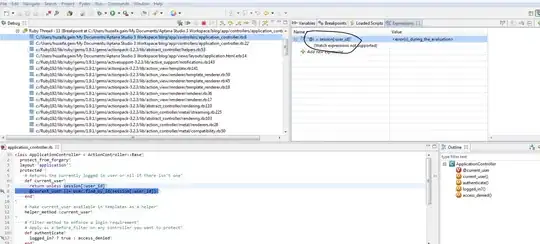

I recently came across OpenAI Five. I was curious to see how the model is built and to understand it. I read on Wikipedia that it "contains a single layer with a 1024-unit LSTM". Then I found this PDF containing a diagram of the architecture.

My Questions

From all this I don't understand a few things:

What does it mean to have a 1024-unit LSTM layer? Does it mean we have 1024 time steps with a single LSTM cell, or does it mean we have 1024 cells? Could you show me some kind of diagram visualizing this? I'm having an especially hard time visualizing 1024 cells in one layer. (I tried looking at several SO questions, such as 1 and 2, and at the OpenAI Five blog, but they didn't help much.)

How can you do reinforcement learning on such a model? I'm used to RL being done with Q-tables that are updated during training. Does this simply mean that their loss function is the reward?

How come such a large model doesn't suffer from vanishing gradients or similar problems? I haven't seen any kind of normalization in the PDF.

In the PDF you can see a blue rectangle; it seems to be a unit, and there are N of those. What does this mean? And please correct me if I'm mistaken: are the pink boxes used to select the best move/item(?)

In general, all of this can be summarized as "How does the OpenAI Five model work?"