I think the irr package has two serious bugs in how it calculates weighted kappa with `kappa2()`.

Please let me know whether these two bugs are real or whether I have misunderstood something.

You can reproduce both bugs with the following examples.

First bug: the labels in the confusion matrix are sorted in the wrong order.

I have two sets of disease-extent scores, one per file (from 0 to 100, where 0 is healthy and 100 is extremely ill).

label_test.csv (you can copy and paste the data into a file to run the test below):

```
0
1
1
1
0
14
53
3
```

pred_test.csv:

```
0
1
1
0
3
4
54
6
```

In script_r.R:

```r
library(irr)

# Each file holds one column of scores without a header row
label <- read.csv('label_test.csv', header = FALSE)
pred <- read.csv('pred_test.csv', header = FALSE)

kapp <- kappa2(data.frame(label, pred), "unweighted")
kappa <- getElement(kapp, "value")
print(kappa) # output: 0.245283

# "equal" = linearly spaced weights
w_kapp <- kappa2(data.frame(label, pred), "equal")
weighted_kappa <- getElement(w_kapp, "value")
print(weighted_kappa) # output: 0.443038
```

When I calculate kappa and weighted kappa in Python, in script_python.py:

```python
import numpy as np
import pandas as pd
from sklearn.metrics import cohen_kappa_score

label_file, pred_file = 'label_test.csv', 'pred_test.csv'

# header=None: the files contain bare scores, so column 0 holds them
label = pd.read_csv(label_file, header=None)[0].to_numpy()
pred = pd.read_csv(pred_file, header=None)[0].to_numpy()

kappa = cohen_kappa_score(label.astype(int), pred.astype(int))
print(kappa)  # output: 0.24528301886792447

# Pass every possible score (0..100 inclusive) so the linear weights follow the score values
weighted_kappa = cohen_kappa_score(label.astype(int), pred.astype(int),
                                   weights='linear', labels=np.arange(101))
print(weighted_kappa)  # output: 0.8359908883826879
```

The unweighted kappa is the same in R and Python, but the weighted kappa from R is far lower than the one from sklearn, even though irr's "equal" weights and sklearn's `weights='linear'` are both linear weights. Which one is wrong? After two days of investigation, I concluded that the weighted kappa from the irr package is wrong. Details are below.

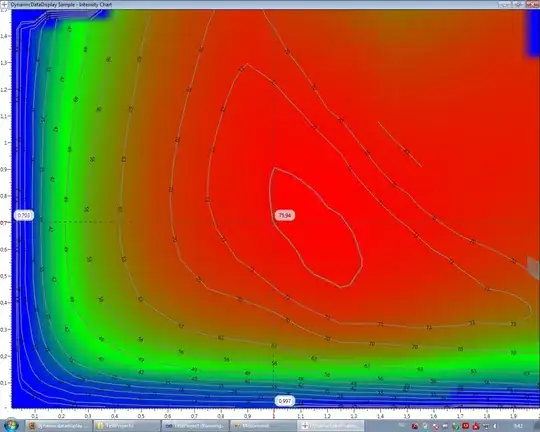

While debugging, I inspected the confusion matrix that irr builds in R (see image 1).

The label order is wrong. It should be [0, 1, 3, 4, 6, 14, 53, 54], as in Python, instead of [0, 1, 14, 3, 4, 53, 54, 6]. It seems that irr sorts the labels as strings rather than as integers, which puts 14 before 3. This should be easy to fix.
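A quick way to check the suspected cause: sorting the distinct scores as strings reproduces irr's order exactly, while sorting them as integers gives sklearn's order. This is a minimal Python sketch using the scores from the first example; it only demonstrates the two orderings and does not call irr itself.

```python
# Scores from the first example (label_test.csv and pred_test.csv)
label = [0, 1, 1, 1, 0, 14, 53, 3]
pred = [0, 1, 1, 0, 3, 4, 54, 6]
levels = set(label) | set(pred)  # distinct values that occur in either file

# Sorting by the string form reproduces the order seen in irr's table
string_order = sorted(levels, key=str)
# Sorting by the integer value gives the order sklearn uses
numeric_order = sorted(levels)

print(string_order)   # [0, 1, 14, 3, 4, 53, 54, 6]
print(numeric_order)  # [0, 1, 3, 4, 6, 14, 53, 54]
```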

Second bug: the confusion matrix in R is incomplete.

The values in my pred_test.csv and label_test.csv do not cover every possible score from 0 to 100, so the confusion matrix that irr builds by default leaves out every score that does not occur in the data. This should be fixed.
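Why the missing values matter can be shown with a small Python sketch. It assumes that linear weights depend on the distance between positions in the list of levels (both the R and the Python numbers in this report are consistent with that); `steps` is a hypothetical helper, not part of irr or sklearn.

```python
# Scores from the first example (label_test.csv and pred_test.csv)
label = [0, 1, 1, 1, 0, 14, 53, 3]
pred = [0, 1, 1, 0, 3, 4, 54, 6]

observed = sorted(set(label) | set(pred))  # only values present in the data, numerically sorted
full_scale = list(range(101))              # every possible score, 0..100

def steps(levels, a, b):
    """Hypothetical helper: distance between two scores, counted in positions of `levels`."""
    return abs(levels.index(a) - levels.index(b))

print(steps(observed, 14, 53))    # 1: neighbours among the observed values
print(steps(full_scale, 14, 53))  # 39: their real distance on the 0-100 scale
```

Even with the sort order fixed, a weighting built on the observed values alone would treat 14 vs. 53 as a one-step disagreement.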

Here is another example.

In pred_test.csv, change the value 54 to 99, then run script_r.R and script_python.py again. The results are:

In R:

kappa: 0.245283

weighted_kappa: 0.443038

In Python:

kappa: 0.24528301886792447

weighted_kappa: 0.592891760904685

The weighted kappa from irr in R does not change at all, whereas the weighted kappa from sklearn drops from 0.836 to 0.593. So irr gets this case wrong as well.

The reason is that sklearn lets us pass the full list of labels, so its confusion matrix covers every possible score from 0 to 100. In irr, however, the labels of the confusion matrix are just the unique values found in label and pred, so many possible scores are missing and the weights are spaced by position in that short list rather than by score. As a result, replacing 54 with 99 leaves the weighted kappa unchanged, as if a 53 vs. 99 disagreement were no worse than a 53 vs. 54 one. It would therefore be better if irr offered an option to let users supply the full set of labels, as sklearn's `labels` argument does.
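The proposed option can be sketched as a linear weighted kappa that takes an explicit, ordered list of levels. `linear_weighted_kappa` and its `levels` argument are hypothetical names for illustration, not part of irr or sklearn. The sketch assumes linear ("equal") weights that depend only on positions in `levels`, and computes kappa as 1 minus observed disagreement over chance-expected disagreement.

```python
from itertools import product

def linear_weighted_kappa(r1, r2, levels):
    """Hypothetical sketch: linear weighted kappa where the distance between two
    scores is the difference of their positions in `levels`."""
    pos = {lev: i for i, lev in enumerate(levels)}
    n = len(r1)
    # Observed disagreement: mean distance over the actual rating pairs
    observed = sum(abs(pos[a] - pos[b]) for a, b in zip(r1, r2)) / n
    # Expected disagreement: mean distance over all n*n pairs of marginal ratings
    expected = sum(abs(pos[a] - pos[b]) for a, b in product(r1, r2)) / (n * n)
    return 1 - observed / expected

label = [0, 1, 1, 1, 0, 14, 53, 3]
pred_54 = [0, 1, 1, 0, 3, 4, 54, 6]   # first example
pred_99 = [0, 1, 1, 0, 3, 4, 99, 6]   # 54 replaced by 99

for pred in (pred_54, pred_99):
    observed_levels = set(label) | set(pred)
    irr_like = sorted(observed_levels, key=str)  # observed values only, string-sorted
    numeric = sorted(observed_levels)            # observed values only, numerically sorted
    full = range(101)                            # user-supplied labels 0..100
    print(round(linear_weighted_kappa(label, pred, irr_like), 6),
          round(linear_weighted_kappa(label, pred, numeric), 6),
          round(linear_weighted_kappa(label, pred, full), 6))
```

With string-sorted observed levels the sketch gives 0.443038 for both versions of pred, matching irr. With `range(101)` it gives 0.835991 and 0.592892, matching sklearn. The middle column (numeric sort, observed values only) gives 0.573333 in both cases, because 99 simply takes the position 54 had, so fixing the sort order alone would not be enough.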