I am trying to understand the usage and benefit of the “cudaOccupancyMaxActiveBlocksPerMultiprocessor” method.

I am using a slightly modified version of the sample program present on NVIDIA developer forum. Basically, I am asking the user to provide the size of the array.

My GPU: NVIDIA GeForce GTX 1070

QUESTIONS:

- The occupancy values returned by the program are very random. Many times, the program returns different occupancy values for the same input array size, is there anything wrong in the program?

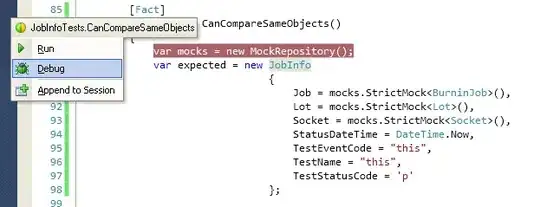

- As shown in the screenshot, if user passed the array size=512 then, the occupancy value is “13” whereas if I set N=512 directly in the program then the occupancy value is “47”. Why?

- Why does user provided array size=1024 has occupancy value =0?

SAMPLE CODE:

Source.cpp

#include "kernel_header.cuh"

#include <algorithm>

#include <iostream>

using namespace std;

int main(int argc, char* argv[])

{

int N;

int userSize = 0;

//ask size to user

cout << "\n\nType the size of 1D Array: " << endl;

cin >> userSize;

N = userSize>0? userSize : 1024; //<<<<<<<<<<<<<<<-------PROBLEM

int* array = (int*)calloc(N, sizeof(int));

for (int i = 0; i < N; i++)

{

array[i] = i + 1;

//cout << "i = " << i << " is " << array[i]<<endl;

}

launchMyKernel(array, N);

free(array);

return 0;

}

kernel_header.cuh

#ifndef KERNELHEADER

#define KERNELHEADER

void launchMyKernel(int* array, int arrayCount);

#endif

kernel.cu

#include "stdio.h"

#include "cuda_runtime.h"

__global__ void MyKernel(int* array, int arrayCount)

{

int idx = threadIdx.x + blockIdx.x * blockDim.x;

if (idx < arrayCount)

{

array[idx] *= array[idx];

}

}

void launchMyKernel(int* array, int arrayCount)

{

int blockSize; // The launch configurator returned block size

int minGridSize; // The minimum grid size needed to achieve the

// maximum occupancy for a full device launch

int gridSize; // The actual grid size needed, based on input size

cudaOccupancyMaxPotentialBlockSize(&minGridSize, &blockSize,MyKernel, 0, 0);

// Round up according to array size

gridSize = (arrayCount + blockSize - 1) / blockSize;

MyKernel << < gridSize, blockSize >> > (array, arrayCount);

cudaDeviceSynchronize();

// calculate theoretical occupancy

int maxActiveBlocks;

cudaOccupancyMaxActiveBlocksPerMultiprocessor(&maxActiveBlocks,

MyKernel, blockSize,

0);

int device;

cudaDeviceProp props;

cudaGetDevice(&device);

cudaGetDeviceProperties(&props, device);

float occupancy = (maxActiveBlocks * blockSize / props.warpSize) /

(float)(props.maxThreadsPerMultiProcessor /

props.warpSize);

printf("\n\nMax. Active blocks found: %d\nOur Kernel block size decided: %d\nWarp Size: %d\nNumber of threads per SM: %d\n\n\n\n", maxActiveBlocks

, blockSize,

props.warpSize,

props.maxThreadsPerMultiProcessor);

printf("Launched blocks of size %d. Theoretical occupancy: %f\n",

blockSize, occupancy);

}