I am in charge of improving the performance of our application. I'm now at the point where I'm considering running certain things in parallel.

If it can help: we use Postgres as our DB, and EclipseLink is our JPA Provider.

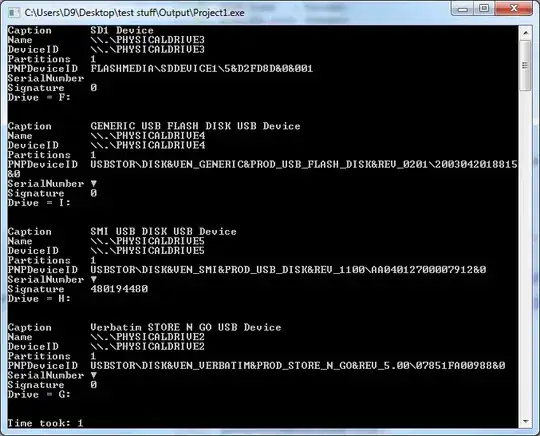

This is a snapshot of a request's execution (against our PUT /something endpoint) as visualized through Dynatrace:

Each yellow bar represents an SQL query's running time in the DB.

Some of those calls are not dependent on each other and could happen simultaneously.

For example, considering the first 9 queries (from the first SELECT to the last UPDATE, inclusively):

- The information from the `SELECT` queries is only used by the `INSERT` calls. They could thus be run in parallel with the `DELETE` queries.
- At the DB level, there are no Foreign Key constraints declared. This means I could run all the `DELETE` queries in parallel (and the same goes for the `INSERT` calls).

The question is: what reasons might there be to avoid using @Async to optimize the performance of this endpoint?

I would use CompletableFuture as described in Spring's @Async documentation.
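To make the idea concrete, here is a minimal sketch of the dependency graph I have in mind, written with plain JDK `CompletableFuture` and a fixed thread pool rather than Spring's @Async so that it runs on its own. The `select`, `delete` and `insert` methods and the table names are hypothetical stand-ins, not our real queries. It also assumes the `INSERT` calls should wait for the `DELETE` queries to finish, which may be stricter than necessary.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class ParallelQueriesSketch {

    // Hypothetical stand-ins for the real queries (no database involved).
    static String select(String name) { return "row-from-" + name; }
    static int delete(String table) { return 1; }              // pretend 1 row deleted
    static int insert(String table, String data) { return 1; } // pretend 1 row inserted

    static int runPut(ExecutorService pool) {
        // SELECTs and DELETEs do not depend on each other, so all four start at once.
        CompletableFuture<String> selA = CompletableFuture.supplyAsync(() -> select("a"), pool);
        CompletableFuture<String> selB = CompletableFuture.supplyAsync(() -> select("b"), pool);
        CompletableFuture<Integer> delX = CompletableFuture.supplyAsync(() -> delete("x"), pool);
        CompletableFuture<Integer> delY = CompletableFuture.supplyAsync(() -> delete("y"), pool);

        // Assumption: INSERTs wait for every DELETE as well as for their own SELECT.
        CompletableFuture<Void> allDeletes = CompletableFuture.allOf(delX, delY);
        CompletableFuture<Integer> insA =
                selA.thenCombineAsync(allDeletes, (row, ignored) -> insert("a", row), pool);
        CompletableFuture<Integer> insB =
                selB.thenCombineAsync(allDeletes, (row, ignored) -> insert("b", row), pool);

        // Block the request thread until everything is done, then sum affected rows.
        CompletableFuture.allOf(insA, insB).join();
        return delX.join() + delY.join() + insA.join() + insB.join();
    }

    public static void main(String[] args) {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        try {
            System.out.println("rows affected: " + runPut(pool));
        } finally {
            pool.shutdown();
        }
    }
}
```

With @Async, each `supplyAsync` call would presumably become a call to an annotated method returning a `CompletableFuture`, with the pool configured as the async executor.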

For example, potential pitfalls I have in mind:

- Thread management that might end up leading to poorer performance when the service is under stress load.
- `EntityManager` not being thread-safe.
- Exception handling.
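Two of these concerns can be illustrated without any database. The sketch below uses plain JDK classes only. The pool sizes (4 threads, 16 queued tasks) are arbitrary assumptions, and `failingDelete` is a hypothetical query that always throws. It shows a bounded pool that pushes work back onto the caller when saturated, and how a failure in one task surfaces through `join()` while the other task has already completed. As far as I understand, a Spring-managed transaction is bound to the calling thread, so the tasks would not share one transaction and the completed task would not be rolled back automatically.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class AsyncPitfallsSketch {

    // Bounded pool: 4 threads, 16 queued tasks (assumed values). When both are
    // full, CallerRunsPolicy makes the submitting thread run the task itself
    // instead of letting the queue grow without limit.
    static ThreadPoolExecutor boundedPool() {
        return new ThreadPoolExecutor(
                4, 4, 30, TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(16),
                new ThreadPoolExecutor.CallerRunsPolicy());
    }

    // Hypothetical query that always fails.
    static int failingDelete() {
        throw new IllegalStateException("constraint violated");
    }

    static String runAndReport(ThreadPoolExecutor pool) {
        CompletableFuture<Integer> ok = CompletableFuture.supplyAsync(() -> 1, pool);
        CompletableFuture<Integer> bad =
                CompletableFuture.supplyAsync(AsyncPitfallsSketch::failingDelete, pool);
        try {
            CompletableFuture.allOf(ok, bad).join();
            return "all succeeded";
        } catch (CompletionException e) {
            // join() wraps the original exception; the "ok" task is not undone.
            return "failed: " + e.getCause().getMessage();
        }
    }

    public static void main(String[] args) {
        ThreadPoolExecutor pool = boundedPool();
        try {
            System.out.println(runAndReport(pool));
        } finally {
            pool.shutdown();
        }
    }
}
```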

The service expects a peak of 3000 requests/second, but this specific endpoint will only be called once every few minutes.