I calibrated a camera according to:

ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objpointslist, imgpointslist, imsize, None, None)

which resulted in:

rvecs = array([[ 0.01375037],
       [-3.03114683],
       [-0.01097119]])

tvecs = array([[ 0.16742439],
       [-0.33141961],
       [13.50338875]])

I calculated the rotation matrix according to:

R = cv2.Rodrigues(rvecs)[0]

R = array([[-0.99387165, -0.00864604, -0.11020157],
       [-0.00944355,  0.99993285,  0.00671693],
       [ 0.1101361 ,  0.00771646, -0.99388656]])

and created an Rt matrix resulting in:

Rt = array([[-0.99387165, -0.00864604, -0.11020157,  0.16742439],
       [-0.00944355,  0.99993285,  0.00671693, -0.33141961],
       [ 0.1101361 ,  0.00771646, -0.99388656, 13.50338875],
       [ 0.        ,  0.        ,  0.        ,  1.        ]])

Now when I try to get the position of the real-world coordinate [0, 0.4495, 0] in the image according to:

realworldpoint = array([0. , 0.4495, 0. , 1. ], dtype=float32)

imagepoint = np.dot(Rt, realworldpoint)

I get:

array([ 0.16353799, 0.1180502 , 13.5068573 , 1. ])

Instead of my expected [1308, 965] position in the image:

array([1308, 965, 0, 1])

I doubt the integrity of the rotation and translation vectors returned by the calibrateCamera function, but maybe I am missing something? I double-checked the inputs to OpenCV's calibrateCamera function (objpointslist: 3D coordinates of the centers of the AprilTag/ArUco markers; imgpointslist: 2D positions of the marker centers in the image), and these were all correct.

Could one of you help me out?

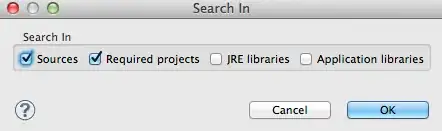

I followed OpenCV's calibration procedure:

EDIT (2022/02/03): I was able to solve it! Steps for solution:

- Run cv2.calibrateCamera() to get the camera intrinsics (camera matrix) and extrinsics (rotation and translation vectors)

- Calculate the rotation matrix from the rotation vector with cv2.Rodrigues()

- Create the Rt matrix

- Use a known point (both its 2D image position [u v 1] and its 3D world position [Xw Yw Zw 1] are known) to calculate the scaling factor (s).

NOTE: To get the right scaling factor, divide the result of the projection equation by its third element, so that the result has the form [u v 1]. The value you divide by is your scaling factor:
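A minimal sketch of this step, assuming the standard pinhole relation s·[u v 1]ᵀ = K·[R|t]·[Xw Yw Zw 1]ᵀ. The camera matrix `K` below is a hypothetical placeholder (the post does not show `mtx`), and `project` is an illustrative helper name, so the printed pixel will not match the [1308, 965] from the question.

```python
import numpy as np

# Hypothetical intrinsics: replace with the mtx returned by cv2.calibrateCamera.
K = np.array([[1000.0,    0.0, 960.0],
              [   0.0, 1000.0, 540.0],
              [   0.0,    0.0,   1.0]])

# Extrinsics copied from the question.
R = np.array([[-0.99387165, -0.00864604, -0.11020157],
              [-0.00944355,  0.99993285,  0.00671693],
              [ 0.1101361 ,  0.00771646, -0.99388656]])
t = np.array([0.16742439, -0.33141961, 13.50338875])
Rt = np.hstack([R, t.reshape(3, 1)])  # 3x4 matrix [R|t]

def project(K, Rt, world_point):
    """Project a 3D world point; return the pixel (u, v) and the scale s."""
    Xw = np.append(np.asarray(world_point, dtype=float), 1.0)  # homogeneous
    suv = K @ Rt @ Xw          # equals s * [u, v, 1]
    s = suv[2]                 # third element is the scaling factor
    return suv[:2] / s, s      # dividing by s makes the vector [u, v, 1]

uv, s = project(K, Rt, [0.0, 0.4495, 0.0])
print("pixel:", uv, "scale:", s)
```

With a camera matrix whose last row is [0, 0, 1], s equals the depth of the point along the camera's optical axis, which is why it matches the third element of the Rt product shown in the question.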

Now that the scaling factor is known, the same equation can be rearranged to calculate the XYZ coordinate of an arbitrary point in the image [u v 1] according to:
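The inversion can be sketched as [Xw Yw Zw]ᵀ = R⁻¹·(s·K⁻¹·[u v 1]ᵀ − t). As before, `K` is a hypothetical placeholder and `pixel_to_world` is an illustrative name. One assumption worth stating: s is the depth of the point in the camera frame, so reusing the s from the reference point is only exact for points at that same depth (for example, points on a plane roughly parallel to the sensor).

```python
import numpy as np

# Hypothetical intrinsics: replace with the mtx returned by cv2.calibrateCamera.
K = np.array([[1000.0,    0.0, 960.0],
              [   0.0, 1000.0, 540.0],
              [   0.0,    0.0,   1.0]])

# Extrinsics copied from the question.
R = np.array([[-0.99387165, -0.00864604, -0.11020157],
              [-0.00944355,  0.99993285,  0.00671693],
              [ 0.1101361 ,  0.00771646, -0.99388656]])
t = np.array([0.16742439, -0.33141961, 13.50338875])

def pixel_to_world(K, R, t, uv, s):
    """Back-project pixel (u, v) with known scale s to world coordinates."""
    uv1 = np.array([uv[0], uv[1], 1.0])
    cam = s * np.linalg.solve(K, uv1)     # s * K^-1 * [u, v, 1]: camera frame
    return np.linalg.solve(R, cam - t)    # R^-1 * (cam - t): world frame

# Round-trip check with the known point from the question.
Xw = np.array([0.0, 0.4495, 0.0])
suv = K @ (R @ Xw + t)                    # forward projection, s * [u, v, 1]
s = suv[2]
uv = suv[:2] / s
recovered = pixel_to_world(K, R, t, uv, s)
print(recovered)
```

`np.linalg.solve` is used instead of an explicit inverse because the rounded R printed in the question is only approximately orthonormal.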

The crucial step in this solution was to calculate the scaling factor by dividing by the third element of the vector obtained on the [u v 1] side of the equation. Only after this division is that vector actually [u v 1].

The next step was to correct for lens distortion. This was easily done by applying cv2.undistortPoints to the distorted (u, v) point in the image before feeding u and v into the above equation to find the XYZ coordinate of that point.