I'm attempting to create a std::vector<std::set<int>> with one set for each NUMA-node, containing the thread-ids obtained using omp_get_thread_num().

Idea:

- Create data which is larger than L3 cache,

- set first touch using thread 0,

- perform multiple experiments to determine the minimum access time of each thread,

- extract the threads into nodes based on sorted access times and information about the topology.

Code: (Intel compiler, OpenMP)

// create data which will be shared by multiple threads

const auto part_size = std::size_t{50 * 1024 * 1024 / sizeof(double)}; // 50 MB

const auto size = 2 * part_size;

auto container = std::unique_ptr<double>(new double[size]);

// open a parallel section

auto thread_count = 0;

auto thread_id_min_duration = std::multimap<double, int>{};

#ifdef DECIDE_THREAD_COUNT

#pragma omp parallel num_threads(std::thread::hardware_concurrency())

#else

#pragma omp parallel

#endif

{

// perform first touch using thread 0

const auto thread_id = omp_get_thread_num();

if (thread_id == 0)

{

thread_count = omp_get_num_threads();

for (auto index = std::size_t{}; index < size; ++index)

{

container.get()[index] = static_cast<double>(std::rand() % 10 + 1);

}

}

#pragma omp barrier

// access the data using all threads individually

#pragma omp for schedule(static, 1)

for (auto thread_counter = std::size_t{}; thread_counter < thread_count; ++thread_counter)

{

// calculate the minimum access time of this thread

auto this_thread_min_duration = std::numeric_limits<double>::max();

for (auto experiment_counter = std::size_t{}; experiment_counter < 250; ++experiment_counter)

{

const auto* data = experiment_counter % 2 == 0 ? container.get() : container.get() + part_size;

const auto start_timestamp = omp_get_wtime();

for (auto index = std::size_t{}; index < part_size; ++index)

{

static volatile auto exceedingly_interesting_value_wink_wink = data[index];

}

const auto end_timestamp = omp_get_wtime();

const auto duration = end_timestamp - start_timestamp;

if (duration < this_thread_min_duration)

{

this_thread_min_duration = duration;

}

}

#pragma omp critical

{

thread_id_min_duration.insert(std::make_pair(this_thread_min_duration, thread_id));

}

}

} // #pragma omp parallel

Not shown here is code which outputs the minimum access times sorted into the multimap.

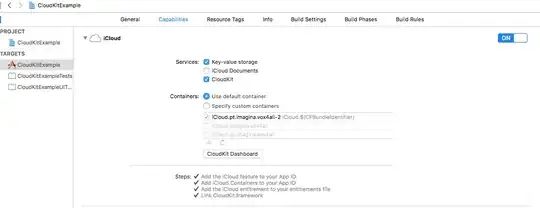

Env. and Output

- How do

OMP_PLACESandOMP_PROC_BINDwork?

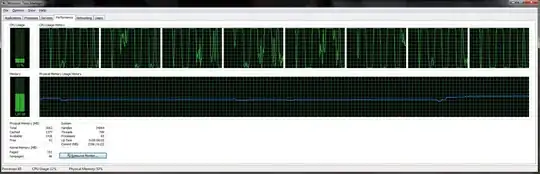

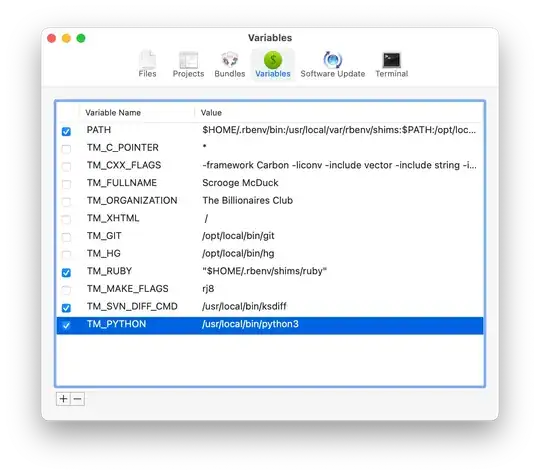

I am attempting to not use SMT by using export OMP_PLACES=cores OMP_PROC_BIND=spread OMP_NUM_THREADS=24. However, I'm getting this output:

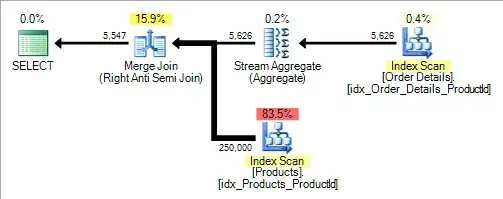

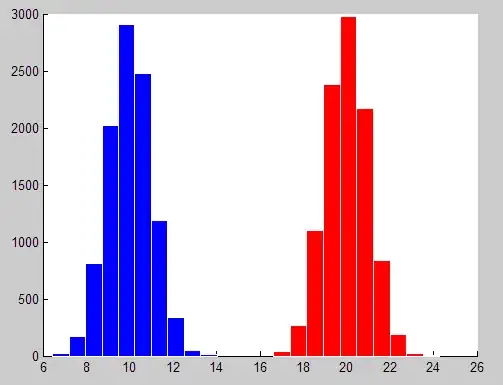

What's puzzling me is that I'm having the same access times on all threads. Since I'm trying to spread them across the 2 NUMA nodes, I expect to neatly see 12 threads with access time, say, x and another 12 with access time ~2x.

- Why is the above happening?

Additional Information

Even more puzzling are the following environments and their outputs:

export OMP_PLACES=cores OMP_PROC_BIND=spread OMP_NUM_THREADS=26

export OMP_PLACES=cores OMP_PROC_BIND=spread OMP_NUM_THREADS=48

Any help in understanding this phenomenon would be much appreciated.