After a lot of testing and searching, I have not found a solution, and I hope you can guide me.

My code is available at this GitHub address.

Because the main code is complex, I have written a simple example that reproduces the same problem and linked it at the address above.

I have a worker that contains four app.tasks with the following names:

- app_1000
- app_1002
- app_1004
- app_1006

Each of these app.tasks should run only one instance at a time. For example, app_1000 should not be executed two or three times simultaneously; only when the current app_1000 task has finished may the next app_1000 job start.

Celery config:

    broker_url='amqp://guest@localhost//'
    result_backend='rpc://'
    include=['celery_app.tasks']
    worker_prefetch_multiplier = 1
    task_routes={
        'celery_app.tasks.app_1000':{'queue':'q_app'},
        'celery_app.tasks.app_1002':{'queue':'q_app'},
        'celery_app.tasks.app_1004':{'queue':'q_app'},
        'celery_app.tasks.app_1006':{'queue':'q_app'},
        'celery_app.tasks.app_timeout':{'queue':'q_timeout'},
    }

As you can see, worker_prefetch_multiplier = 1 is set in the configuration above.

I use FastAPI to send the request. The sample request is as follows (to simplify the question, I only send the number of tasks that this worker must execute through FastAPI).

I also use Flower to monitor the tasks.

After I press the Send button in Postman, all 20 hypothetical tasks are sent to the worker. At first everything is fine, because each app.task has started exactly one task.

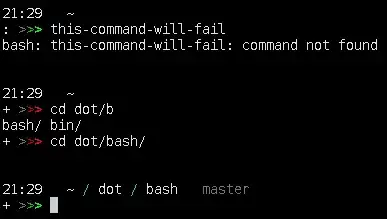

But a few minutes later, as things progress, the same app.task starts running more than once at the same time. For example, in the screenshot app_1000 has been started twice, and in the next screenshot app_1006 has been started twice, with both instances running simultaneously. I do not want this to happen.

A few moments later:

I expect app_1000 or app_1006 to run only one task at a time, but I don't know how to achieve this.
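To illustrate what I think is happening, here is a minimal stdlib-only sketch. It is not Celery code: it only models one FIFO queue served by four slots, like `--concurrency=4` on `q_app`. The durations, the round-robin arrival order, and the names `simulate` and `same_name_overlaps` are assumptions made up for this illustration. As far as I understand, worker_prefetch_multiplier only limits how many messages are reserved per process; it does not look at task names, so nothing in this model (or in my config) stops two slots from picking up the same name.

```python
import heapq

# Hypothetical task durations in arbitrary time units (not taken from my project).
DURATION = {"app_1000": 5, "app_1002": 1, "app_1004": 3, "app_1006": 2}
NAMES = list(DURATION)


def simulate(num_tasks=20, concurrency=4):
    """Serve a FIFO queue with `concurrency` slots; return (name, start, end) runs."""
    # Every slot is free at time 0; the heap always yields the slot that frees up first.
    slots = [(0, slot_id) for slot_id in range(concurrency)]
    heapq.heapify(slots)
    runs = []
    for i in range(num_tasks):
        name = NAMES[i % len(NAMES)]  # assumed: messages arrive round-robin by name
        free_at, slot_id = heapq.heappop(slots)
        end = free_at + DURATION[name]
        runs.append((name, free_at, end))
        heapq.heappush(slots, (end, slot_id))
    return runs


def same_name_overlaps(runs):
    """Return pairs of runs that share a task name and overlap in time."""
    return [
        (a, b)
        for i, a in enumerate(runs)
        for b in runs[i + 1:]
        if a[0] == b[0] and a[1] < b[2] and b[1] < a[2]
    ]


runs = simulate()
overlaps = same_name_overlaps(runs)
print(runs[:5])       # the first four runs have distinct names, the fifth does not
print(len(overlaps))  # greater than zero: same-name tasks ran concurrently
```

In this model the first four tasks start on four different names, which matches what I see at the beginning. As soon as the shortest task finishes, its slot takes the next message in the queue, which is another app_1000 while the first app_1000 is still running.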

Important note: Please do not suggest creating 4 queues for the 4 app.tasks, because in my real project I have more than 100 app.tasks and it would be very difficult to manage that many queues.

A question may arise: why should app_1000, for example, not be executed simultaneously? The answer is complicated and would require explaining the main code, which is too long, so please skip this question.

The code is on GitHub (it is small and will not take much of your time).

If you want to run it, you can use the following commands:

    celery -A celery_app worker -Q q_app --loglevel=INFO --concurrency=4 -n worker@%h
    celery flower --port=5566
    uvicorn api:app --reload

Thank you.