I'm implementing a program that needs to serialize and deserialize large objects, so I ran some tests with the pickle, cPickle and marshal modules to choose the best one. Along the way I found something very interesting:

I'm calling `dumps` and then `loads` (for each module) on a list of dicts, tuples, ints, floats and strings.
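The benchmark code itself isn't shown. The following is a minimal Python 3 sketch of a comparable harness, with several assumptions: the data mix in the hypothetical `make_data` helper, the list size, and the `bench` helper are illustrative, not the original code. Python 3 has no `cPickle` module; `pickle.dumps`/`pickle.loads` already use the C accelerator, and `pickle._dumps`/`pickle._loads` are the private pure-Python fallbacks.

```python
import marshal
import pickle
import time


def make_data(n):
    """Hypothetical test list of dicts, tuples, ints, floats and strings.

    Repeating `base` with `*` reuses the same five objects over and over;
    whether the original benchmark built its list this way is unknown.
    """
    base = [{"key": 1, "other": 2.5}, (1, 2, 3), 42, 3.14, "some string"]
    return base * (n // len(base))


def bench(name, dumps, loads, data):
    """Time one dumps/loads round trip and print a summary line."""
    start = time.perf_counter()
    blob = dumps(data)
    dump_secs = time.perf_counter() - start

    start = time.perf_counter()
    restored = loads(blob)
    load_secs = time.perf_counter() - start

    print(f"{name}: dump {dump_secs:.3f}s, load {load_secs:.3f}s, "
          f"{len(blob)} bytes, same length? {len(restored) == len(data)}")
    return blob, restored


if __name__ == "__main__":
    data = make_data(200_000)  # much smaller than the 7340032 in the question
    # pickle._dumps/_loads are the pure-Python implementation (private,
    # undocumented API), used here only to mirror the old "pickle" row;
    # pickle.dumps/loads play the role cPickle had in Python 2.
    bench("pure-Python pickle", pickle._dumps, pickle._loads, data)
    bench("C pickle", pickle.dumps, pickle.loads, data)
    bench("marshal", marshal.dumps, marshal.loads, data)
```

Absolute numbers from this sketch won't match the question's: Python 2's `pickle`/`cPickle` default to the text protocol 0 unless a protocol is passed, while Python 3 defaults to a binary protocol.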

This is the output of my benchmark:

    DUMPING a list of length 7340032
    ----------------------------------------------------------------------
    pickle => 14.675 seconds
    length of pickle serialized string: 31457430
    cPickle => 2.619 seconds
    length of cPickle serialized string: 31457457
    marshal => 0.991 seconds
    length of marshal serialized string: 117440540

    LOADING a list of length: 7340032
    ----------------------------------------------------------------------
    pickle => 13.768 seconds
    (same length?) 7340032 == 7340032
    cPickle => 2.038 seconds
    (same length?) 7340032 == 7340032
    marshal => 6.378 seconds
    (same length?) 7340032 == 7340032

So, from these results we can see that `marshal` was extremely fast in the dumping part of the benchmark: 14.8x faster than `pickle` and 2.6x faster than `cPickle`.

But, to my great surprise, `marshal` was by far slower than `cPickle` in the loading part: 2.2x faster than `pickle`, but 3.1x slower than `cPickle`.
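The speed-up factors quoted above follow directly from the timings in the benchmark output; a quick arithmetic check:

```python
# Timings in seconds, copied from the benchmark output above.
dump = {"pickle": 14.675, "cPickle": 2.619, "marshal": 0.991}
load = {"pickle": 13.768, "cPickle": 2.038, "marshal": 6.378}


def ratio(slower, faster):
    """How many times longer `slower` took than `faster`, to one decimal."""
    return round(slower / faster, 1)


print(ratio(dump["pickle"], dump["marshal"]))   # 14.8: marshal dumps faster
print(ratio(dump["cPickle"], dump["marshal"]))  # 2.6
print(ratio(load["pickle"], load["marshal"]))   # 2.2: marshal loads faster
print(ratio(load["marshal"], load["cPickle"]))  # 3.1: cPickle loads faster
```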

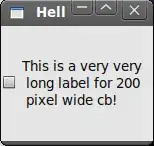

And as for RAM, marshal performance while loading was also very inefficient:

I'm guessing the reason loading with `marshal` is so slow is somehow related to the length of its serialized string (about 3.7x longer than the `pickle` and `cPickle` ones).
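One way to probe this guess is to check whether repeated references to one object survive a round trip. This is a sketch under stated assumptions: it uses Python 3, the list is hypothetically built by repeating one shared dict (the question doesn't say how its data was built), and it passes `marshal.dumps`'s optional `version` argument. Format version 2, which Python 2.7's `marshal` writes, has no back-references, so every occurrence is written and later rebuilt in full; format versions 3 and later (Python 3.4+), like `pickle`'s memo, can point back to an object already written.

```python
import marshal
import pickle

# Hypothetical data: one dict referenced many times, as "[obj] * n" produces.
record = {"name": "x", "values": (1, 2, 3), "score": 0.5}
data = [record] * 10_000


def shared_after_load(loads, blob):
    """True if the restored list reuses one object for the repeated slots."""
    restored = loads(blob)
    return restored[0] is restored[1]


pickled = pickle.dumps(data)
marshal_v2 = marshal.dumps(data, 2)  # version 2: no back-references
marshal_new = marshal.dumps(data)    # default marshal.version (3+ on 3.4+)

for name, blob, loads in [
    ("pickle", pickled, pickle.loads),
    ("marshal v2", marshal_v2, marshal.loads),
    ("marshal default", marshal_new, marshal.loads),
]:
    print(f"{name:16} {len(blob):>8} bytes, shared after load: "
          f"{shared_after_load(loads, blob)}")
```

If version 2 comes out much larger and reports `False`, each slot of the restored list holds its own freshly built dict, which would be consistent with both a longer string and higher memory use after loading. If the real data consists of distinct objects, this effect would not apply.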

- Why does `marshal` dump faster and load slower?
- Why is `marshal`'s serialized string so long?
- Why is `marshal`'s loading so inefficient in RAM?
- Is there a way to improve `marshal`'s loading performance?
- Is there a way to merge `marshal`'s fast dumping with `cPickle`'s fast loading?