I have a multiply-add kernel inside my application and I want to increase its performance.

I use an Intel Core i7-960 (3.2 GHz clock) and have already manually implemented the kernel using SSE intrinsics as follows:

    for(int i=0; i<iterations; i+=4) {
        y1 = _mm_set_ss(output[i]);
        y2 = _mm_set_ss(output[i+1]);
        y3 = _mm_set_ss(output[i+2]);
        y4 = _mm_set_ss(output[i+3]);

        for(k=0; k<ksize; k++){
            for(l=0; l<ksize; l++){
                w = _mm_set_ss(weight[i+k+l]);

                x1 = _mm_set_ss(input[i+k+l]);
                y1 = _mm_add_ss(y1,_mm_mul_ss(w,x1));
                …
                x4 = _mm_set_ss(input[i+k+l+3]);
                y4 = _mm_add_ss(y4,_mm_mul_ss(w,x4));
            }
        }

        _mm_store_ss(&output[i],y1);
        _mm_store_ss(&output[i+1],y2);
        _mm_store_ss(&output[i+2],y3);
        _mm_store_ss(&output[i+3],y4);
    }
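
For reference, here is a plain C sketch of what the intrinsics loop computes, which can be handy for checking results. It assumes the elided `x2`/`x3` lines follow the same pattern as `x1` and `x4` (lane `j` reads `input[i+k+l+j]`); the function name and signature are hypothetical and not part of my application.

```c
/* Plain C reference for the scalar SSE kernel.
 * Assumptions (not confirmed by the original code):
 *   - the elided lanes 2 and 3 mirror lanes 1 and 4;
 *   - iterations is a multiple of 4;
 *   - input holds at least iterations + 2*(ksize-1) elements and
 *     weight at least iterations - 3 + 2*(ksize-1) elements. */
void madd_kernel_ref(float *output, const float *input, const float *weight,
                     int iterations, int ksize)
{
    for (int i = 0; i < iterations; i += 4) {
        /* Four independent accumulators, like y1..y4 */
        float y[4] = { output[i], output[i + 1], output[i + 2], output[i + 3] };

        for (int k = 0; k < ksize; k++) {
            for (int l = 0; l < ksize; l++) {
                float w = weight[i + k + l];      /* one weight shared by all lanes */
                for (int j = 0; j < 4; j++)
                    y[j] += w * input[i + k + l + j];
            }
        }

        for (int j = 0; j < 4; j++)
            output[i + j] = y[j];
    }
}
```

Note that a compiler may contract the multiply and add into a fused operation on targets that support it, so results can differ in the last bit from the separate `mulss`/`addss` sequence.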

I know I can use packed FP vectors to increase the performance, and I have already done so successfully, but I want to know why the scalar code isn't able to reach the processor's peak performance.

The performance of this kernel on my machine is ~1.6 FP operations per cycle, while the maximum would be 2 FP operations per cycle (since FP add + FP mul can be executed in parallel).
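
To put these numbers in cycles: one inner-loop iteration performs 4 multiplies and 4 adds (assuming the elided lanes follow the same pattern, which matches the 4 `mulss` and 4 `addss` in the assembly listing in the update), so 8 FP operations. The small sketch below, with hypothetical helper names, converts between throughput and cycles per iteration.

```c
/* Cycles needed per inner-loop iteration at a given FP throughput.
 * ops_per_iter:  FP operations in one iteration (8 for this kernel,
 *                assuming 4 multiplies + 4 adds).
 * ops_per_cycle: sustained FP operations per cycle. */
double cycles_per_iteration(double ops_per_iter, double ops_per_cycle)
{
    return ops_per_iter / ops_per_cycle;
}

/* Fraction of the theoretical peak that is actually achieved. */
double fraction_of_peak(double measured_ops_per_cycle, double peak_ops_per_cycle)
{
    return measured_ops_per_cycle / peak_ops_per_cycle;
}
```

With 8 FP operations per iteration, the peak of 2 operations per cycle corresponds to 4 cycles per iteration, while the measured ~1.6 corresponds to about 5 cycles per iteration, i.e. roughly one extra cycle per iteration.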

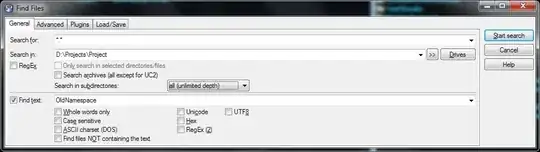

If I have read the generated assembly code correctly, the ideal schedule would look as follows, where the mov instruction takes 3 cycles, the switch latency from the load domain to the FP domain for the dependent instructions takes 2 cycles, the FP multiply takes 4 cycles and the FP add takes 3 cycles. (Note that the multiply -> add dependence doesn't incur any switch latency, because both operations belong to the same domain.)
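
Adding up the latencies quoted above gives the length of one load -> multiply -> add chain. The sketch below only restates those numbers (they are the values I assume, not independently measured ones), and the names are hypothetical.

```c
/* Latencies in cycles, as assumed in the text above. */
enum {
    LAT_LOAD          = 3,  /* movss from memory */
    LAT_DOMAIN_SWITCH = 2,  /* load domain -> FP domain */
    LAT_FP_MUL        = 4,  /* mulss */
    LAT_FP_ADD        = 3   /* addss */
};

/* Latency from issuing a load until the dependent add result is ready. */
int load_to_add_latency(void)
{
    return LAT_LOAD + LAT_DOMAIN_SWITCH + LAT_FP_MUL + LAT_FP_ADD;
}

/* Loop-carried latency per accumulator: only the add feeds the next
 * iteration, because the load and multiply do not depend on y1..y4. */
int loop_carried_latency(void)
{
    return LAT_FP_ADD;
}
```

Under these assumptions a single chain takes 12 cycles, but the only dependence carried from one iteration to the next is the 3-cycle add on each accumulator, so the chains of successive iterations can overlap.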

According to the measured performance (~80% of the maximum theoretical performance) there is an overhead of ~3 instructions per 8 cycles.

I am trying to either:

- get rid of this overhead, or

- explain where it comes from

Of course, cache misses and data misalignment can increase the latency of the move instructions, but are there any other factors that could play a role here, such as register read stalls?

I hope my problem is clear, thanks in advance for your responses!

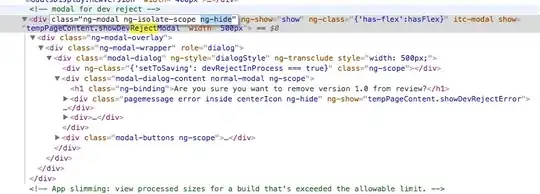

Update: The assembly of the inner-loop looks as follows:

...

    Block 21:
      movssl  (%rsi,%rdi,4), %xmm4
      movssl  (%rcx,%rdi,4), %xmm0
      movssl  0x4(%rcx,%rdi,4), %xmm1
      movssl  0x8(%rcx,%rdi,4), %xmm2
      movssl  0xc(%rcx,%rdi,4), %xmm3
      inc %rdi
      mulss %xmm4, %xmm0
      cmp $0x32, %rdi
      mulss %xmm4, %xmm1
      mulss %xmm4, %xmm2
      mulss %xmm3, %xmm4
      addss %xmm0, %xmm5
      addss %xmm1, %xmm6
      addss %xmm2, %xmm7
      addss %xmm4, %xmm8
      jl 0x401b52 <Block 21>

...