No and Yes.

TL;DR: TRIM is a command designed to tell the drive which LBA ranges it can set aside for garbage collection. Writing zeros can have the same effect, depending on how the firmware treats zero-entropy data, but in the worst case it actually allocates the entire LBA space, leaving only the over-provisioned space as wiggle room for the firmware. That can increase write amplification and thus wear: the exact opposite of what you're trying to accomplish.

Simple answer: No. TRIM is a command that, in general, causes an SSD to unmap LBAs (logical block addresses) from PBAs (physical block addresses). If such an unmapped sector is read, the controller typically returns zeros without even reading the NAND. Unmapped or stale sectors become available to the garbage collector, which can then consolidate data so that whole NAND blocks can be erased, after which that space is available for the drive to write to.

Writing zeros is writing data, so writing zeros from LBA(min) to LBA(max) causes the entire LBA space to be mapped. At least, this is how it used to work, and how it still works on older and perhaps cheaper, lower-spec drives.
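To make the difference between the two operations concrete, here is a minimal sketch of a toy translation layer in Python. Everything in it (`ToyFTL`, the sector size, the 1000 user LBAs and 70 spare pages) is made up for illustration; it models a drive that does no zero detection or compression, and it is not how any particular firmware is implemented.

```python
SECTOR = 512  # assumed sector size for this toy model


class ToyFTL:
    """Toy LBA -> physical mapping without zero detection or compression."""

    def __init__(self, user_lbas, spare_pages):
        self.user_lbas = user_lbas
        self.total_pages = user_lbas + spare_pages
        # LBA -> stored sector data; each entry stands in for one mapped page.
        self.mapping = {}

    def write(self, lba, data):
        # Any write, zeros included, leaves the LBA mapped.
        self.mapping[lba] = data

    def trim(self, lba):
        # TRIM removes the mapping; nothing is transferred or stored.
        self.mapping.pop(lba, None)

    def read(self, lba):
        # Unmapped LBAs read back as zeros without touching "NAND".
        return self.mapping.get(lba, bytes(SECTOR))

    def free_pages(self):
        # Pages the firmware can use freely for garbage collection.
        return self.total_pages - len(self.mapping)


def fill_with_data(ftl):
    for lba in range(ftl.user_lbas):
        ftl.write(lba, b"\xAA" * SECTOR)


zeroed = ToyFTL(user_lbas=1000, spare_pages=70)
fill_with_data(zeroed)
for lba in range(zeroed.user_lbas):
    zeroed.write(lba, bytes(SECTOR))   # overwrite everything with zeros

trimmed = ToyFTL(user_lbas=1000, spare_pages=70)
fill_with_data(trimmed)
for lba in range(trimmed.user_lbas):
    trimmed.trim(lba)                  # TRIM everything

print(zeroed.read(0) == trimmed.read(0))          # both read back as zeros
print(zeroed.free_pages(), trimmed.free_pages())  # only spare area vs. everything
```

In this model both drives return identical data to the host, but the zeroed one leaves the firmware only the spare pages to work with, while the trimmed one has every page free.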

So while the result is the same, or rather appears the same, when we read from the drive (a trimmed drive reads back just like a zero-filled one), it is not actually the same.

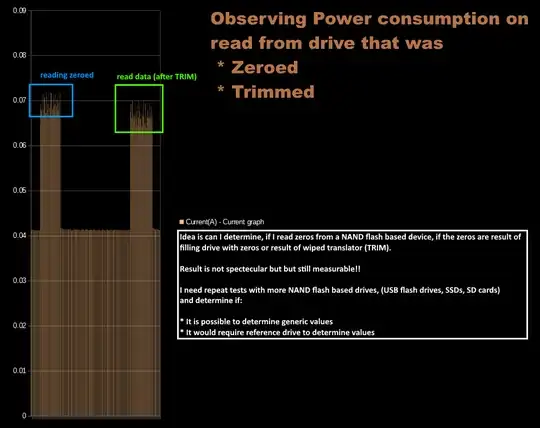

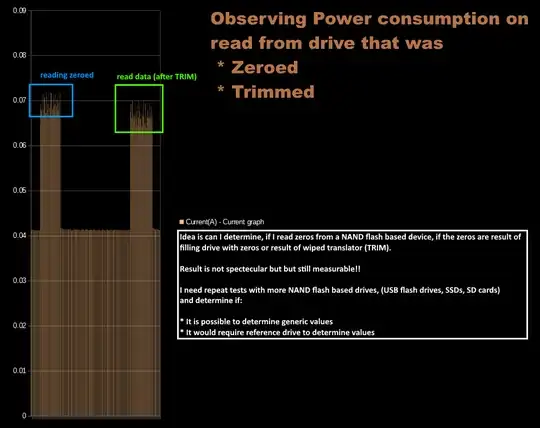

I have done experiments that show a difference in power consumption between reading from a zeroed drive and reading from a 'trimmed' drive. To read actual zeros from the NAND the drive has to do real work: it has to power the charge pumps to reach the voltages required to read the NAND cells.

HOWEVER!

As others suggested, the SSD firmware may be clever enough to detect that you're writing zeros. It may then refrain from actually storing them and instead make a 'note' in its mapping tables that zeros were written to that LBA sector, so that when you later read the sector the controller simply returns a zero-filled sector.

Even if the controller does not apply the above method, it may be compressing data (example). In that case, writing zeros to the entire LBA space may only require the controller to allocate a fraction of the NAND real estate to store the data.
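How little space zeros need once compressed is easy to see on the host side. The sketch below uses Python's `zlib` purely as a stand-in; actual controllers use their own compression engines, and the 4096-byte sector size is an assumption for illustration.

```python
import os
import zlib

SECTOR_SIZE = 4096  # assumed sector size for this illustration

zero_sector = bytes(SECTOR_SIZE)         # zero-entropy data
random_sector = os.urandom(SECTOR_SIZE)  # high-entropy data

# zlib stands in for whatever compression a controller might apply.
zero_compressed = zlib.compress(zero_sector)
random_compressed = zlib.compress(random_sector)

print("zeros: ", len(zero_sector), "->", len(zero_compressed), "bytes")
print("random:", len(random_sector), "->", len(random_compressed), "bytes")
```

The zero-filled sector shrinks to a few dozen bytes at most, while the random sector does not shrink at all, which is why a compressing controller may need only a fraction of the NAND to "store" a zero-filled LBA space.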

Smart firmware makes sense, and so do controllers with a dedicated compression unit: if the manufacturer can limit the amount of data actually written to the NAND, the drive will live longer.

Writing zeros will then have an effect similar to TRIM if the zeros are written to LBA space that previously contained data: the controller detects the zeros, or can compress them to virtually nothing, which means the space that held the original data can be unmapped (and made available to the garbage collector), while virtually no physical space has to be assigned to storing the zeros.

To further support this idea: a colleague of mine was recently researching the translation algorithm of a modern SSD, and for this he wrote a pattern to the drive (0x77 bytes). By observing power consumption he noticed that the SSD was not actually writing: to write data the voltage in the NAND needs to be increased, 'pumped up', even more than when reading. So these controllers appear to detect any low-entropy data (not just zeros) and, for example, keep a table with 'placeholder data' for LBAs that have had zero-entropy data written to them.
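What such a placeholder table might look like can be sketched in a few lines of Python. This is a guess at the general idea, not the firmware of any actual drive: `PlaceholderFTL`, `constant_fill_byte` and the `nand_writes` counter are hypothetical names, and the only "low-entropy" case detected here is a sector consisting of one repeated byte.

```python
SECTOR = 512  # assumed sector size for this toy model


def constant_fill_byte(sector):
    """Return the byte value if the sector is one repeated byte, else None."""
    if sector and sector.count(sector[0]) == len(sector):
        return sector[0]
    return None


class PlaceholderFTL:
    """Toy controller that records constant-fill sectors in a table only."""

    def __init__(self):
        self.placeholders = {}  # LBA -> fill byte, kept in mapping metadata
        self.nand = {}          # LBA -> data that really had to be stored
        self.nand_writes = 0    # number of sectors actually programmed

    def write(self, lba, data):
        fill = constant_fill_byte(data)
        if fill is not None:
            # Low-entropy sector: note the pattern, release any old data.
            self.placeholders[lba] = fill
            self.nand.pop(lba, None)
        else:
            self.placeholders.pop(lba, None)
            self.nand[lba] = data
            self.nand_writes += 1

    def read(self, lba):
        if lba in self.placeholders:
            # Regenerate the sector from the table, no NAND read needed.
            return bytes([self.placeholders[lba]]) * SECTOR
        return self.nand.get(lba, bytes(SECTOR))


ftl = PlaceholderFTL()
ftl.write(0, bytes(range(256)) * 2)   # ordinary data: must be stored
ftl.write(1, b"\x77" * SECTOR)        # 0x77 pattern: table entry only
ftl.write(0, bytes(SECTOR))           # zeros over old data: frees it

print(ftl.nand_writes)                # sectors actually programmed
print(ftl.read(1) == b"\x77" * SECTOR)
```

In this model, overwriting LBA 0 with zeros releases the previously stored data, much as a TRIM would, and the 0x77 pattern never causes a NAND program operation at all.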

So the longer answer is that it depends on the drive's controller and firmware. The more modern the drive, the better the chance that the firmware will detect or compress zeros, and so the effect will be similar to TRIM.

But you cannot just assume a drive will work like this, and even if it does, you could argue it's just a side effect of, for example, on-the-fly compression done by the controller.

One difference is that TRIM is quicker, as no actual data transfer from host to drive is required. Another is that TRIM was designed for what you want to do and is probably more predictable in behavior.