I'm trying to use Lubuntu to recover as much data as possible from a failing 4TB hard disk drive.

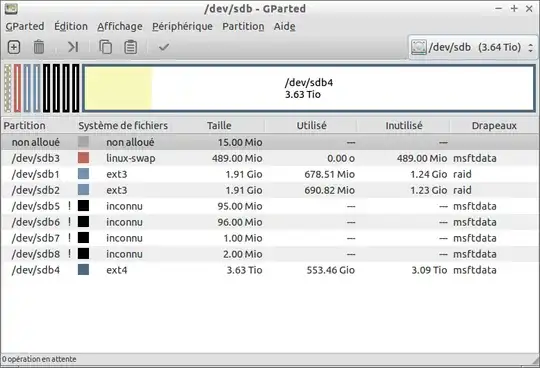

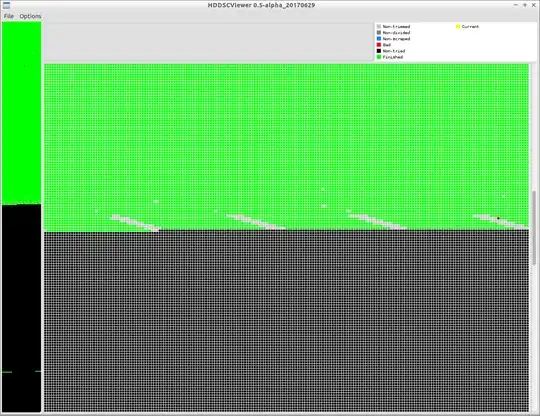

According to GParted, the main partition, formatted as ext4, contains only 553 GB of data. I attempted to make a full clone with HDDSuperClone, a GUI program for Linux that is similar in purpose and functionality to the command-line tool ddrescue. At first it worked well, with few errors or skipped sectors and a good average copy rate (~60 MB/s). But about midway through it started to have more severe issues: some areas were not read at all, forming a pattern of alternating stripes of good and bad reads, which typically indicates that one head is defective. At that point I stopped the recovery.

I had recovered about 1.7 TB, and for quite a while it had been copying nothing but zeroes, so I assumed that all the relevant data was already secured on the recovery drive. But it turns out that the main partition cannot be mounted from the clone (while it can still be mounted from the source drive, albeit with difficulty), and reputable data recovery programs (R-Studio, DMDE) cannot reconstruct the original directory structure or retrieve the original file names. And when I open the recovery drive in WinHex, I can see that it is completely empty beyond 438 GB, which would mean that about 115 GB are missing. I don't understand how that would be possible, since filesystems are supposed to fill the outermost areas of the platters first, where read and write speeds are highest, to optimize HDD performance.
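To double-check the "empty beyond 438 GB" observation without a hex editor, one can search the clone backwards for its last non-zero byte. Below is a minimal Python sketch, assuming the clone is readable as a file or block device; the function name `last_nonzero_end` is hypothetical. It should be run against the healthy recovery drive only, never the failing source, and since it reads the whole zero-filled tail first, it is slow on a mostly empty 4 TB drive.

```python
import os
from typing import BinaryIO, Optional


def last_nonzero_end(f: BinaryIO, chunk_size: int = 1 << 20) -> Optional[int]:
    """Return the offset just past the last non-zero byte, or None if all zero.

    Reads the file backwards in chunks and stops at the first chunk
    (counting from the end) that contains any non-zero byte.
    """
    size = f.seek(0, os.SEEK_END)  # seek() returns the new absolute position
    pos = size
    while pos > 0:
        start = max(0, pos - chunk_size)
        f.seek(start)
        chunk = f.read(pos - start)
        stripped = chunk.rstrip(b"\x00")  # drop trailing zero bytes of this chunk
        if stripped:
            return start + len(stripped)
        pos = start
    return None
```

For example, `last_nonzero_end(open("/dev/sdY", "rb"))` (hypothetical device name) divided by 1e9 gives a figure directly comparable to the 438 GB seen in WinHex. Note that this is an offset from the start of the whole disk, not of the ext4 partition.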

Now, to get the most out of what's left, considering that the drive's condition might deteriorate quickly during the next serious recovery attempt, I'm looking for any method that can analyse the metadata structures and report allocated and unallocated space, so that I could target the recovery at those relevant areas instead of wasting precious time reading gigabytes of zeroes. A small command-line program developed some years ago by the author of HDDSuperClone, ddru_ntfsbitmap (part of ddrutility), does this automatically for NTFS partitions: it analyses the $Bitmap file and generates a “mapfile” for ddrescue that restricts the copy to the sectors marked as allocated (provided that this system file can be read in its entirety). It can also generate a “mapfile” to recover the $MFT first, which is tremendously useful: the MFT contains all the files' metadata and the directory structure, and if it is corrupted or lost, only “raw file carving” type of recovery is possible. But even this highly competent individual doesn't know how to do the same with Linux partitions, as he replied on this HDDGuru thread.
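A ddrescue mapfile is plain text, so once the allocated byte ranges of a partition are known, producing a domain mapfile like the one ddru_ntfsbitmap creates is mostly a formatting exercise. Below is a minimal Python sketch, assuming a list of allocated `(offset, length)` byte ranges already expressed relative to the start of the whole disk; the function name `domain_mapfile` is hypothetical, and the layout (comment lines, a status line, then `pos size status` blocks) follows the mapfile structure described in the GNU ddrescue manual.

```python
from typing import Iterable, List, Tuple


def domain_mapfile(ranges: Iterable[Tuple[int, int]], device_size: int) -> str:
    """Build a GNU ddrescue mapfile marking the given byte ranges as finished ('+').

    Used as a domain mapfile, only the '+' blocks belong to the rescue domain;
    everything else is written as non-tried ('?') and is therefore skipped.
    """
    # Sort, clip to the device size and merge overlapping or adjacent ranges.
    merged: List[List[int]] = []
    for start, length in sorted(ranges):
        end = min(start + length, device_size)
        if end <= start:
            continue
        if merged and start <= merged[-1][1]:
            merged[-1][1] = max(merged[-1][1], end)
        else:
            merged.append([start, end])

    lines = [
        "# Domain mapfile for GNU ddrescue ('+' = allocated, '?' = skip)",
        "# current_pos  current_status  current_pass",
        "0x00000000     ?               1",
        "#      pos        size  status",
    ]

    def block(pos: int, size: int, status: str) -> str:
        return f"0x{pos:08X}  0x{size:08X}  {status}"

    # Emit blocks covering the whole device, filling gaps with '?'.
    pos = 0
    for start, end in merged:
        if start > pos:
            lines.append(block(pos, start - pos, "?"))
        lines.append(block(start, end - start, "+"))
        pos = end
    if pos < device_size:
        lines.append(block(pos, device_size - pos, "?"))
    return "\n".join(lines) + "\n"
```

Saved as, say, `domain.map`, such a file would be passed to ddrescue with `--domain-mapfile` (`-m` for short), e.g. `ddrescue --domain-mapfile=domain.map /dev/sdX clone.img rescue.map`, where all three names are hypothetical.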

So, even if it's not fully automated, I need a procedure that can analyse an ext4 partition quickly and efficiently, so as not to wear the drive further in the process, and report the allocation information either as a text log or graphically. Perhaps a defragmentation program would do the trick?
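For reference, ext4 allocation can be read directly: each block group has its own block bitmap (one bit per block), and the group descriptor table that follows the superblock records where each bitmap lives. Below is a minimal Python sketch, assuming an ext4 filesystem without the meta_bg and bigalloc features; it touches only the superblock, the group descriptor table and the block bitmaps, and returns allocated runs as `(byte offset, length)` pairs. The names `allocated_ranges` and `partition_offset` are hypothetical; `partition_offset` is the partition's start on the whole disk (as shown by GParted or `fdisk -l`), so the output lines up with a whole-disk clone. At one bit per block, the bitmaps of a 4 TB partition with 4 KiB blocks amount to roughly 120 MB of reads.

```python
import struct
from typing import BinaryIO, List, Optional, Tuple

EXT4_SUPER_MAGIC = 0xEF53
INCOMPAT_META_BG = 0x0010   # descriptor table split across meta groups (not handled)
INCOMPAT_64BIT = 0x0080     # 64-bit block numbers and larger group descriptors
BG_BLOCK_UNINIT = 0x0002    # this group's block bitmap was never initialised


def _u16(buf: bytes, off: int) -> int:
    return struct.unpack_from("<H", buf, off)[0]


def _u32(buf: bytes, off: int) -> int:
    return struct.unpack_from("<I", buf, off)[0]


def read_superblock(f: BinaryIO) -> dict:
    """Parse the superblock fields this sketch needs (primary copy at byte 1024)."""
    f.seek(1024)
    sb = f.read(1024)
    if len(sb) < 1024 or _u16(sb, 0x38) != EXT4_SUPER_MAGIC:
        raise ValueError("no ext2/3/4 superblock at offset 1024")
    incompat = _u32(sb, 0x60)
    is64 = bool(incompat & INCOMPAT_64BIT)
    return {
        "blocks_count": _u32(sb, 0x04) | ((_u32(sb, 0x150) << 32) if is64 else 0),
        "first_data_block": _u32(sb, 0x14),
        "block_size": 1024 << _u32(sb, 0x18),  # read from disk, not assumed 4 KiB
        "blocks_per_group": _u32(sb, 0x20),
        "incompat": incompat,
        "is64": is64,
        "desc_size": _u16(sb, 0xFE) if is64 else 32,
    }


def allocated_ranges(f: BinaryIO, partition_offset: int = 0) -> List[Tuple[int, int]]:
    """Return (byte_offset, byte_length) runs of blocks marked used in the bitmaps."""
    sb = read_superblock(f)
    if sb["incompat"] & INCOMPAT_META_BG:
        raise NotImplementedError("meta_bg descriptor layout not handled")
    bs, bpg = sb["block_size"], sb["blocks_per_group"]
    first, total, dsz = sb["first_data_block"], sb["blocks_count"], sb["desc_size"]
    ngroups = (total - first + bpg - 1) // bpg
    # Without meta_bg, the group descriptor table starts in the block after the superblock.
    f.seek((first + 1) * bs)
    gdt = f.read(ngroups * dsz)
    if len(gdt) < ngroups * dsz:
        raise OSError("short read on group descriptor table")

    runs: List[Tuple[int, int]] = []
    run_start: Optional[int] = None
    for g in range(ngroups):
        desc = g * dsz
        bitmap_block = _u32(gdt, desc + 0x00)
        if sb["is64"]:
            bitmap_block |= _u32(gdt, desc + 0x20) << 32
        group_first = first + g * bpg
        nbits = min(bpg, total - group_first)  # the last group may be shorter
        nbytes = (nbits + 7) // 8
        if _u16(gdt, desc + 0x12) & BG_BLOCK_UNINIT:
            bits = bytes(nbytes)  # simplification: treat the whole group as free
        else:
            f.seek(bitmap_block * bs)
            bits = f.read(nbytes)
            if len(bits) < nbytes:
                raise OSError(f"short read on block bitmap of group {g}")
        for i, byte in enumerate(bits):
            base = group_first + 8 * i
            width = min(8, nbits - 8 * i)
            # Fast path: a full byte that matches the current state changes nothing.
            if width == 8 and byte in (0x00, 0xFF) and (byte == 0xFF) == (run_start is not None):
                continue
            for bit in range(width):  # bit 0 (LSB) is the lowest-numbered block
                used = (byte >> bit) & 1
                if used and run_start is None:
                    run_start = base + bit
                elif not used and run_start is not None:
                    runs.append((run_start, base + bit))
                    run_start = None
    if run_start is not None:
        runs.append((run_start, total))
    return [(partition_offset + s * bs, (e - s) * bs) for s, e in runs]
```

The bitmaps mark metadata blocks (inode tables, directory blocks, the journal) as used alongside file data, so the resulting ranges include those structures, which is what a recovery aimed at rebuilding the directory tree needs anyway. The ranges could then be turned into a ddrescue domain mapfile.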

And generally speaking, where are the important metadata structures (“inodes”, if I'm not mistaken) located on a Linux partition? Is there a single file equivalent to the NTFS $Bitmap, or is file/sector allocation determined through a more complex analysis? (In case it's relevant, the drive was in a WDMyCloud network enclosure, factory-configured and running a Linux operating system.)
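On ext4 there is no single $Bitmap-like file. The partition is divided into block groups, each with its own block bitmap, inode bitmap and inode table (where the inodes live), and their locations are recorded in the group descriptor table that follows the superblock. With the flex_bg feature (the ext4 default), the bitmaps and inode tables of several groups (typically 16) are packed together at the start of each flex group, so they have to be looked up in the descriptors rather than computed. Copies of the superblock, on the other hand, sit at predictable places: with the sparse_super feature, only groups 0 and 1 and powers of 3, 5 and 7 carry one. Below is a minimal Python sketch, assuming sparse_super (not sparse_super2) and geometry values such as those printed by `dumpe2fs -h` ("Block count", "Blocks per group", "First block"); the function names are hypothetical.

```python
from typing import List


def is_power_of(n: int, base: int) -> bool:
    """True if n == base**k for some k >= 0."""
    while n > 1 and n % base == 0:
        n //= base
    return n == 1


def has_superblock_copy(group: int) -> bool:
    """sparse_super rule: groups 0 and 1, plus powers of 3, 5 and 7."""
    if group <= 1:
        return True
    return any(is_power_of(group, b) for b in (3, 5, 7))


def backup_superblock_blocks(blocks_count: int, blocks_per_group: int,
                             first_data_block: int = 0) -> List[int]:
    """Block numbers of the backup superblocks (group 0 holds the primary copy)."""
    ngroups = (blocks_count - first_data_block + blocks_per_group - 1) // blocks_per_group
    # A backup sits in the first block of its group.
    return [first_data_block + g * blocks_per_group
            for g in range(1, ngroups) if has_superblock_copy(g)]
```

With 4 KiB blocks and 32768 blocks per group, this yields the familiar list 32768, 98304, 163840, 229376, 294912, and so on. Multiplying a block number by the block size gives its byte offset within the partition, and a backup found this way could be tried with `e2fsck -b <block> -B <block size>` on a copy of the clone.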