I have a Synology NAS on which I’m trying to reduce a RAID6 array from 12 drives down to 11.

I’ve already reduced the size of the file system and the logical volume, but when I try to reduce the RAID array to 11 drives, mdadm just gives me an error.

root@DiskStation:~# mdadm -V

mdadm - v3.4 - 28th January 2016

root@DiskStation:~# mdadm -D /dev/md2

/dev/md2:

Version : 1.2

Creation Time : Sat Jul 12 13:24:29 2014

Raid Level : raid6

Array Size : 19487796480 (18585.01 GiB 19955.50 GB)

Used Dev Size : 1948779648 (1858.50 GiB 1995.55 GB)

Raid Devices : 12

Total Devices : 12

Persistence : Superblock is persistent

Update Time : Tue Feb 4 10:14:55 2020

State : clean

Active Devices : 12

Working Devices : 12

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 64K

Name : DiskStation:2 (local to host DiskStation)

UUID : 263bdad7:eed15299:ff0c7a70:c97ee595

Events : 414186

Number Major Minor RaidDevice State

15 8 5 0 active sync /dev/sda5

1 8 21 1 active sync /dev/sdb5

8 8 37 2 active sync /dev/sdc5

9 8 53 3 active sync /dev/sdd5

4 8 69 4 active sync /dev/sde5

7 8 117 5 active sync /dev/sdh5

6 8 101 6 active sync /dev/sdg5

5 8 85 7 active sync /dev/sdf5

11 8 133 8 active sync /dev/sdi5

12 8 149 9 active sync /dev/sdj5

13 8 165 10 active sync /dev/sdk5

14 8 181 11 active sync /dev/sdl5

root@DiskStation:~# mdadm --grow -n11 /dev/md2

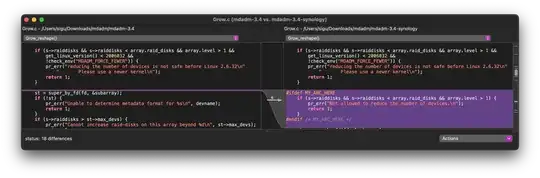

mdadm: Not allowed to reduce the number of devices.

I have very little experience with this sort of thing, and the fact that I can’t find anything online regarding that particular error has left me at a dead end.

I’ve been following these instructions here, and they say I should be prompted to reduce the array size. Do I just need to go ahead and reduce the array size anyway without being prompted? How do I know what size to make it?
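On the size question, my tentative understanding is that a RAID6 array's usable size is (number of devices minus 2) times the Used Dev Size, which matches the output above: 10 × 1948779648 = 19487796480. Below is a minimal sketch of that arithmetic for 11 devices. The variable names are my own, and I'm assuming the same formula still applies after the reshape. The script only prints numbers and does not touch the array.

```shell
#!/bin/sh
# Values copied from the `mdadm -D /dev/md2` output above (units: KiB).
used_dev_size=1948779648
current_devices=12
target_devices=11
parity_devices=2   # assumption: RAID6 uses two devices' worth of parity

# Usable size = (devices - parity devices) * per-device size.
current_size=$(( (current_devices - parity_devices) * used_dev_size ))
target_size=$(( (target_devices - parity_devices) * used_dev_size ))

echo "current array size: ${current_size} KiB"   # should match "Array Size" above
echo "target array size:  ${target_size} KiB"
```

If that assumption holds, the 11-drive size would be 17539016832 KiB, which is presumably the value to pass to `mdadm --grow --array-size`. I have not verified this on Synology's build of mdadm.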