Pure speculation, based on personal experience with similar software (I avoid using RST for a lot of reasons):

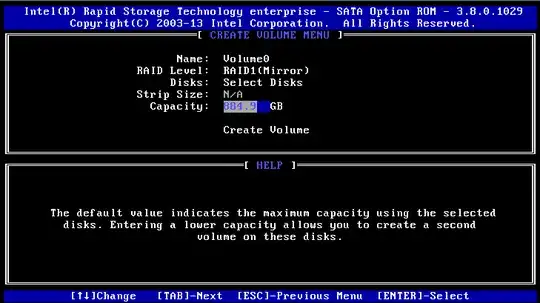

931.5 * 1000 * 1000 / 1024 / 1024 = 888.35 + some rounding errors
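The arithmetic above is easy to check in a few lines of Python. This is only a sketch of my guess: the 931.5 figure is read off the screenshot, and the variable names are my own.

```python
# Size as read from the screenshot (assumed to be the value being rescaled).
shown_size = 931.5

# Guess: the value is multiplied by 1000 twice and divided by 1024 twice,
# i.e. a decimal "mega" is swapped for a binary "mega".
rescaled = shown_size * 1000 * 1000 / 1024 / 1024

print(round(rescaled, 2))  # 888.35
```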

Looks like the old 1000 vs 1024 dualism in hard disk volume labels.

The usual IT convention is that 1k = 1024 and 1M = 1048576 (1024 * 1024). 1024 is a nice round binary number (it is 10000000000 in binary, a 1 followed by ten zeros) and is handy for IT calculations.

Disk manufacturers prefer 1k = 1000 and 1M = 1000000 (exactly as with SI units). This gives bigger numbers on the label, and bigger numbers sell.

When one wants to be sure to imply 1024 multipliers, the Ki, Mi and Gi abbreviations should be used (usually pronounced kibi-, mebi- and gibi-).

https://en.wikipedia.org/wiki/Byte#Multiple-byte_units
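To make the two conventions concrete, here is a minimal sketch that expresses the same byte count in both. The helper names and the disk size of exactly 10^12 bytes are assumptions for illustration, not values taken from the screenshots.

```python
def to_decimal_gb(n_bytes: int) -> float:
    """Size in GB, using the SI multiplier (1 GB = 1000^3 bytes)."""
    return n_bytes / 1000**3


def to_binary_gib(n_bytes: int) -> float:
    """Size in GiB, using the binary multiplier (1 GiB = 1024^3 bytes)."""
    return n_bytes / 1024**3


# Hypothetical disk of exactly 10^12 bytes (an assumption, not a measured value).
nominal_bytes = 10**12

print(f"{to_decimal_gb(nominal_bytes):.2f} GB")   # 1000.00 GB
print(f"{to_binary_gib(nominal_bytes):.2f} GiB")  # 931.32 GiB
```

The same number of bytes comes out about 7% smaller when counted in GiB than in GB, and the gap grows with each prefix step.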

In your particular case:

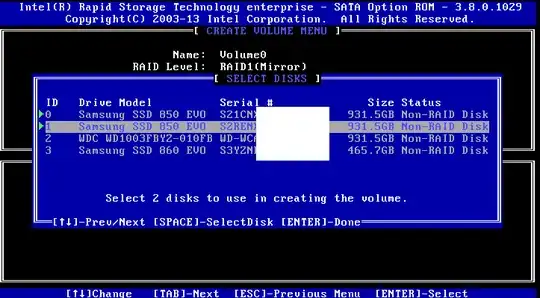

The disks are advertised as 931 GB.

The "SELECT DISKS" menu shows the size in manufacturer units for the sake of correspondence between the label and the number on the screen.

The "CREATE VOLUME" menu shows "IT units", because... whatever the designer of this software package imagined.

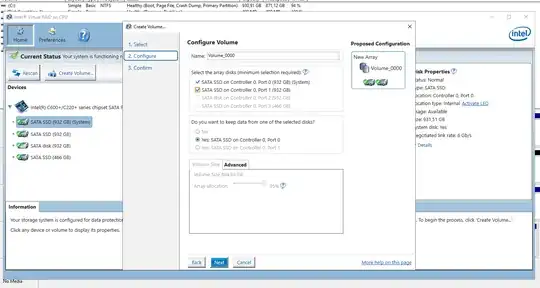

The real overhead of the RAID 1 volume (apart from the half used for redundancy) is something like 512 or 1024 bytes (or possibly 4096, because of Advanced Format) and is completely negligible. The numbers above are not accurate enough to show a difference that small anyway.