TLDR:

- The encrypted LUKS drive unlocks during the boot sequence, but then fails to boot.

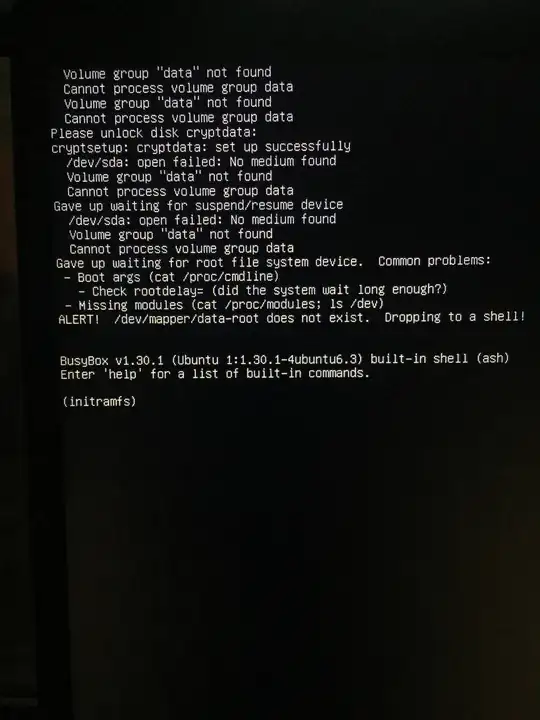

- Drops to the (initramfs) prompt on boot.

- The logical volumes seem to be messed up: data-root (the boot drive) is not found.

- I was using GParted and Disks to partition and format external drives before the boot issue appeared.

- I was installing Qubes on a USB drive before the boot issue appeared.

- Somewhere between partitioning, formatting, and booting the Qubes installer, the logical volumes got screwed up.

Background:

I was formatting and partitioning external hard drives using GParted and Disks. I had to repeat this several times for the same drive because I had messed up the scheme I wanted.

My Disks sidebar showed a host of drives that no longer exist. (I suspect the mapper got messed up during the partitioning.)

After copying the Qubes ISO to a USB drive and trying to boot from it, it showed as corrupted.

I rebooted to my primary drive (ElementaryOS), and that's when the boot issue appeared.

Boot error:

Here is the error:

/dev/sda: open failed: No medium found

/dev/mapper/data-root does not exist.
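For reference when reading that error: LVM logical volumes appear under `/dev/mapper/` as `VG-LV`, with any hyphen inside the VG or LV name doubled. So `data-root` should correspond to logical volume `root` in volume group `data`, which the initramfs expects to find once the LUKS container is unlocked. A minimal Python sketch of that naming rule; the helper `split_dm_name` is hypothetical, written only for illustration, and it ignores LVM's internal layer suffixes such as `-real`:

```python
def split_dm_name(dm_name: str) -> tuple[str, str]:
    """Split an LVM device-mapper name such as 'data-root' into (vg, lv).

    LVM joins the VG and LV names with a single '-' and doubles any '-'
    inside either name, so the separator is the first '-' that is not
    part of a '--' pair.
    """
    i = 0
    while i < len(dm_name):
        if dm_name[i] == "-":
            if i + 1 < len(dm_name) and dm_name[i + 1] == "-":
                i += 2  # escaped hyphen inside a name; skip the pair
                continue
            vg = dm_name[:i].replace("--", "-")
            lv = dm_name[i + 1:].replace("--", "-")
            return vg, lv
        i += 1
    raise ValueError(f"no VG/LV separator in {dm_name!r}")


print(split_dm_name("data-root"))
```

Run as-is, it prints `('data', 'root')`.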

I tried:

Booting from an ElementaryOS USB and accessing the primary drive (to see if I could fix the mapper).

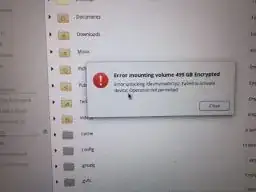

Using Files to unlock the primary encrypted drive, I get the error:

Error unlocking /dev/nvme0n1p2: Failed to activate device: Operation not permitted

After the error, the drive disappears from the devices list in Files.

I cloned the drive to a USB drive and tried mounting the encrypted clone. I get the same error on the clone.
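One way to confirm that both the original partition and the clone still carry an intact LUKS header is to read the header's magic bytes, version, and UUID directly. A minimal Python sketch, assuming read access to the device (typically root); it relies on the LUKS1 and LUKS2 on-disk formats both starting with the magic `LUKS\xba\xbe`, storing a big-endian 16-bit version at offset 6, and storing a 40-byte UUID field at offset 168. The device paths in the comment are placeholders to adapt:

```python
import struct
import sys

LUKS_MAGIC = b"LUKS\xba\xbe"


def read_luks_header(path: str) -> tuple[int, str]:
    """Return (version, uuid) from the LUKS header at the start of `path`.

    Raises ValueError if the first bytes are not a LUKS header.
    """
    with open(path, "rb") as dev:
        hdr = dev.read(208)  # UUID field: 40 bytes starting at offset 168
    if len(hdr) < 208 or not hdr.startswith(LUKS_MAGIC):
        raise ValueError(f"{path}: no LUKS header found")
    (version,) = struct.unpack(">H", hdr[6:8])
    # The UUID is stored as NUL-padded ASCII text.
    uuid = hdr[168:208].split(b"\0", 1)[0].decode("ascii")
    return version, uuid


if __name__ == "__main__":
    # Placeholder paths, e.g.: sudo python3 luks_hdr.py /dev/nvme0n1p2 /dev/sdX2
    for p in sys.argv[1:]:
        print(p, *read_luks_header(p))
```

A byte-for-byte clone should report the same version and UUID as the original.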

What I suspect:

The mapper is screwed up: when the drive is opened, it decrypts, but the mapping is wrong.

Question:

Do you think it is indeed a mapper problem, or something else? How would I fix the mapper in this situation?

If I can't fix the mapper, how would I access the data on the encrypted drive and copy it to a backup?

UPDATE:

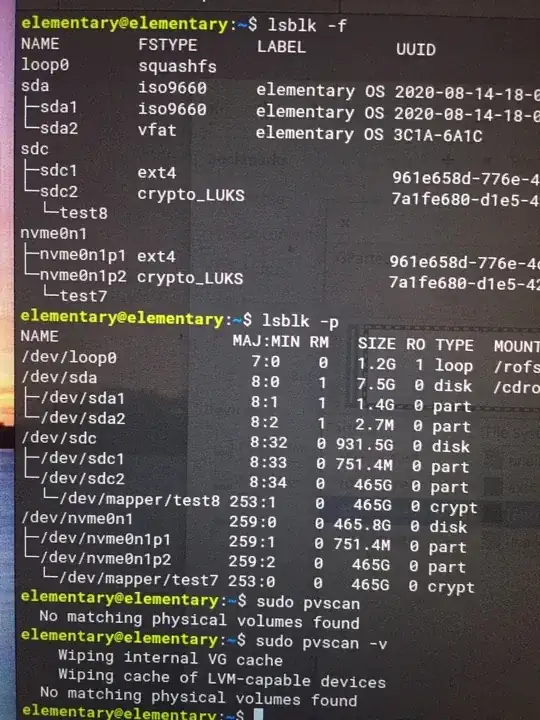

Thanks to user1686, I've been able to determine that the problem seems to be that there are no logical volumes once LUKS decrypts the device.

I cloned the drive to an external HD. I opened the primary drive (nvme0n1p2) in the terminal using cryptosetup luksOpen, with test7 as the mapping name. It decrypts.

I ran pvscan and got "No matching volumes found".

I repeated this for the cloned drive, using test8. Same result.
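Since pvscan finds nothing, a possible next diagnostic is to look at what the decrypted mapping actually starts with: an LVM2 label, a bare filesystem, or nothing recognizable. The Python sketch below checks a few well-known on-disk signatures: the LVM2 label (`LABELONE`, with type `LVM2 001` 24 bytes later) in one of the first four 512-byte sectors, the ext2/3/4 superblock magic `0xEF53` at byte 1080, and the XFS magic `XFSB` at byte 0. The list is deliberately incomplete, and `blkid` or `file -s` on the same device should give a broader answer. It only reads; `/dev/mapper/test7` and `/dev/mapper/test8` are the mapping names used above:

```python
import sys

SECTOR = 512


def identify(path: str) -> str:
    """Guess what the first 4 KiB of a (decrypted) block device contain.

    Only a few common signatures are checked; anything else is reported
    as unknown.
    """
    with open(path, "rb") as dev:
        head = dev.read(4096)

    # LVM2 physical volume: label in one of the first 4 sectors, starting
    # with "LABELONE"; the type field "LVM2 001" sits 24 bytes later.
    for s in range(4):
        off = s * SECTOR
        if head[off:off + 8] == b"LABELONE" and head[off + 24:off + 32] == b"LVM2 001":
            return f"LVM2 physical volume (label in sector {s})"

    # ext2/3/4: superblock at byte 1024, s_magic 0xEF53 (little-endian) at +56.
    if head[1080:1082] == b"\x53\xef":
        return "ext2/3/4 filesystem"

    # XFS: superblock magic "XFSB" at byte 0.
    if head[:4] == b"XFSB":
        return "XFS filesystem"

    if head and not any(head):
        return "first 4 KiB are all zeros"
    return "unknown (no LVM2, ext, or XFS signature found)"


if __name__ == "__main__":
    # e.g.: sudo python3 identify.py /dev/mapper/test7 /dev/mapper/test8
    for p in sys.argv[1:]:
        print(f"{p}: {identify(p)}")
```

If it reports a filesystem rather than an LVM label, the decrypted device could likely be mounted read-only directly (for example `mount -o ro /dev/mapper/test7 /mnt`) to copy the data off, without going through LVM at all.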

So, if I'm able to decrypt the device but there are no logical volumes inside, how would I access the data?

(At this point, I just want to get the data, and then reinstall a fresh system.)

Here's what I'm seeing in the terminal: