I have been boy and man handling computer data for 59 1/2 years, and for the past 40 resolving lost-data problems at various levels: dodgy switches and relays, torn paper tape and moth-eaten cards, stretched tapes and cables, bent or crazed disks, and flaky chips. There are some amazing stories I cannot tell, or you would doubt my sanity, or that of the people who employed me or of those who infected their data.

So the first piece of advice is to cull the cause, even if that is the hackneyed "Did you switch off at the wall?"

The next step is to assess the chance of recovery against the cost of doing it again.

So this was an interesting challenge, and the answers are not good.

If you think there is a chance that the editing device holds a hidden deleted copy, and the cost of replacement is exceptionally high, then it may be worth paying to have the powered-down device forensically hooked into a diagnostic system, where the disk can be mirrored and scanned for deleted %PDF- headers.
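To illustrate what "scanned for deleted %PDF- headers" means in practice, here is a minimal carving sketch. It assumes the disk has already been mirrored to a raw image file (the path is hypothetical) and only finds copies that are still stored contiguously and not yet overwritten. It pairs each %PDF-x.y header with the last %%EOF before the next header, because every incremental save appends its own %%EOF.

```python
import mmap
import re

# "%PDF-" followed by a version such as 1.7 marks the start of a PDF file.
HEADER_RE = re.compile(rb"%PDF-\d\.\d")
# Every save, including each incremental update, ends with "%%EOF".
EOF_RE = re.compile(rb"%%EOF")


def find_pdf_candidates(image_path):
    """Return (start, end) byte offsets of possible PDFs in a raw disk image.

    Heuristic: each header is paired with the last %%EOF before the next
    header; end is None when no %%EOF follows (a truncated fragment).
    """
    with open(image_path, "rb") as f, \
            mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        starts = [m.start() for m in HEADER_RE.finditer(mm)]
        eofs = [m.end() for m in EOF_RE.finditer(mm)]

    candidates = []
    for i, start in enumerate(starts):
        limit = starts[i + 1] if i + 1 < len(starts) else None
        ends = [e for e in eofs if e > start and (limit is None or e <= limit)]
        candidates.append((start, ends[-1] if ends else None))
    return candidates
```

Each (start, end) pair can then be cut out of the image and tried in a PDF reader; fragmented or partly overwritten files will still fail, which is why the odds on modern disks are poor.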

Modern disks tend either to make that impossible (solid state) or at least less easy than it used to be, because they rapidly reuse released space as large storage cache, thus overwriting the lost data.

Now to the heart of the matter: the "Questionable" saved file.

It has retained much of the desired data. HOWEVER, by comparison with the unedited source file, we can say the loss was highly significant.

The source PDF had already been edited twice (one new cover? and one minor tweak), so it carried residual oddities (not unusual, but best avoided) before any further edits were added:

- core: /Size 39679 objects
- edit: /Size 39692
- edit: /Size 39694

If I restructure that source file, the working count is optimised to /Size 37546 objects, indicating there was some redundancy, but again that is not unusual.
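Those per-revision /Size figures can be read straight from the raw file, since each trailer (or cross-reference stream dictionary) declares its own /Size. A minimal sketch, assuming the dictionaries are not damaged; note that a linearized file puts its first-page trailer near the start, so file order is not always save order.

```python
import re

# "/Size" followed by an integer; this skips the "/Size [...]" arrays
# used by sampled functions, which are not object counts.
SIZE_RE = re.compile(rb"/Size\s+(\d+)")


def size_per_revision(pdf_bytes):
    """List the /Size values in file order (original save, then updates)."""
    return [int(m.group(1)) for m in SIZE_RE.finditer(pdf_bytes)]


def growth(sizes):
    """Objects added between consecutive revisions."""
    return [b - a for a, b in zip(sizes, sizes[1:])]
```

Usage: `size_per_revision(open("source.pdf", "rb").read())` (file name hypothetical). For the three values listed above, `growth` gives 13 and 2 new objects per edit.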

With additions over 2 months at more than a few a day, the count should have risen towards 40,000 or more. However, the file reports /Size 70957, confirming that at one time it must have been excessively large. So the extra objects, roughly 31,000 of them (70957 - 39694 = 31263), should all be in the retained file, yet that file is comparatively smaller than required.
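To put a number on that shortfall, one can compare the highest declared /Size with the object numbers actually defined as "N G obj" in the file body. A rough sketch: objects packed inside compressed object streams have no "obj" keyword and are not seen, so the "present" count is a lower bound and "missing" an upper bound.

```python
import re

SIZE_RE = re.compile(rb"/Size\s+(\d+)")
# An indirect object definition such as "12 0 obj".
OBJ_RE = re.compile(rb"(\d+)\s+(\d+)\s+obj\b")


def object_coverage(pdf_bytes):
    """Return (declared /Size, distinct objects defined, objects missing)."""
    sizes = [int(m.group(1)) for m in SIZE_RE.finditer(pdf_bytes)]
    declared = max(sizes) if sizes else 0
    # A set, because incremental updates may redefine the same object number.
    present = {int(m.group(1)) for m in OBJ_RE.finditer(pdf_bytes)}
    # /Size is one more than the highest object number; object 0 is never defined.
    in_range = present & set(range(1, declared))
    missing = max(declared - 1 - len(in_range), 0)
    return declared, len(present), missing
```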

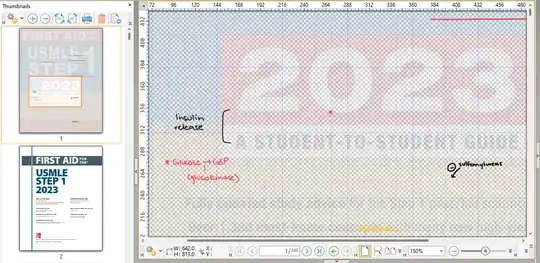

As a test (for my own comparison), I recovered just one page of annotations (without knowing which page number it covered). It may not be typical, but it amounts to about 120 KB for one page.

It may not make sense here, as you cannot see the components out of context, but it is the last page of changes (see the date), presumably on a right-hand page.

We can place it over that new cover page (still not the correct, unknown, deleted page).

In summary, my gut feeling is that the slow, costly recovery and the low number of retained objects (count of /Annots = approx. 57, i.e. pages?) suggest that recovery is more expensive than the labour to "do it again".
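A count like that /Annots figure can be approximated from the raw bytes. This sketch counts /Annots keys and the annotation references held in inline arrays; an /Annots given as an indirect reference counts as a key with no inline references. It assumes the page dictionaries are uncompressed, and a page redefined by an incremental update is counted once per revision, so treat the result as an estimate.

```python
import re

# /Annots followed by either an inline array or an indirect reference.
ANNOTS_RE = re.compile(rb"/Annots\s*(\[[^\]]*\]|\d+\s+\d+\s+R)")
REF_RE = re.compile(rb"\d+\s+\d+\s+R")


def annots_summary(pdf_bytes):
    """Return (number of /Annots keys, annotation refs in inline arrays)."""
    keys = 0
    refs = 0
    for m in ANNOTS_RE.finditer(pdf_bytes):
        keys += 1
        value = m.group(1)
        if value.startswith(b"["):
            refs += len(REF_RE.findall(value))
    return keys, refs
```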

Tantalisingly, there is a good group of objects numbered 67961 to 70957, so those should be recoverable.
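Groups like that can be found by collapsing the object numbers present in the file into runs of consecutive values; a long run near the top of the numbering stands out at once. A minimal sketch, reusing the same "N G obj" scan (so objects inside compressed object streams are again not seen):

```python
import re

OBJ_RE = re.compile(rb"(\d+)\s+(\d+)\s+obj\b")


def object_numbers(pdf_bytes):
    """Distinct object numbers defined as 'N G obj' in the raw file."""
    return {int(m.group(1)) for m in OBJ_RE.finditer(pdf_bytes)}


def contiguous_runs(numbers, min_length=1):
    """Collapse numbers into (first, last) runs of consecutive values."""
    runs = []
    for n in sorted(set(numbers)):
        if runs and n == runs[-1][1] + 1:
            runs[-1][1] = n          # extend the current run
        else:
            runs.append([n, n])      # start a new run
    return [(a, b) for a, b in runs if b - a + 1 >= min_length]
```

Raising `min_length` (say to a few hundred) filters out scattered survivors and leaves only the substantial groups worth attempting.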

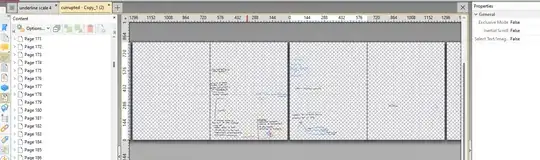

The best recovery application I found (https://superuser.com/a/1808687/1769247) only shows objects from a nominal page number of 180 up to 240 out of the total of 849. It actually reproduces over twice as many additional image pages because, in pictorial terms, some will be negatives of the soft masking; so pages 850-1845 are pieces that may be sub-image duplicates of pages 180-240, or may be bits of others.
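Soft masks are stored as separate greyscale image objects and referenced from the main image through /SMask, which is why extractors tend to output them as extra "negative" pictures. This sketch separates the two by scanning image stream dictionaries in the raw file (stream objects cannot live inside compressed object streams, so they are visible to a byte scan). It is a heuristic: it assumes the dictionaries are intact and does not parse the PDF properly.

```python
import re

# An object header, its dictionary (not crossing "endobj"), then "stream".
STREAM_DICT_RE = re.compile(
    rb"(\d+)\s+\d+\s+obj\s*<<((?:(?!endobj).)*?)>>\s*stream", re.DOTALL)
IMAGE_RE = re.compile(rb"/Subtype\s*/Image\b")
SMASK_RE = re.compile(rb"/SMask\s+(\d+)\s+\d+\s+R")


def classify_images(pdf_bytes):
    """Return (real image object numbers, soft-mask image object numbers)."""
    images, masks = set(), set()
    for m in STREAM_DICT_RE.finditer(pdf_bytes):
        num, body = int(m.group(1)), m.group(2)
        if IMAGE_RE.search(body):
            images.add(num)
            # Any image referenced as /SMask is a mask, not a picture.
            masks.update(int(s) for s in SMASK_RE.findall(body))
    return sorted(images - masks), sorted(images & masks)
```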

Here is a 30-day link to the fixed remaining parts: https://filetransfer.io/data-package/nbXvfSBp#link

Recommendations for going forward

Split the master file into 4 convenient parts. This has a threefold benefit:

- Each part will be faster to render and more responsive under heavy annotation.

- It is an opportunity to fix any foundation problems in the source file.

- It limits any future catastrophic loss to only 25% at a time.
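As a starting point for the split, here is a small sketch that divides a page count into contiguous, near-equal ranges. It assumes the 849 total reported by the recovery tool is the master's page count, and an equal split is only a default: "convenient" parts would ideally follow chapter or section boundaries. The command lines use qpdf's `--empty --pages` syntax as one example of a splitter; qpdf is not mentioned in the original answer, and any tool that takes page ranges will do.

```python
import shlex


def split_ranges(page_count, parts=4):
    """Split pages 1..page_count into `parts` contiguous, near-equal ranges."""
    base, extra = divmod(page_count, parts)
    ranges, start = [], 1
    for i in range(parts):
        size = base + (1 if i < extra else 0)  # spread the remainder over the first parts
        ranges.append((start, start + size - 1))
        start += size
    return ranges


def qpdf_commands(src, page_count, parts=4):
    """Build one qpdf command line per part (output names are hypothetical)."""
    return [f"qpdf --empty --pages {shlex.quote(src)} {a}-{b} -- part{i}.pdf"
            for i, (a, b) in enumerate(split_ranges(page_count, parts), start=1)]
```

For 849 pages this gives 1-213, 214-425, 426-637 and 638-849.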

Reconsider the annotation software's ability to work with the massive amounts of memory required, and the potential for a "brownout" loss, where any temporary glitch can destroy an open editing file.

Work on a reliable local disk system, such as a workstation, and never on a synced cloud drive.
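One simple safeguard against a brownout destroying the only copy is to snapshot the working file before each annotation session, ideally to a different local drive. This is a minimal sketch, not something the original answer prescribes; the paths are hypothetical. It copies to a temporary ".part" name first and then renames, so a copy interrupted halfway never carries the final name.

```python
import shutil
import time
from pathlib import Path


def snapshot(working_file, backup_dir):
    """Copy the working PDF to a timestamped file in backup_dir."""
    src = Path(working_file)
    dest_dir = Path(backup_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    dest = dest_dir / f"{src.stem}-{stamp}{src.suffix}"
    tmp = dest.with_name(dest.name + ".part")
    shutil.copy2(src, tmp)  # copy under a temporary name first...
    tmp.replace(dest)       # ...then rename into place in one step
    return dest
```

Two snapshots taken within the same second share a name, and the later one replaces the earlier.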

Don't use the repaired file itself; simply use it as a guide for repeating the task. That may include cutting and pasting objects in a PDF GUI editor, which should avoid carrying over any other faults.

Case-specific possibility.

You may find the page numbers are out of sync but in the correct order, or, with luck, in the perfect order for transfer into the master file. If that is the case, there are command-line tools that "might" speed up the transfer by exporting /Annots from the recovery file as, say, JSON and then importing them into a suitably optimised master file by page number. One such tool may be Coherent cpdf, as it has an optimiser and /Annots export/import, but I cannot say whether it will handle this issue well enough.
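If the pages are merely offset, the remapping step between export and import is simple arithmetic: find one page you can identify in both files, take offset = master page - recovered page, and shift every record. This sketch assumes a hypothetical export shape, a JSON list of objects each with a 1-based "page" key. It is not cpdf's actual format, so the key name would need adapting to whatever the chosen tool emits.

```python
import json


def remap_annotation_json(export_json, offset, master_pages):
    """Shift each record's page by offset; keep pages that exist in the master.

    Returns (remapped JSON text, list of records that fell out of range).
    """
    kept, dropped = [], []
    for rec in json.loads(export_json):
        moved = {**rec, "page": rec["page"] + offset}
        if 1 <= moved["page"] <= master_pages:
            kept.append(moved)
        else:
            dropped.append(moved)
    return json.dumps(kept, indent=2), dropped
```

Anything in the dropped list is a sign the offset is wrong or the order is not as clean as hoped, and is worth checking by eye before importing.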