I'm trying to save a Reddit page for OFFLINE viewing as a single HTML file, EXACTLY as it's displayed in the browser, after I have manually expanded some comment threads. This is a special case of the general question of how to save the entire web DOM in its current state while preserving the CSS effects and layout. Many posts across the Stack Exchange network ask this general question, for example:

- How can I dump the entire Web DOM in its current state in Chrome?

- Is it possible to dump the DOM with JavaScript and preserve CSS effects and layout?

- How to save a webpage at its current state with images on Chrome?

- Save the current webpage in a single html file format

- Can I capture and save the current state of a webpage using javascript

- How to show/save the HTML including pictures as currently shown by the web browser?

- How to get a perfect local copy of a web page?

- How to save a web app to static HTML?

- Save website containing javascript after it was interpreted

- How do I save a webpage without triggering a reload or re-executing JS?

- How do I completely download a web page, while preserving its functionality? [duplicate]

Almost all answers are of one of the following forms:

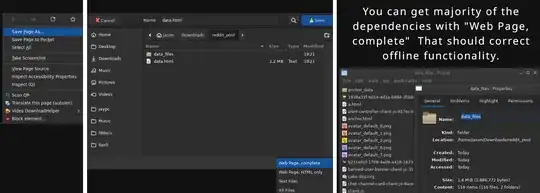

- Right click and select "Save as..." and then save as either "Web Page, Complete (*.htm;*.html)" or "Web page, Single File (*.mhtml)".

- Open Chrome DevTools and copy the entire HTML ("Copy outerHTML") from the Elements tab.

- You'll never be able to save a file that looks exactly as the live website version due to many links being "relative" links, and many links to external scripts can be contained inside CSS and JS files.

- Use a tool such as HTTrack. (As far as I know, however, HTTrack doesn't support saving everything in a single HTML file.)

- Saving a webpage as a single HTML file exactly as it appears to the user during a live render is simply impossible for many websites.

- Use a browser extension, such as "Single File" (the developer's GitHub page is here), "Save Page WE", or "WebScrapBook".

- Try the "WebRecorder" Chrome extension.
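To make the "relative links" objection concrete: a relative link only makes sense in combination with the URL the page was loaded from, so a saved copy has to resolve each one against that original URL. Here is a minimal Python sketch of that step using only the standard library. The function name `absolutize` and the example URLs are made up for illustration.

```python
from urllib.parse import urljoin


def absolutize(base_url, link):
    """Resolve a possibly relative link against the URL the page was saved from."""
    # urljoin leaves links that are already absolute untouched.
    return urljoin(base_url, link)


# Hypothetical page URL, used only to illustrate the resolution rules.
page_url = "https://www.reddit.com/r/example/comments/abc/"
root_relative = absolutize(page_url, "/static/style.css")
path_relative = absolutize(page_url, "img/a.png")
already_absolute = absolutize(page_url, "https://cdn.example.com/x.js")
```

Resolving the links only tells you where each resource lives. It does not make the resources available offline, which is the part I'm stuck on.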

Several of these approaches do manage, to some degree, to save the webpage's layout as a single HTML file exactly as it appears when rendered live, but there is a HUGE downside: the saved HTML file cannot be viewed OFFLINE. Offline viewing is precisely what I'm after, and is the crux of my issue.

For example, opening Chrome DevTools and copying the entire outerHTML from the Elements tab does allow the user to save the page exactly as it looks when rendered live, but as soon as the user opens the HTML file offline, none of the external scripts can load, and so the entire comment section of the Reddit page doesn't display at all. I inspected the HTML file manually and found that the comments themselves are present in the file, but they don't render when the file is loaded, since they depend on external scripts that dictate how they are displayed.
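The manual inspection described above can be partly automated. Below is a minimal Python sketch (standard library only) that lists the resources a saved HTML file would still try to fetch over the network. The names `ExternalResourceFinder` and `find_external_resources` are my own, and the set of tags it checks is a simplification, not a complete list of everything a browser loads.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class ExternalResourceFinder(HTMLParser):
    """Collects URLs that a saved page would still need to fetch."""

    # (tag, attribute) pairs that commonly point at separately loaded files.
    # Simplification: not every <link href> is a stylesheet, and CSS/JS files
    # can reference further resources that this parser never sees.
    RESOURCE_ATTRS = {("script", "src"), ("link", "href"), ("img", "src")}

    def __init__(self):
        super().__init__()
        self.external = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if (tag, name) in self.RESOURCE_ATTRS and value:
                # data: URIs are embedded in the file, so they work offline.
                if urlparse(value).scheme != "data":
                    self.external.append((tag, value))


def find_external_resources(html_text):
    """Return a list of (tag, url) pairs for resources not embedded in the HTML."""
    finder = ExternalResourceFinder()
    finder.feed(html_text)
    return finder.external
```

Running `find_external_resources` on the text of the copied outerHTML should show the script and stylesheet URLs that fail to load offline, while the comment text itself sits in the markup.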

A solution (almost...)

In my experience, the SingleFile Chrome extension comes closest to what I'm after. It saves the page precisely as it looks to the user during a live render (even when viewed offline), and I've found it to be better than both the "Save Page WE" and "WebScrapBook" extensions. SingleFile handles many sites flawlessly, but it fails miserably when attempting to save a Reddit page that has a huge comment thread. In such cases, the extension consumes too much memory and crashes the tab with an Out of Memory error. The extension works well on Reddit posts with a very small comment section, but, rather mockingly, the posts I want to save almost always have a very large one, and SingleFile can't handle them.
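As far as I understand it, a single-file copy can work offline because the resources are embedded in the HTML itself, for example as base64 `data:` URIs, rather than referenced by URL. The Python sketch below illustrates that general idea only. It is not SingleFile's actual code, and `to_data_uri` is a hypothetical helper name.

```python
import base64
import mimetypes


def to_data_uri(content, filename):
    """Encode raw resource bytes as a data: URI that can be inlined into HTML."""
    # Guess the MIME type from the file extension; fall back to a generic type.
    mime, _ = mimetypes.guess_type(filename)
    mime = mime or "application/octet-stream"
    encoded = base64.b64encode(content).decode("ascii")
    return f"data:{mime};base64,{encoded}"


# Base64 turns every 3 bytes into 4 characters, so inlined data grows by about a third.
inlined = to_data_uri(b"x" * 300, "blob.bin")
payload_length = len(inlined.split(",", 1)[1])
```

That size overhead is one reason a single-file copy of a page with many embedded resources can become large.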

The SingleFile developer has a command-line variant of the tool on his GitHub page, but it just launches a headless browser and downloads the requested URL. This approach is useless in my case, since I want to save the Reddit page with the modifications that I've made manually (i.e., with the desired comment threads expanded). Moreover, I've had the same Out of Memory issue with this approach.

Dirty workaround

I've found that a super dirty workaround is to simply save the page as a PDF, but I don't want a PDF. I want an HTML file.

Any ideas on how to save a Reddit page for offline viewing, even in instances wherein the comment section is rather large?