I'm using an AMD Ryzen 7 7800X3D CPU (which has integrated Radeon graphics) and an NVIDIA RTX 3090 video card. Windows correctly identifies them as the "power saving" and "high performance" graphics adapters, respectively, and I can configure individual applications to use a specific one of the two.
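For anyone trying to reproduce this: as far as I know, the per-application choice made on the Graphics settings page is stored as a string value under `HKEY_CURRENT_USER\Software\Microsoft\DirectX\UserGpuPreferences`. The value name is the full path of the executable, and the data is `GpuPreference=N;`, where 1 means power saving and 2 means high performance. The sketch below writes that value for Edge. Both the registry layout and the default Edge install path are assumptions on my part, so check them against your own system before relying on it.

```python
"""Sketch: write a per-app GPU preference the way the Windows Graphics
settings page appears to (registry layout is an assumption)."""
import sys

# Assumed location of the per-user GPU preference list.
REG_PATH = r"Software\Microsoft\DirectX\UserGpuPreferences"

# Assumed meaning of the GpuPreference numbers.
PREFERENCES = {"default": 0, "power_saving": 1, "high_performance": 2}

# Assumed default Edge install path; adjust for your system.
EDGE_EXE = r"C:\Program Files (x86)\Microsoft\Edge\Application\msedge.exe"


def preference_string(choice: str) -> str:
    """Build the REG_SZ data, e.g. 'GpuPreference=1;' for power saving."""
    return f"GpuPreference={PREFERENCES[choice]};"


def set_gpu_preference(exe_path: str, choice: str) -> None:
    """Store the preference for exe_path under HKCU (Windows only)."""
    if sys.platform != "win32":
        raise OSError("the registry is only available on Windows")
    import winreg  # standard library, but only present on Windows

    with winreg.CreateKey(winreg.HKEY_CURRENT_USER, REG_PATH) as key:
        # Value name: full executable path. Data: the preference string.
        winreg.SetValueEx(key, exe_path, 0, winreg.REG_SZ,
                          preference_string(choice))


if __name__ == "__main__":
    print(preference_string("power_saving"))
    if sys.platform == "win32":
        set_gpu_preference(EDGE_EXE, "power_saving")
```

Edge has to be fully closed and restarted before a changed preference takes effect.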

The reasoning

I use Edge as my browser, and occasionally, while I'm watching a video, my NVIDIA driver crashes. Windows reports a TDR (Timeout Detection and Recovery, meaning the GPU stopped responding in time) and successfully resets the driver. I'd therefore like Edge to use the integrated AMD GPU instead, in the hope of avoiding these crashes.

The problem

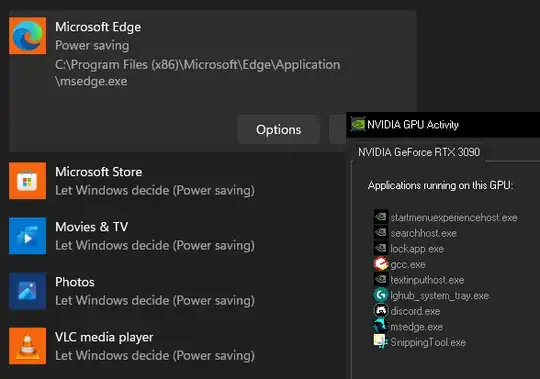

To the best of my ability, I have configured Windows to run Edge using the integrated GPU, and if I view the about://gpu page within the browser, it appears to think it's using that one indeed because it lists details of that one and AMD-specific bug mitigations that are active. However, I can still experience the crash, and NVIDIA's "GPU activity" monitor (which can show you which applications are utilizing the NVIDIA GPU) still shows that it's actually utilizing the NVIDIA card in some way. See the picture below.

VLC was also running when I took the screenshot, and it is not listed in the NVIDIA GPU activity monitor.

If I disable hardware acceleration in Edge, the crashes stop, but the browser is somewhat less comfortable to use with software rendering, so I'd like to avoid that option if possible.

I also tried the "--use-adapter-luid" command-line switch. It does correctly select the AMD adapter, but according to the GPU activity monitor, Edge still uses the NVIDIA adapter in some way.

So, short of turning off hardware acceleration entirely, what can I do to force Edge to stop using the NVIDIA GPU? Failing that, why does it use both GPUs at all? What mechanism is at play here?