I have a 100 Mbit switch connected to three 100 Mbit cameras and a 100 Mbit computer. The bandwidth limitation comes from the cables in use and cannot be changed, because I am working in an industrial/automotive environment.

┌------------ Camera

Computer-----Switch---------- Camera

└------------ Camera

The cameras stream RTP over UDP. All cameras are configured for constant bitrate at 24 Mbit/s each, so about 72 Mbit/s in total.

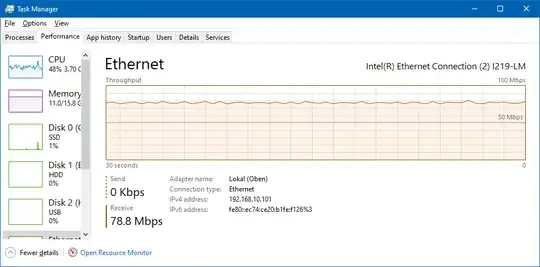

Task Manager confirms that the expected bandwidth is used:

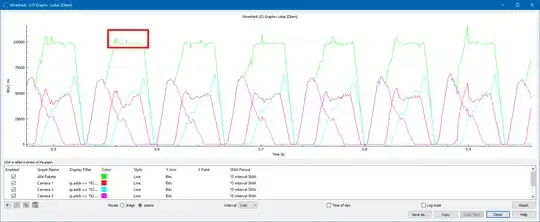

When I run a Wireshark IO graph analysis, I can see that while the average bandwidth stays below 100 Mbit/s, there are spikes that exceed 100 Mbit/s, causing packet loss and making the stream undecodable. These spikes come from the cameras, which capture an image every 33 ms and send it, then stay silent until the next image is captured.
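To put rough numbers on these bursts, here is a back-of-the-envelope sketch. It assumes (I have not measured this) that each camera sends a whole frame at full line rate and that all three cameras start sending at the same moment, which is the worst case. The function name `burst_estimate` and its result keys are just illustrative, and Ethernet/IP/UDP/RTP overhead is ignored.

```python
# Back-of-the-envelope burst estimate.
# Assumptions (not measured): every camera sends a complete frame at full
# line rate, and all cameras start their burst at the same time.
LINK_RATE = 100e6        # bit/s, every port on the switch
CAMERA_RATE = 24e6       # bit/s, configured constant bitrate per camera
FRAME_INTERVAL = 0.033   # s, one image every 33 ms
CAMERAS = 3


def burst_estimate(link_rate=LINK_RATE, camera_rate=CAMERA_RATE,
                   frame_interval=FRAME_INTERVAL, cameras=CAMERAS):
    # Data produced per frame by one camera at the configured bitrate.
    frame_bits = camera_rate * frame_interval
    # Time one camera needs to push that frame onto its 100 Mbit link.
    burst_time = frame_bits / link_rate
    # During the burst the switch receives on all camera ports at once,
    # but can only forward at link_rate towards the computer.
    excess_rate = max(0.0, cameras * link_rate - link_rate)
    return {
        "average_load_bps": cameras * camera_rate,
        "frame_bytes": frame_bits / 8,
        "burst_ms": burst_time * 1e3,
        "buffer_bytes": excess_rate * burst_time / 8,
    }


for name, value in burst_estimate().items():
    print(f"{name}: {value:,.2f}")
```

Under these assumptions the average load is fine, but during each burst the switch would have to buffer roughly two frames' worth of data on the port facing the computer. If its buffer is smaller than that, packets are dropped even though the average stays well below 100 Mbit/s.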

In the red box we can see that the 100 Mbit/s limit is reached and packets are lost. The loss itself is not visible in this graph, but the RTP stream analysis shows it.
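How visible such spikes are depends heavily on the graph interval. The sketch below illustrates this with a purely synthetic trace, not my capture: one hypothetical camera sending 66 packets of 1500 bytes back to back every 33 ms, with 120 µs per packet (1500 bytes at 100 Mbit/s, ignoring framing overhead). The helper names `peak_rate_bps` and `synthetic_camera` are made up for this example.

```python
from collections import defaultdict


def peak_rate_bps(packets, bin_us):
    """Peak throughput in bit/s over bins of bin_us microseconds.

    packets: iterable of (timestamp_us, length_bytes) pairs.
    """
    bits_per_bin = defaultdict(int)
    for timestamp_us, length_bytes in packets:
        # Each packet is counted in the bin its timestamp falls into.
        bits_per_bin[timestamp_us // bin_us] += length_bytes * 8
    return max(bits_per_bin.values()) * 1_000_000 / bin_us


def synthetic_camera(frames=30, frame_interval_us=33_000,
                     packets_per_frame=66, packet_bytes=1500,
                     packet_time_us=120):
    """Hypothetical trace: each frame is sent as back-to-back packets."""
    return [(f * frame_interval_us + p * packet_time_us, packet_bytes)
            for f in range(frames) for p in range(packets_per_frame)]


trace = synthetic_camera()
print("peak, 1 s bins :", peak_rate_bps(trace, 1_000_000) / 1e6, "Mbit/s")
print("peak, 1 ms bins:", peak_rate_bps(trace, 1_000) / 1e6, "Mbit/s")
```

With 1 s bins this single synthetic stream looks like a steady flow at roughly its configured bitrate, while 1 ms bins show it running at about line rate during each burst. Three such streams bursting at once would exceed what one 100 Mbit port can forward.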

All cameras and the switch support flow control (https://en.wikipedia.org/wiki/Ethernet_flow_control). I would expect the switch to use flow control to smooth out the network traffic and avoid this problem.

- Is my understanding of flow control wrong?

- Is there a different mechanism that might help?

Altering the transport protocol from RTP to something else is not possible. Switching to Gigabit is not possible.