First, some terminology corrections:

- A 'Lane' is a single full-duplex path consisting of one Tx differential pair and one Rx differential pair.

- A 'Link' is a single point-to-point connection between exactly two devices, no more, no less. A link may consist of one or more lanes.

A link is point-to-point, so it cannot be split up and recombined.
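As a rough illustration of the "exactly two devices" rule, here is a minimal Python sketch. The function names and the dictionary that maps lane numbers to link partners are made up for this example; they are not part of any PCIe tooling.

```python
def link_partners(lane_wiring):
    """Return the set of devices found at the far end of a port's lanes.

    lane_wiring is a hypothetical map: lane number -> name of the device
    that lane is wired to.
    """
    return set(lane_wiring.values())


def is_valid_link(lane_wiring):
    """A link is valid only if every lane goes to the same single partner."""
    return len(link_partners(lane_wiring)) == 1


# All 16 lanes of a port wired to one device: a valid x16 link.
all_to_rc = {lane: "RC" for lane in range(16)}

# Lanes 0-3 wired to one device and lanes 4-15 to another: not a link.
split = {lane: ("SWITCH" if lane < 4 else "RC") for lane in range(16)}

print(is_valid_link(all_to_rc))  # True
print(is_valid_link(split))      # False
```

The second case has two partners on one port, which is exactly what "point-to-point" rules out.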

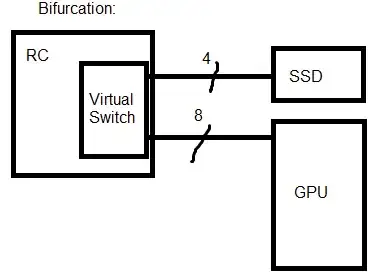

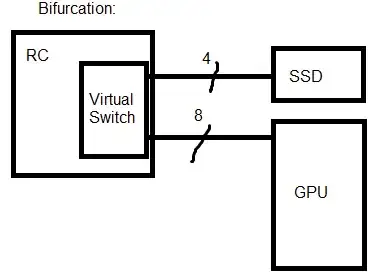

Under certain circumstances a device may support "bifurcation", whereby it takes its available lanes and splits them into multiple links. However, this is not required by the specification, nor is it always available. It is a hardware-specific feature in which the device reconfigures itself to act as a virtual switch.

It is not possible to take the lanes from multiple links and combine them into a single link (the reverse of bifurcation). This would be called aggregation and there is no defined support for it.
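To make the bifurcation idea concrete, here is a small Python sketch that splits a port's lanes into independent links. It is a simplified model, not a description of any real firmware: the `bifurcate` function is hypothetical, lanes are assumed to be handed out contiguously starting at lane 0, and the set of widths is the one historically listed in the PCIe specification. Which modes a real device offers is hardware specific.

```python
# Link widths historically defined by the PCIe specification.
VALID_WIDTHS = {1, 2, 4, 8, 12, 16, 32}


def bifurcate(total_lanes, widths):
    """Split a port's lanes into independent links, one per requested width.

    Returns a list of (first_lane, last_lane) tuples. Lanes are assigned
    contiguously, which is an assumption made for illustration only.
    """
    for w in widths:
        if w not in VALID_WIDTHS:
            raise ValueError(f"x{w} is not a PCIe link width")
    if sum(widths) > total_lanes:
        raise ValueError("requested links need more lanes than the port has")

    links = []
    start = 0
    for w in widths:
        links.append((start, start + w - 1))
        start += w
    return links


# A x16 port in x8,x4,x4 mode becomes three separate links.
print(bifurcate(16, [8, 4, 4]))  # [(0, 7), (8, 11), (12, 15)]
```

Note that there is no inverse operation in this model, matching the point above: each resulting link is its own point-to-point connection.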

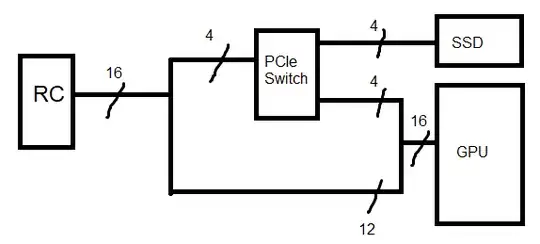

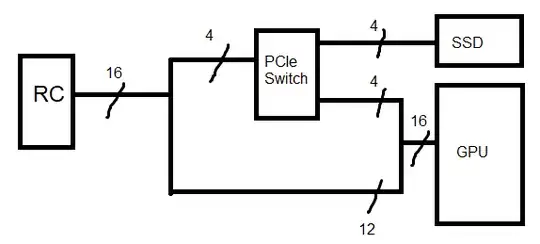

From my understanding of your question, what you are asking is if you can do this:

If that is the case, the simple answer is no, you cannot. It will not work the way you expect, if it works at all.

Wiring things up like this will produce one of the following results, depending on which four lanes you split off:

If the four lanes split off are 0-3 on both the RC and GPU, then either:

- The GPU and SSD will link up as either x1 or x4 devices behind the PCIe switch, and the RC will see the switch on a x1 or x4 link.

- Nothing will be detected, because the 12 remaining connected lanes confuse the upstream port and prevent a link from being established.

If the four lanes are any others (e.g. upper four, or random selection), then either:

- The GPU will be detected and link up as a x1, x4, x8 (or, highly unlikely, x12) device, depending on which link widths both it and the RC support. The SSD and PCIe switch will not be detected.

- Nothing will be detected, because the 4 remaining lanes are connected to some other device and confuse the upstream port.

If you were able to configure bifurcation on the RC (for example turning the port into a x8,x4,x4 mode), then assuming the PCIe switch is connected to the lanes of one of the x4 links:

- The GPU links up at x1, x4, or x8 directly from the RC, and the SSD appears as a x1 or x4 device behind the PCIe switch.

In any of these cases the four lanes from the PCIe switch to the GPU do nothing at all other than potentially prevent a link forming.

The correct way to wire this up is to use either a PCIe switch or bifurcation alone.

With a switch: use one with at least 36 lanes. Its upstream port connects to the RC with a x16 link, and it is configured with two downstream ports, one for the SSD (x4) and one for the GPU (x16).
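The 36-lane figure is simply the sum of every port on the switch. A tiny Python check, using a hypothetical helper name:

```python
def switch_lanes_needed(upstream_width, downstream_widths):
    """Total lanes a switch needs: its upstream port plus every downstream port."""
    return upstream_width + sum(downstream_widths)


# x16 up to the RC, x4 down to the SSD, x16 down to the GPU.
print(switch_lanes_needed(16, [4, 16]))  # 36
```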

With bifurcation alone, if your RC supports it: here you could have a x4 link for the SSD and a x8 link for the GPU.

Notice how in both cases each link connects to exactly two devices. These are therefore valid links.

Depending on your GPU, you may not be able to get a x8 link, in which case the bifurcated setup will form a x1 link instead. This is because PCIe devices are only required to support a x1 link (needed for establishing contact) and their native link width (e.g. x16). Intermediate widths such as x4 or x8 on a x16 device are not necessarily supported. Many consumer desktop GPUs do, however, support both x8 and x16.
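The fallback to x1 can be sketched as "pick the widest width both ends support that fits in the wired lanes". The Python below is a deliberately simplified model with made-up names and example width sets. It ignores real training details such as lane ordering and lane reversal.

```python
def negotiated_width(widths_a, widths_b, wired_lanes):
    """Return the widest link width supported by both ends that fits the wiring.

    widths_a / widths_b: sets of link widths each device supports.
    wired_lanes: number of lanes physically connected between them.
    Returns None if the two ends have no usable width in common.
    """
    common = {w for w in set(widths_a) & set(widths_b) if w <= wired_lanes}
    return max(common) if common else None


rc_x8_port = {1, 4, 8}      # hypothetical bifurcated root port
gpu_flexible = {1, 8, 16}   # GPU that also supports x8
gpu_minimal = {1, 16}       # GPU that supports only the required widths

print(negotiated_width(rc_x8_port, gpu_flexible, 8))  # 8
print(negotiated_width(rc_x8_port, gpu_minimal, 8))   # 1
```

The second call shows the case described above: with x16 unavailable and x8 unsupported, the only remaining common width is x1.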