Is there a minimum delay that must be maintained between two consecutive RS232 frames?

No. There is no such requirement in EIA/RS232C: it specifies neither a minimum nor a maximum delay between frames.

The Start bit of the next character can immediately follow the Stop bit of a character.

Note that the line idles in the Mark (marking) state, which is the same level as the Stop bit.
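To make the framing concrete, here is a minimal Python sketch of an 8N1 transmitter, assuming logic levels as seen at the UART (1 = Mark, 0 = Space; on the RS-232 wire itself Mark is the negative voltage). It shows that two back-to-back frames need no gap: the Stop bit (Mark) of one character is followed directly by the Start bit (Space) of the next. The function names and the `idle_bits` parameter are illustrative, not from any library.

```python
MARK, SPACE = 1, 0  # UART-side logic levels: Mark = idle/Stop level, Space = Start level

def frame_8n1(byte):
    """Return the 10 bit levels of one 8N1 frame: Start, 8 data bits LSB first, Stop."""
    data = [(byte >> i) & 1 for i in range(8)]
    return [SPACE] + data + [MARK]

def serialize(payload, idle_bits=0):
    """Concatenate frames; `idle_bits` Mark bits are inserted between consecutive frames."""
    line = []
    for i, byte in enumerate(payload):
        if i > 0:
            line += [MARK] * idle_bits  # optional idle time, which the spec does not require
        line += frame_8n1(byte)
    return line

bits = serialize(b"AA")               # no idle time between the two characters
print(bits[9], bits[10])              # Stop bit of 1st 'A', Start bit of 2nd: 1 0
```

Any number of idle Mark bits (including zero) between frames produces a valid stream; the receiver simply waits for the next Mark-to-Space edge.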

It is interesting that you make no mention of the Stop bit in the character frame.

> I believe the UART on the computer loses track of the difference between the start bit and a zero. The delay between the two "A" is ~30 µs (measured with a logic analyzer).

You are using the wrong tool for this task! You should be using a 'scope. You cannot analyse a timing problem by viewing a sampled and sanitized rendition of the analog signal.

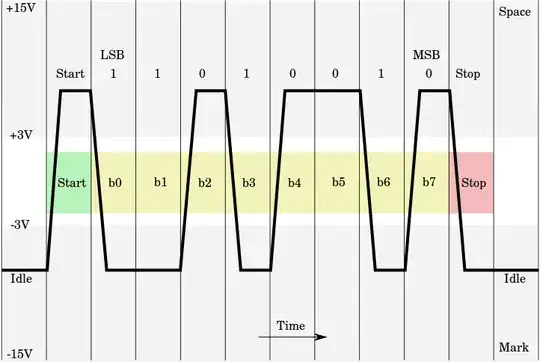

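The only difference between the Start bit and a zero is timing. Character frames are transmitted asynchronously, so the gap between them is arbitrary, but the bits within a frame have to be clocked at the specified baud rate. The receiver takes the first Mark-to-Space transition after the Stop bit (or after idle) as the Start bit and times the sampling of every following bit from that edge.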
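A simplified receiver shows why a 0 data bit cannot be mistaken for a Start bit: the receiver only hunts for a Mark-to-Space edge between frames, and once it has found one it samples at fixed offsets from that edge. This Python sketch assumes 16x oversampling and a single sample at the middle of each bit; both are assumptions for illustration (many real UARTs take several samples around mid-bit and vote). `decode_8n1` and `oversample` are hypothetical helpers, not a real driver API.

```python
SPB = 16  # samples per bit; 16x oversampling is an assumption (common, not universal)

def decode_8n1(samples, spb=SPB):
    """Decode 8N1 frames from a logic-level capture (1 = Mark, 0 = Space)
    taken `spb` times per bit. Returns (received_bytes, framing_error_count)."""
    out = bytearray()
    framing_errors = 0
    i = 1
    while i < len(samples):
        # While hunting, only a Mark-to-Space edge can start a frame; 0 data bits
        # inside a frame are never examined for edges.
        if not (samples[i - 1] == 1 and samples[i] == 0):
            i += 1
            continue
        mid = i + spb // 2             # middle of the Start bit
        stop = mid + 9 * spb           # middle of the Stop bit
        if stop >= len(samples):
            break                      # capture ends mid-frame
        if samples[mid] != 0:
            i += 1                     # too short to be a Start bit: treat as a glitch
            continue
        byte = 0
        for k in range(8):             # data bits, LSB first, each sampled mid-bit
            byte |= samples[mid + (k + 1) * spb] << k
        if samples[stop] == 1:
            out.append(byte)
        else:
            framing_errors += 1        # Stop bit read as Space: framing error
        i = stop + 1                   # resume hunting just after the Stop-bit sample
    return bytes(out), framing_errors

def oversample(bits, spb=SPB, idle_bits=2):
    """Build a capture from bit levels, padded with idle Mark on both sides."""
    pad = [1] * (idle_bits * spb)
    return pad + [b for b in bits for _ in range(spb)] + pad

A = [0, 1, 0, 0, 0, 0, 0, 1, 0, 1]      # 'A' (0x41) as an 8N1 frame
print(decode_8n1(oversample(A + A)))     # back to back, no gap: (b'AA', 0)
```

Because the Stop bit is sampled at its middle and hunting resumes from there, a Start bit that begins right at the end of the Stop bit is still found.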

At 115200 baud, one bit time is 8.68 µs. With 8 data bits plus a Start bit and a Stop bit (10 bits in all), the frame time is 86.8 µs.
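A quick Python check of that arithmetic. The `parity_bits` and `stop_bits` parameters are additions for generality; 8N1 is the case discussed here. The last line expresses the ~30 µs figure from the question in bit times. Since 30 µs is shorter than one 86.8 µs frame, it presumably refers to the idle gap between the two frames rather than their start-to-start spacing; that is an interpretation, not something the question states.

```python
def bit_time_us(baud):
    """Duration of one bit in microseconds."""
    return 1e6 / baud

def frame_time_us(baud, data_bits=8, parity_bits=0, stop_bits=1):
    """Duration of one asynchronous frame: Start + data + parity + Stop bits."""
    return (1 + data_bits + parity_bits + stop_bits) * bit_time_us(baud)

baud = 115200
print(f"bit time:   {bit_time_us(baud):.2f} us")    # 8.68 us
print(f"frame time: {frame_time_us(baud):.1f} us")  # 86.8 us
gap_us = 30.0
print(f"gap: {gap_us / bit_time_us(baud):.2f} bit times")  # 3.46
```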

Your question implies that you have not bothered to look at the EIA/RS232C spec for the minimum rise/fall times and for when the signal is typically sampled. Interesting method for implementing HW.

Perhaps you should also use a frequency counter to measure the baud rate generator at each end. A mismatch of a few percent can usually be tolerated, but a larger mismatch could produce the symptoms you see.
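Why a few percent? In an idealised model where the receiver syncs exactly on the Start-bit edge and samples each bit once at its own mid-bit time, the timing error accumulates across the frame and is largest at the Stop bit. This Python sketch (hypothetical helper names) checks whether every sample still lands inside the intended transmitted bit. In this model the limit is roughly 5% for a 10-bit frame; real receivers lose part of that budget to Start-edge detection uncertainty and slow edges, which is why a few percent is the usual rule of thumb.

```python
def sample_positions(tx_baud, rx_baud, bits_per_frame=10):
    """Position of each receiver sample inside the transmitted bit it is meant to read,
    as a fraction of one transmitted bit (0.0 = leading edge, 1.0 = trailing edge).

    Idealised model: exact sync on the Start-bit edge, then one sample per bit at the
    middle of each bit as timed by the receiver's own clock."""
    ratio = tx_baud / rx_baud          # receiver bit time / transmitter bit time
    return [(k + 0.5) * ratio - k for k in range(bits_per_frame)]

def frame_ok(tx_baud, rx_baud, bits_per_frame=10):
    """True if every sample, up to and including the Stop bit, lands in the right bit."""
    return all(0.0 <= p < 1.0 for p in sample_positions(tx_baud, rx_baud, bits_per_frame))

for pct in (0, 2, 4, 6):
    rx = 115200 * (1 + pct / 100)      # receiver clock running pct% fast
    print(f"{pct}% mismatch: frame_ok={frame_ok(115200, rx)}")  # True, True, True, False
```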

Why are no framing errors reported by the receiver? Instead of just looking at the output, maybe you need to review the statistics of the serial port, e.g. /proc/tty/driver/...
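If the statistics are read from a per-port line in one of the files under /proc/tty/driver/, the receive-error counters can be pulled out with a short Python sketch. It assumes the line format printed by the Linux serial core, where counters such as `fe:` (framing), `pe:` (parity), `brk:`, `oe:` (overrun) and `bo:` (buffer overrun) appear only when nonzero; treat that format, and the example line, as assumptions to check against your own system. `error_counts` is a hypothetical helper.

```python
import re

# Field abbreviations assumed from the Linux serial-core stats line.
ERROR_FIELDS = {"fe": "framing", "pe": "parity", "brk": "break",
                "oe": "overrun", "bo": "buffer_overrun"}

def error_counts(line):
    """Extract receive-error counters from one per-port stats line; absent fields count as 0."""
    counts = {name: 0 for name in ERROR_FIELDS.values()}
    # Match lowercase "key:decimal" pairs; hex fields such as port:000003F8 do not match.
    for key, value in re.findall(r"\b([a-z]+):(\d+)\b", line):
        if key in ERROR_FIELDS:
            counts[ERROR_FIELDS[key]] = int(value)
    return counts

# Hypothetical line for illustration only (not captured from a real system).
sample = "0: uart:16550A port:000003F8 irq:4 tx:120 rx:118 fe:3 oe:1 RTS|CTS|DTR"
print(error_counts(sample))
# {'framing': 3, 'parity': 0, 'break': 0, 'overrun': 1, 'buffer_overrun': 0}
```

A framing counter that stays at zero while characters are visibly corrupted would point away from a bit-timing problem at the UART.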