My ultimate goal is to get meaningful snapshots from videos that are either 30 min or 1 hour long. "Meaningful" is a bit ambitious, so I have simplified my requirements.

The image should be crisp, not blurry.

Initially, I thought this meant getting a "keyframe". Since there are many keyframes, I decided to choose the one closest to the third minute of the video, which was generally "meaningful" enough for me. I followed the advice in "FFmpeg command to find key frame closest to 3rd minute".
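One way to get the keyframe nearest the 3-minute mark from a script is to list keyframe timestamps with ffprobe and pick the closest one. This is a minimal sketch, not necessarily what the linked answer does. It assumes `ffprobe`/`ffmpeg` are on PATH, that your build reports the per-frame `pts_time` field (older builds may only have `pkt_pts_time`), and that the stream starts at t=0, as is typical for MP4. The function names are hypothetical.

```python
import subprocess

def keyframe_times(video):
    """List keyframe timestamps (seconds) using ffprobe."""
    # -skip_frame nokey tells the decoder to skip non-key frames,
    # so ffprobe only reports keyframes.
    result = subprocess.run(
        ["ffprobe", "-v", "error", "-select_streams", "v:0",
         "-skip_frame", "nokey", "-show_entries", "frame=pts_time",
         "-of", "csv=p=0", video],
        capture_output=True, text=True, check=True,
    )
    return parse_times(result.stdout)

def parse_times(text):
    """Parse one timestamp per line; skip blank, 'N/A' or non-numeric lines."""
    times = []
    for line in text.splitlines():
        field = line.strip().split(",")[0]
        try:
            times.append(float(field))
        except ValueError:
            continue  # blank line, 'N/A', or other non-timestamp output
    return times

def closest(times, target=180.0):
    """Return the timestamp nearest to target (default: minute 3)."""
    return min(times, key=lambda t: abs(t - target))

def extract_frame_cmd(video, t, out_png):
    """Build an ffmpeg command that saves the frame at timestamp t as an image."""
    # Input seeking (-ss before -i) decodes and discards frames up to the seek
    # point when re-encoding. Backing off 1 ms guards against ffprobe's rounded
    # timestamp landing just after the keyframe. Assumes the stream starts at t=0.
    seek = max(t - 0.001, 0.0)
    return ["ffmpeg", "-v", "error", "-ss", f"{seek:.6f}", "-i", video,
            "-frames:v", "1", out_png]
```

Usage (hypothetical file names): `subprocess.run(extract_frame_cmd("talk.mp4", closest(keyframe_times("talk.mp4")), "thumb.png"), check=True)`.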

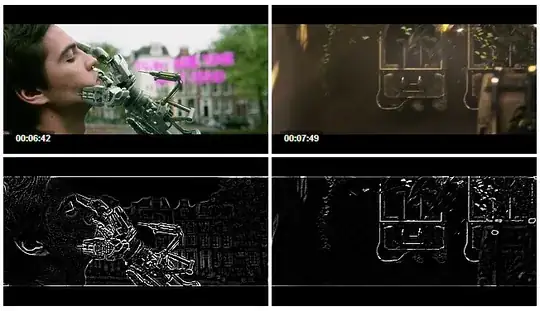

But the problem is that these keyframes are often (not always) blurry. An example is:

I then tried the approach in "Meaningful thumbnails for a Video using FFmpeg", which did give me more meaningful snapshots, but I still often (not always) got blurry frames like the one above.

You will notice that this sort of image is essentially an overlap of two different scenes. Sometimes, however, I get images that work for me, like this:

The above image is not very meaningful, but it is crisp.
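On the blurry frames that look like two overlapping scenes: if they are caught mid-dissolve (an assumption based on the description, not something FFmpeg reports), a sharpness score based on the Laplacian should rank them below crisp frames. Model such a frame I as a linear blend of two sharp scenes A and B with mix α in [0, 1]; since the discrete Laplacian Δ is linear:

```latex
\begin{aligned}
I &= (1-\alpha)A + \alpha B \\
\Delta I &= (1-\alpha)\,\Delta A + \alpha\,\Delta B \\
\operatorname{Var}(\Delta I) &= (1-\alpha)^2 \operatorname{Var}(\Delta A) + \alpha^2 \operatorname{Var}(\Delta B) + 2\alpha(1-\alpha)\operatorname{Cov}(\Delta A, \Delta B)
\end{aligned}
```

If the two scenes' edge responses are roughly uncorrelated and each has variance σ², the halfway frame (α = 1/2) scores about σ²/2, half of either clean frame. This is only a rough model: a dissolve between two very similar shots would lose little sharpness.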

Ideally, I would like FFmpeg not to return blurry frames. Alternatively, I would like to use a script to detect blurry frames and select the least blurry of, say, five frames. Does anyone know how to do this?
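A common heuristic for the script approach is the variance of the Laplacian: crisp frames have strong edges and score high, while blurry or ghosted frames score lower. Below is a minimal pure-Python sketch, assuming `ffmpeg` is on PATH. It samples five timestamps around the three-minute mark, decodes each as a small grayscale image, and keeps the highest-scoring one. The function names, the 320×180 analysis size and the 2-second spacing are hypothetical choices. The score has no universal blur threshold, so it is only meaningful for comparing frames of the same video.

```python
import subprocess

WIDTH, HEIGHT = 320, 180  # hypothetical analysis size; frames are rescaled to this

def grab_gray_frame(video, t):
    """Decode one frame at time t as 8-bit grayscale rows (list of lists)."""
    raw = subprocess.run(
        ["ffmpeg", "-v", "error", "-ss", str(t), "-i", video, "-frames:v", "1",
         "-vf", f"scale={WIDTH}:{HEIGHT}", "-f", "rawvideo", "-pix_fmt", "gray", "-"],
        capture_output=True, check=True,
    ).stdout
    if len(raw) < WIDTH * HEIGHT:
        raise ValueError(f"no frame decoded at t={t}")
    # gray rawvideo is one byte per pixel, row by row
    return [list(raw[y * WIDTH:(y + 1) * WIDTH]) for y in range(HEIGHT)]

def laplacian_variance(img):
    """Variance of the 4-neighbour Laplacian over interior pixels; higher = sharper."""
    vals = []
    for y in range(1, len(img) - 1):
        row, up, down = img[y], img[y - 1], img[y + 1]
        for x in range(1, len(row) - 1):
            vals.append(up[x] + down[x] + row[x - 1] + row[x + 1] - 4 * row[x])
    if not vals:
        return 0.0  # image too small to have interior pixels
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)

def sharpest(frames):
    """Index of the frame with the highest Laplacian variance."""
    scores = [laplacian_variance(f) for f in frames]
    return max(range(len(scores)), key=lambda i: scores[i])

def pick_sharpest_time(video, center=180.0, n=5, step=2.0):
    """Sample n timestamps around center (step seconds apart); return the sharpest."""
    times = [max(center + (i - n // 2) * step, 0.0) for i in range(n)]
    frames = [grab_gray_frame(video, t) for t in times]
    return times[sharpest(frames)]
```

For example, `pick_sharpest_time("talk.mp4")` (hypothetical file) returns a timestamp to pass to `ffmpeg -ss <time> -i talk.mp4 -frames:v 1 thumb.png` for the full-resolution snapshot. If OpenCV is available, `cv2.Laplacian(gray, cv2.CV_64F).var()` uses the same 4-neighbour kernel by default and is much faster than the pure-Python loop.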