Not sure if this is the right place to ask such a question, but I don't see a more relevant place on Stack Exchange.

So recently I've become kind of obsessed with using power more efficiently when it comes to my computer, and with the long-term cost of running a computer off its power supply. I have also been reading about the 80 Plus standard.

Anyway, here is my question. I recently read that the only way to really know how many watts your computer is drawing is to buy a Kill A Watt meter, which I did. I actually bought the EZ version, which shows cost along with a number of other options.

At idle (CPU ~1-5%), my PC draws ~100 watts according to this meter. When I stress my computer to around 70-80% CPU usage, it draws up to ~200 watts. That load includes browsing 3 or 4 CPU-heavy websites, running a high-end game in windowed mode, playing a 10 GB Blu-ray movie, and listening to music, all at the same time.

I'm really trying to understand why on earth people are buying power supplies rated at 600, 850, or sometimes even 1000+ watts. Can one of you electrical geniuses explain this to me?

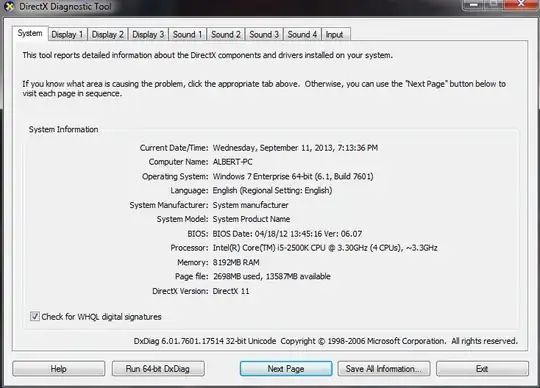

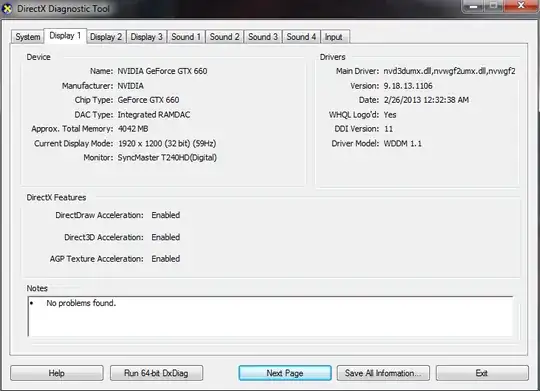

My computer specs:

Other specs: one 128 GB SSD, a 2 TB WD Green hard drive, a 1 TB hard drive, one optical mouse, one USB keyboard, no CD-ROM drive, and a 430 W Thermaltake power supply.
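
To put rough numbers on why I'm confused, here is a small sketch of the arithmetic using my readings (~100 W idle, ~200 W under load, 430 W supply). The meter measures draw at the wall, while the power supply's rating is for what it delivers to the components, so I scale by an efficiency figure. The 0.80 efficiency, the 8 hours per day, and the 0.12 per kWh price are all assumptions I made up for illustration, not values I measured.

```python
def psu_load_fraction(wall_watts, psu_rated_watts, efficiency):
    """Estimate what fraction of the PSU's rated output is in use.

    wall_watts is the reading from the meter (AC side). Multiplying by
    efficiency gives a rough estimate of the DC power delivered.
    """
    dc_watts = wall_watts * efficiency
    return dc_watts / psu_rated_watts


def annual_cost(wall_watts, hours_per_day, price_per_kwh):
    """Estimate yearly electricity cost for a constant wall draw."""
    kwh_per_year = wall_watts / 1000 * hours_per_day * 365
    return kwh_per_year * price_per_kwh


if __name__ == "__main__":
    EFFICIENCY = 0.80      # assumed, not measured
    HOURS_PER_DAY = 8      # assumed usage
    PRICE_PER_KWH = 0.12   # assumed electricity price

    for label, watts in (("idle", 100), ("load", 200)):
        fraction = psu_load_fraction(watts, 430, EFFICIENCY)
        cost = annual_cost(watts, HOURS_PER_DAY, PRICE_PER_KWH)
        print(f"{label}: {fraction:.0%} of PSU rating, about {cost:.2f} per year")
```

With these assumed figures, even my heaviest load stays well under half of what the 430 W unit is rated for, which is why the 600 W and larger supplies puzzle me.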