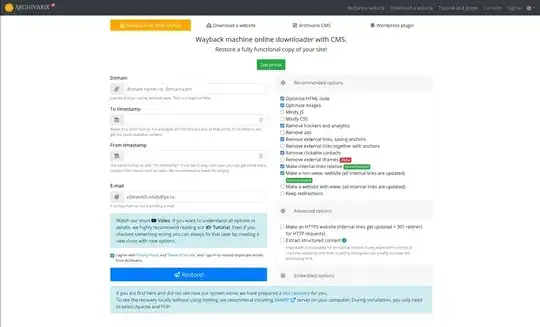

I want to get all the files for a given website at the Internet Archive’s Wayback Machine. Reasons might include:

- The original author did not archive their own website and it is now offline; I want to make a public cache of it.

- I am the original author of some website and lost some content. I want to recover it.

- …

How do I do that?

Keep in mind that the Internet Archive’s Wayback Machine has its own quirks: links in an archived page do not point to the archive itself, but to the original web page, which might no longer be there. JavaScript is used client-side to rewrite the links, so a trick like a recursive wget won't work.
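To make the problem concrete, here is a minimal sketch of the kind of approach I imagine might work instead of following links: ask the archive for a list of captured URLs, then build a direct archive URL for each one. It assumes the Wayback Machine's CDX search endpoint and the `id_` URL modifier (which should return the capture without rewritten links) behave as I understand them. The function names and `example.com` are hypothetical placeholders, and I have not verified this against a large site.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: the Wayback Machine's CDX search endpoint lives here.
CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def build_cdx_query(domain):
    """Build a CDX query URL listing captures under `domain` (hypothetical helper)."""
    params = {
        "url": domain + "/*",          # every path under the domain
        "output": "json",              # JSON rows instead of plain text
        "fl": "timestamp,original",    # only the fields needed below
        "filter": "statuscode:200",    # skip redirects and errors
        "collapse": "urlkey",          # one capture per distinct URL
    }
    return CDX_ENDPOINT + "?" + urlencode(params)


def parse_cdx_rows(body):
    """Turn the JSON response into (timestamp, original_url) tuples.

    The first row of the JSON output is a header row, so it is skipped.
    """
    rows = json.loads(body) if body.strip() else []
    return [tuple(row) for row in rows[1:]]


def raw_snapshot_url(timestamp, original):
    """Archive URL for one capture; `id_` is assumed to disable link rewriting."""
    return f"https://web.archive.org/web/{timestamp}id_/{original}"


if __name__ == "__main__":
    # example.com is a placeholder for the site to recover.
    with urlopen(build_cdx_query("example.com")) as response:
        captures = parse_cdx_rows(response.read().decode("utf-8"))
    for timestamp, original in captures:
        print(raw_snapshot_url(timestamp, original))
```

Each printed URL could then be downloaded one by one, since it points at the archive rather than at the dead original site. Whether this scales, or whether a dedicated tool already does it better, is part of what I am asking.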