I suggest you read the FAQ.

Here is a quote from the WinHTTrack website:

Question: Some sites are captured very well, others aren't. Why?

Answer: There are several reasons (and solutions) for a mirror to fail. Reading the log files (and this FAQ!) is generally a VERY good idea to figure out what occurred.

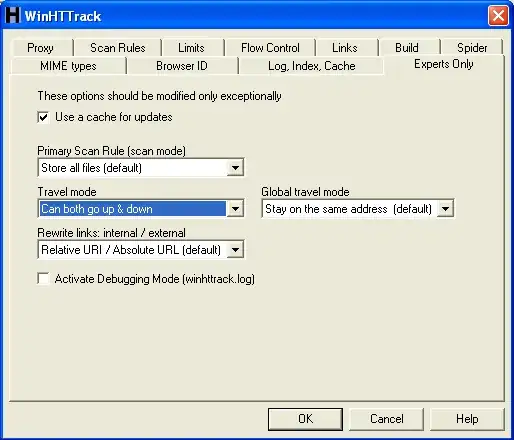

Links within the site refer to external links, or to links located in other (or upper) directories, which are not captured by default - the use of filters is generally THE solution, as this is one of the most powerful options in HTTrack. See the questions/answers above.

Website 'robots.txt' rules forbid access to several parts of the website - you can disable them, but only with great care!
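If you want to see which parts of a site its `robots.txt` forbids before mirroring it, Python's standard `urllib.robotparser` module can evaluate the rules. The sketch below parses a made-up `robots.txt` from a string instead of downloading one, so the paths and the `www.example.com` host are hypothetical; `"HTTrack"` is simply the user-agent name passed to the check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real check would fetch https://<site>/robots.txt
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /cgi-bin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse from memory, no network access

for path in ["/index.html", "/private/report.html", "/cgi-bin/search"]:
    url = "https://www.example.com" + path
    verdict = "allowed" if parser.can_fetch("HTTrack", url) else "blocked"
    print(path, verdict)
```

Any page reported as "blocked" here is one a robots-respecting mirror would leave out, which explains "missing" parts of a capture.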

HTTrack is filtered (by its default User-Agent identity) - you can change the browser User-Agent identity to an anonymous one (MSIE, Netscape...) - here again, use this option with care, as this measure might have been put in place to avoid bandwidth abuse (see also the abuse FAQ!)
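At the HTTP level, the "identity" is simply the `User-Agent` request header, which a server is free to inspect and reject. The following sketch uses Python's `urllib.request` (not HTTrack) to show a request with the library's default identity next to one carrying a browser-like string; the URL and the browser string are placeholders, and nothing is actually sent.

```python
import urllib.request

URL = "https://www.example.com/"  # placeholder URL

# No explicit header: urllib only adds its default "Python-urllib/3.x"
# User-Agent when the request is actually opened.
default_req = urllib.request.Request(URL)

# Explicit browser-like identity (placeholder string).
browser_req = urllib.request.Request(
    URL,
    headers={"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"},
)

# urllib stores header names capitalized, hence "User-agent".
print(default_req.get_header("User-agent"))  # None until the request is sent
print(browser_req.get_header("User-agent"))
```

Passing `browser_req` to `urllib.request.urlopen()` would send the custom header; the same caution about bandwidth abuse applies.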

There are cases, however, that cannot (yet) be handled:

Flash sites - no full support

Intensive Java/JavaScript sites - might be bogus/incomplete

Complex CGI with built-in redirect, and other tricks - very complicated to handle, and therefore might cause problems

Parsing problem in the HTML code (cases where the engine is fooled, for example by a false comment (`<!--`) with no closing comment (`-->`) detected). Rare cases, but might occur. A bug report is then generally good!

Note: For some sites, setting the "Force old HTTP/1.0 requests" option can be useful, as this option uses more basic requests (no HEAD request, for example). This will cause a performance loss, but will increase compatibility with some CGI-based sites.

PS: There are many reasons why a website can't be captured 100%. I think we at Super User are very enthusiastic, but we won't reverse-engineer a website to discover which system is running behind it (that's my opinion).