The FAT32 Master Boot Record area is probably the most susceptible to abuse, since at the logical level it always has to be in the same place. (Perhaps the card controller handles this by soft-remapping bad sectors, but I am somewhat skeptical that this is implemented in all hardware.) So you could run sfdisk in a loop and see whether you can wreck it that way.
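
If you want to try that, here is a minimal Python sketch. It assumes a Linux host with util-linux `sfdisk` and a scratch card that is not mounted, at a device path of your choosing. It saves the current table with `sfdisk --dump`, writes that dump back (the same dump/restore pattern the sfdisk man page describes), reads the table again, and stops at the first iteration where the write fails or the table no longer matches. The function names and the injectable `run` callable are hypothetical, added only so the loop can be exercised without hardware.

```python
import subprocess
from typing import Callable, Optional, Sequence, Tuple

# A runner takes (argv, stdin_text) and returns (exit_code, stdout).
# Injecting it lets the loop be tested without touching a real card.
Runner = Callable[[Sequence[str], Optional[str]], Tuple[int, str]]


def run_cmd(argv: Sequence[str], stdin: Optional[str] = None) -> Tuple[int, str]:
    """Run a command, feeding `stdin` as text; return (exit code, stdout)."""
    proc = subprocess.run(list(argv), input=stdin, capture_output=True, text=True)
    return proc.returncode, proc.stdout


def hammer_partition_table(device: str, iterations: int,
                           run: Runner = run_cmd) -> Optional[int]:
    """Rewrite the partition table of `device` over and over.

    Returns the 1-based iteration at which the table could not be written
    or did not read back identically, or None if every iteration succeeded.
    WARNING: destructive if the card misbehaves; use a scratch card only.
    """
    code, original = run(["sfdisk", "--dump", device], None)
    if code != 0:
        raise RuntimeError(f"cannot read the partition table of {device}")
    for i in range(1, iterations + 1):
        # Feed the saved dump back to sfdisk, which rewrites the table.
        code, _ = run(["sfdisk", device], original)
        if code != 0:
            return i
        # Read the table back and compare it with what we wrote.
        code, current = run(["sfdisk", "--dump", device], None)
        if code != 0 or current != original:
            return i
    return None
```

For example, `hammer_partition_table("/dev/sdX", 10000)` (with `/dev/sdX` replaced by your card reader's device) returns `None` if the table survived every round. Writing the same bytes to sector 0 does not guarantee the controller writes the same physical flash block; that depends on the card's remapping, which is exactly what this would be poking at.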

But I am going to beg you to do whatever you can to improve hardware reliability, instead of trying to handle bad hardware in software. The problem is that SD cards fail in all kinds of weird ways. They become unreadable, they become unwriteable, they give you bad data, they time out during operations, etc. Trying to predict all the ways a card can fail is very difficult.
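
To get a feel for how differently these failures surface, here is a minimal Python probe. It is a hypothetical helper, not a complete card test: it writes a random pattern to a file on the mounted card (for example a hypothetical `/mnt/sd/probe.bin`), flushes it with `fsync`, asks the kernel to drop the cached pages, and reads it back. It can distinguish an unwriteable card, an unreadable card and one that returns bad data, but it does not detect timeouts or damage outside the region it touches, which rather proves the point.

```python
import hashlib
import os
from enum import Enum


class ProbeResult(Enum):
    OK = "ok"
    UNWRITABLE = "unwritable"
    UNREADABLE = "unreadable"
    BAD_DATA = "bad_data"


def probe_card(path: str, size: int = 1 << 20) -> ProbeResult:
    """Write `size` random bytes to `path` on the card, read them back, compare."""
    payload = os.urandom(size)
    expected = hashlib.sha256(payload).digest()
    try:
        fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
        try:
            view = memoryview(payload)
            while view:  # os.write may write fewer bytes than requested
                written = os.write(fd, view)
                view = view[written:]
            os.fsync(fd)  # flush to the device (the card may still cache it)
            try:
                # Hint the kernel to drop cached pages so the read below
                # (hopefully) comes from the card; Linux-only, may be ignored.
                os.posix_fadvise(fd, 0, 0, os.POSIX_FADV_DONTNEED)
            except (AttributeError, OSError):
                pass
        finally:
            os.close(fd)
    except OSError:
        return ProbeResult.UNWRITABLE
    try:
        with open(path, "rb") as f:
            data = f.read()
    except OSError:
        return ProbeResult.UNREADABLE
    if hashlib.sha256(data).digest() != expected:
        return ProbeResult.BAD_DATA
    return ProbeResult.OK
```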

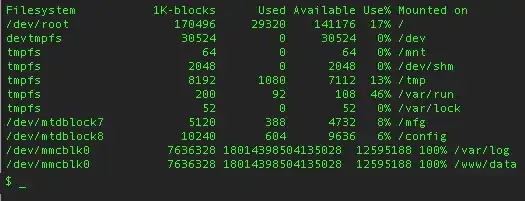

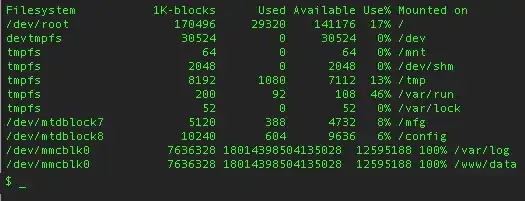

Here's one of my favorite failures, "big data mode":

SD cards are commodity consumer products under tremendous cost pressure. Parts change rapidly and datasheets are hard to come by. Counterfeit product is not unheard of. For cheap storage they are tough to beat, but while SSDs make reliability a priority, the priorities for SD cards are speed, capacity and cost (probably not in that order).

Your first line of defense is to use a solderable eMMC part with a real datasheet from a reputable manufacturer instead of a removable SD card. Yes, eMMC costs more per GB, but the part will stay in production longer, and at least you know what you are getting. Soldering the part down also avoids a whole host of problems that come with a removable card (cards yanked out during writes, poor electrical contact, etc.).

If your product needs removable storage, or it's just too late to change anything, then consider either spending the extra money on "industrial" grade cards or treating the cards as disposable. What we do (under Linux) is fsck the card on boot and reformat it if any errors are reported, since losing the card's contents is acceptable in our use case. Then we fsck it again. If it still reports errors after reformatting, we RMA it and replace the hardware with a newer variant that uses eMMC.
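
That boot-time policy can be sketched in Python roughly as follows. This is a hypothetical illustration of the steps, not the exact script we run: it assumes a FAT32 partition on the card, the dosfstools `fsck.vfat` and `mkfs.vfat` tools, and that the partition is not mounted yet. `fsck.vfat -n` checks without writing anything, and any nonzero exit status is treated as "errors reported".

```python
import subprocess
from enum import Enum
from typing import Callable, Sequence


class CardState(Enum):
    CLEAN = "clean"              # first check reported no errors
    REFORMATTED = "reformatted"  # errors found, card passed after reformat
    NEEDS_RMA = "needs_rma"      # still failing after a reformat


# A runner takes an argv list and returns the exit status (injectable for tests).
Runner = Callable[[Sequence[str]], int]


def run_status(argv: Sequence[str]) -> int:
    """Run a command and return its exit status."""
    return subprocess.run(list(argv)).returncode


def check_card_on_boot(partition: str, run: Runner = run_status) -> CardState:
    # -n: check only, never write; any nonzero status counts as "errors".
    check = ["fsck.vfat", "-n", partition]
    if run(check) == 0:
        return CardState.CLEAN
    # Destroys everything on the partition; only acceptable for disposable data.
    if run(["mkfs.vfat", "-F", "32", partition]) != 0:
        # Assumption: a card that cannot even be reformatted is treated as failed.
        return CardState.NEEDS_RMA
    # Check the freshly formatted filesystem once more.
    if run(check) == 0:
        return CardState.REFORMATTED
    return CardState.NEEDS_RMA
```

On a device this might be called as `check_card_on_boot("/dev/mmcblk0p1")` (a hypothetical partition name) from an early boot service, with `NEEDS_RMA` raising whatever alert your product uses.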

Good luck!