The resolution of the 4k display is not going to be your problem; the problem lies in whatever software you are using.

A 4096 x 2160 image (equivalent to your 4k display) at 4 bytes per pixel (RGBA) would be 4096 x 2160 x 4 ~= 34MB. That amount of data is sent to the display 60 times per second, but only a couple of buffers of that size are held in memory for the display at any one time.

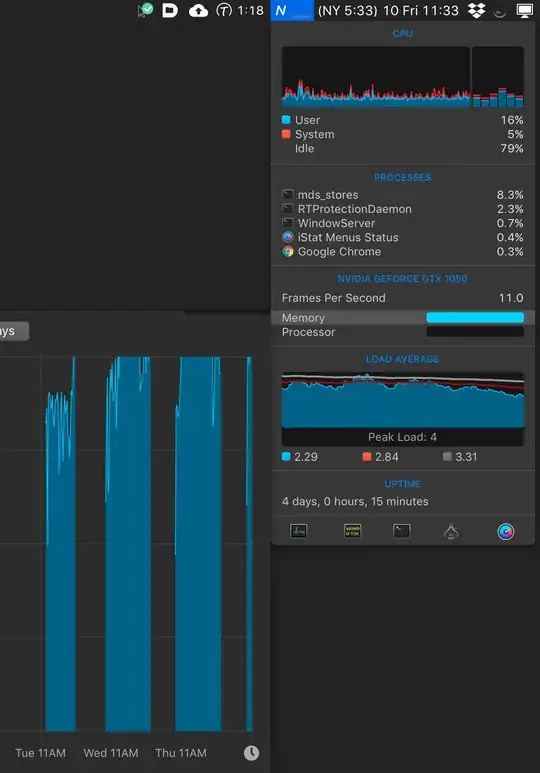

Even assuming a couple of buffers, the display itself takes up less than 100MB, so the question becomes just how much graphics RAM the programs you are using consume.
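As a quick check of that arithmetic, here is a minimal Python sketch. It assumes an uncompressed 4096 x 2160 frame at 4 bytes per pixel and a hypothetical count of one to three full-screen buffers; real drivers and compositors may allocate differently, so treat the numbers as a rough lower bound rather than a measurement. The function names are made up for illustration.

```python
# Rough framebuffer arithmetic for a 4096 x 2160 RGBA display.
# Assumption: uncompressed frames, 8 bits each for R, G, B and A.
WIDTH, HEIGHT = 4096, 2160
BYTES_PER_PIXEL = 4
MIB = 1024 * 1024


def frame_bytes(width: int, height: int, bytes_per_pixel: int = BYTES_PER_PIXEL) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


def buffers_mib(buffer_count: int) -> float:
    """Memory taken by `buffer_count` full-screen buffers, in MiB."""
    return buffer_count * frame_bytes(WIDTH, HEIGHT) / MIB


if __name__ == "__main__":
    for count in (1, 2, 3):
        print(f"{count} buffer(s): {buffers_mib(count):.2f} MiB")

    # The 2GB card from the question, taken here as 2048 MiB.
    vram_mib = 2 * 1024
    share = buffers_mib(2) / vram_mib
    print(f"Two buffers as a share of 2GB: {share:.1%}")
```

Under these assumptions a single frame is 33.75 MiB and a pair of buffers is 67.5 MiB, a few percent of a 2GB card, which is why the display resolution on its own is unlikely to be what fills your VRAM.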

The card needs to have the internal bandwidth and outputs capable of driving a 4k display, but that is proven by the fact that you can plug the display in and use it in the first place. You have enough memory and the outputs are fast enough.

It's the programs doing their own rendering and compositing before passing it to the graphics card that are eating up your VRAM.

Indeed, most modern programs rely much more heavily on the graphics card than they used to, and it could be this peculiar form of "bloat" that is your issue.

If you are finding that 2GB simply isn't enough for your current use case, then yes, more VRAM is needed.