I think my machine slows down because it runs out of memory and starts hitting swap. I've watched Hard Faults/sec in Resource Monitor, and it's often over 1000. This happens mostly during recompilation, but also when my source code editor does something intensive.

But 1000 is just an absolute number, and I'm not sure whether it's actually the cause. SSDs have fast random access, so 1000 per second may be fine.
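As a rough back-of-envelope check on whether 1000 per second is a lot, the total time spent blocked is about the fault rate multiplied by the average time to service one fault. This is a minimal sketch under loud assumptions: each hard fault is treated as one synchronous disk read, faults are assumed not to overlap or be clustered, the latencies are illustrative SSD guesses rather than measurements from this machine, and `stall_fraction` is a hypothetical helper.

```python
def stall_fraction(faults_per_sec: float, latency_us: float) -> float:
    """Seconds spent blocked on hard faults per second of wall-clock time.

    Assumes every hard fault is one synchronous disk read taking
    `latency_us` microseconds, and that faults are serviced one after
    another (no overlap between threads, no clustering of reads).
    Both are simplifications.
    """
    return faults_per_sec * latency_us / 1_000_000


# Illustrative SSD read latencies in microseconds (assumed, not measured).
for latency_us in (50, 100, 200):
    share = stall_fraction(1000, latency_us)
    print(f"{latency_us:>3} us per fault -> {share:.0%} of each second spent waiting")
```

Even a noticeable share of blocked time only costs throughput if nothing else could use the CPU in the meantime, which depends on whether other processes are ready to run.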

If I understand correctly, when a program accesses a page that isn't in main memory, it takes a page fault, and the OS reads that page back from disk.

Presumably the faulting process then gives up the CPU to another process while it waits for the read.

So in theory, one could get lots of page faults without it affecting throughput significantly, if there are other processes ready to run.

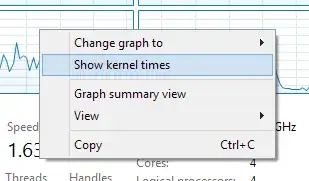

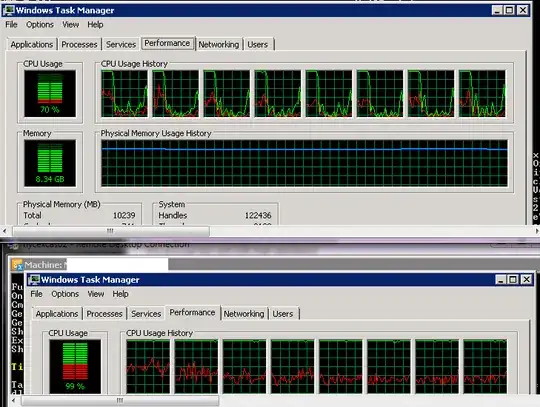

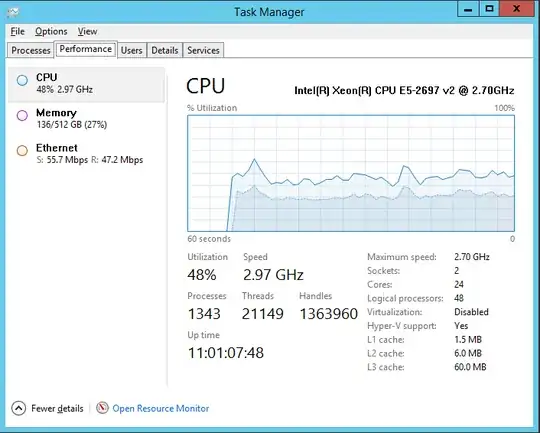

So what I really want to know is: what percentage of CPU time is spent with the processor idle while at least one process is waiting on swap?
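To make the figure I'm asking about concrete, here is a minimal sketch of how it would be computed from periodic samples, treating the CPU as a single unit for simplicity. The `Sample` record, its fields, and `paging_stall_share` are hypothetical; I'm not assuming any Windows counter actually exposes this data.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    # Hypothetical per-interval snapshot (not a real Windows counter).
    cpu_idle: bool          # was the CPU idle during this interval?
    waiting_on_paging: int  # number of processes blocked on a hard fault


def paging_stall_share(samples: list[Sample]) -> float:
    """Share of intervals in which the CPU sat idle while at least one
    process was blocked waiting for a page to be read from disk."""
    if not samples:
        return 0.0
    stalled = sum(1 for s in samples if s.cpu_idle and s.waiting_on_paging >= 1)
    return stalled / len(samples)


samples = [
    Sample(cpu_idle=False, waiting_on_paging=2),  # fault overlapped with useful work
    Sample(cpu_idle=True, waiting_on_paging=1),   # CPU idle because of paging
    Sample(cpu_idle=True, waiting_on_paging=0),   # idle for other reasons
    Sample(cpu_idle=False, waiting_on_paging=0),  # ordinary busy interval
]
print(f"{paging_stall_share(samples):.0%}")  # 25%
```

Only the second interval counts. In the first, the fault overlapped with other work, which is the case where page faults wouldn't hurt throughput.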

Is there anything that reports that figure? I think it would give a good idea of how much a RAM upgrade would help. Alternatively, is there another measurement that's more informative than the number of page faults per second?